Tactile

by: Edmund Oetgen and Akhil Nair

GitLab repository: http://gitlab.doc.gold.ac.uk/anair010/creativeProject

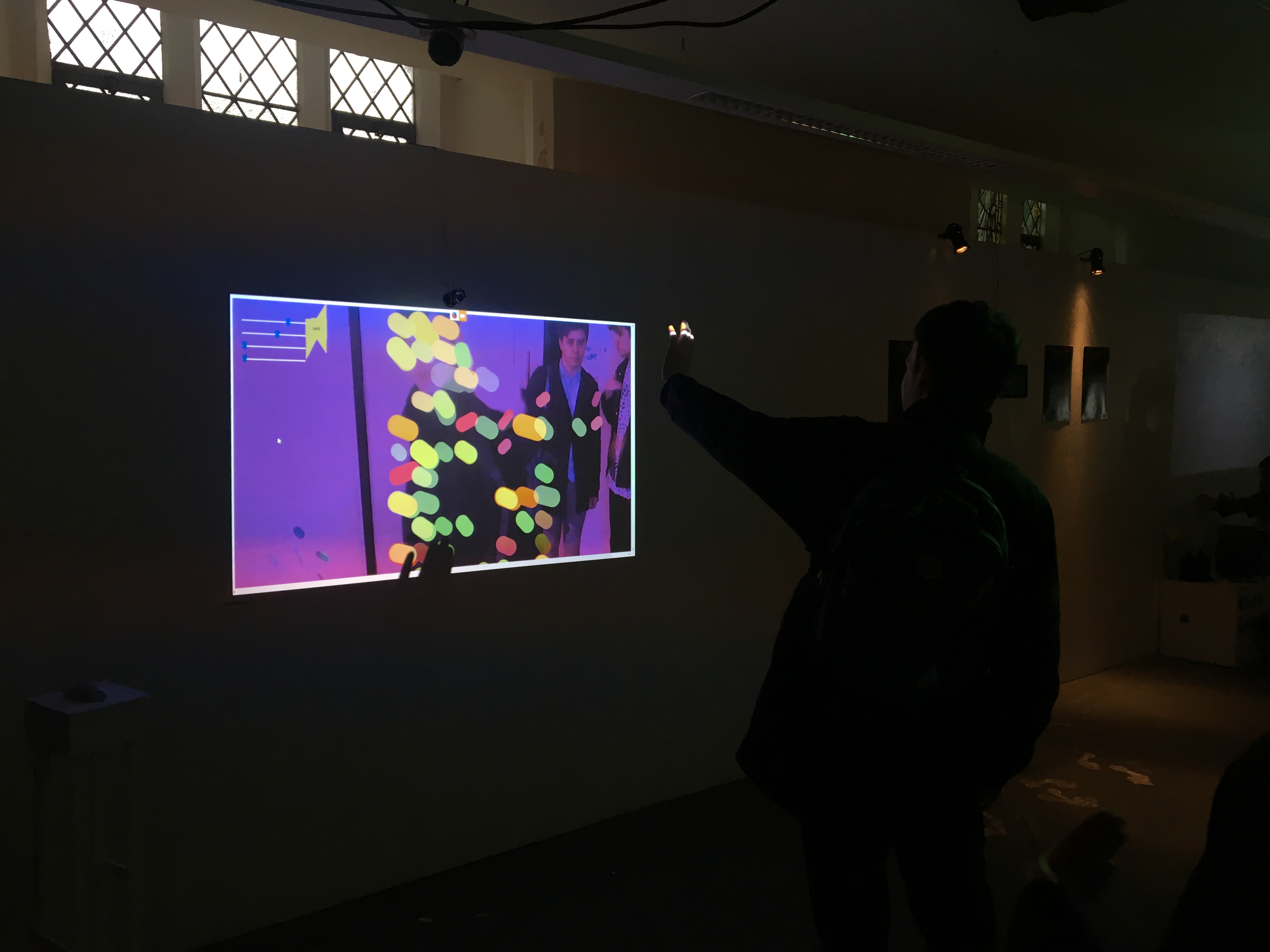

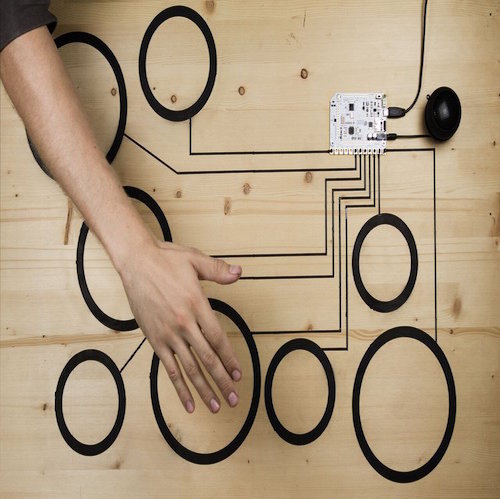

Tactile is an interactive audio-visual installation that explores the relationship between what we see and what we hear. As in a sandbox, users are encouraged to explore sounds with their hands, manipulating a stream of audio with their movements, and through this a relationship forms between action and sound. Tactile aims to get users thinking about the sounds around them: apart from music, people tend to take sound for granted, rarely engaging with it as actively as they do with sights or physical objects.

We use computer vision to detect hand positions and machine learning to recognise a set of predefined gestures. These inputs then control the parameters of a custom-built granular synthesiser that warps, stretches and dismantles the sounds in a variety of ways.

The aim of this piece is to explore how the senses work together to draw conclusions from multiple stimuli, and how this implicit relationship can be exploited to create either more immersive or more unsettling experiences. Ultimately, the project will invite the audience to actively engage with how sound can shape their perception of their surroundings.

Research

When we interact with objects in the real world, sound implicitly describes the physical properties of a given material, action, or environment, supplementing senses such as touch and vision. In media that deprive their audience of touch or visual stimuli, sound becomes a tool for communicating this information. Tactile experiments with how sound can supplement, alter, or displace other sensory information, and our research has explored media that similarly treat sound as a primary carrier of this information.

Classic animation is one context where sound compensates for a lack of visual information. Because the visuals are often abstract representations of their real-world equivalents, exaggerated sound design fills in the missing detail. By using easily recognizable sounds that would not normally be heard in those situations, animators create a metaphorical connection between action and sound rather than a physical one.

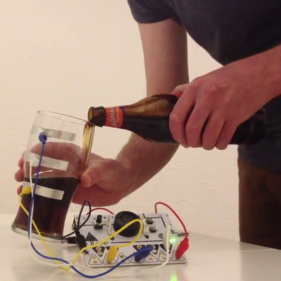

Projects such as Mogees use vibration sensors that let users map the signals produced by striking physical objects in different ways to synthesized sounds or samples. This creates interesting effects when the sounds used are unrelated to the object being struck. This video shows a table mapped to a synthesizer that produces a metallic sound, demonstrating how sound can distort the information a viewer receives visually about a material’s physical properties.

Many installations explore the relationship between sound and action through varying methods. Ouichi Okamoto’s installation Re-Rain, for example, has fifteen speakers, each with an umbrella stood on its cone, continuously looping pre-recorded samples of falling rain. At first, this pairing of sight and sound may elicit the familiar response of being caught in a storm; however, it soon becomes clear that things are not quite right. Beyond the obvious lack of water, the piece subverts the visitor’s preconceived assumptions about the experience. For example, the noise comes from beneath rather than above. The umbrellas themselves have been transformed from protection against the rain into its source, reflecting the sound in strange ways as it strikes their tops, their frames rattling as the volume increases. Okamoto takes a mundane everyday object and uses sound to challenge the audience’s own experiences of it. Our project will also consider the user’s ideas of what an object is based on its sounds, but will instead focus on how these assumptions can affect their interactions with new, unconventional environments.

Another installation, Waltz Binaire’s Soap and Milk, uses a fluid simulation as a medium for user interaction, in this case to parallel the almost organic lifecycle of social media. Every time a tweet about the project is found, a bubble appears; a high-powered infrared camera then detects movements in front of the screen, allowing users to interact with the bubble as it gradually fades away. With our project we intend to achieve an intuitive, playful experience similar to Soap and Milk, but aim to take the interaction a step further with a tangible interface.

Build Commentary

We began our project with two separate technical research projects as starting points for exploring the technologies we planned to use. One focused on sound generation, and the other used computer vision to detect shapes. Because the two communicated over OSC, once we had decided what information needed to pass from one to the other, we could develop each half in parallel without worrying too much about integration.
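To illustrate what actually travels between the two halves, here is a minimal sketch of encoding one OSC message by hand, following the OSC 1.0 rules: null-terminated strings padded to a 4-byte boundary, a type tag string starting with a comma, and big-endian 32-bit floats. In practice an addon such as openFrameworks' ofxOsc would do this for you; the address `/finger` and the two-float layout are hypothetical, not the messages Tactile actually sent.

```cpp
#include <cstdint>
#include <cstring>
#include <string>
#include <vector>

// Append an OSC-string: the characters, a terminating null, then extra nulls
// until the buffer length is a multiple of 4. Assumes the buffer is already
// 4-byte aligned, which holds because every append below preserves alignment.
void appendOscString(std::vector<std::uint8_t>& buf, const std::string& s) {
    buf.insert(buf.end(), s.begin(), s.end());
    buf.push_back(0);
    while (buf.size() % 4 != 0) buf.push_back(0);
}

// Append a float32 argument in big-endian byte order.
void appendOscFloat(std::vector<std::uint8_t>& buf, float f) {
    std::uint32_t bits;
    std::memcpy(&bits, &f, sizeof bits);  // reinterpret the IEEE 754 bits
    for (int shift = 24; shift >= 0; shift -= 8)
        buf.push_back(static_cast<std::uint8_t>((bits >> shift) & 0xFF));
}

// Encode one message carrying an (x, y) position as two float arguments.
std::vector<std::uint8_t> encodeFingerMessage(const std::string& address, float x, float y) {
    std::vector<std::uint8_t> buf;
    appendOscString(buf, address);  // e.g. "/finger" (hypothetical address)
    appendOscString(buf, ",ff");    // type tag string: two float32 arguments
    appendOscFloat(buf, x);
    appendOscFloat(buf, y);
    return buf;
}
```

For `/finger` with arguments 1.0 and 0.5 this yields 20 bytes: 8 for the padded address, 4 for the type tags and 4 for each float. Agreeing on a layout like this up front is what allowed each half to be developed independently.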

The computer vision program used OpenCV to detect shapes drawn in black on a piece of paper, in real time through the webcam, and to count how many edges each shape had. This worked mainly with regular shapes. In the early stages of the project it was unclear what kind of user interaction we would use, so this was a general test to understand how to use OpenCV and what it was capable of.
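One common way to count a shape's edges, and the technique OpenCV's `cv::approxPolyDP` implements, is Ramer-Douglas-Peucker simplification: reduce the traced contour to as few points as possible while staying within a tolerance `eps`, then count the surviving corners. The plain C++ sketch below illustrates that technique and is not necessarily what our program did; it assumes a closed contour given as a list of points, and `Pt`, `simplify` and `countVertices` are our own illustrative names.

```cpp
#include <cmath>
#include <cstddef>
#include <vector>

struct Pt { double x, y; };

// Perpendicular distance from p to the infinite line through a and b.
double distToLine(const Pt& p, const Pt& a, const Pt& b) {
    double dx = b.x - a.x, dy = b.y - a.y;
    double len = std::hypot(dx, dy);
    if (len == 0.0) return std::hypot(p.x - a.x, p.y - a.y);
    return std::fabs(dy * p.x - dx * p.y + b.x * a.y - b.y * a.x) / len;
}

// Ramer-Douglas-Peucker on pts[first..last]: marks the points that must be
// kept so the simplified polyline stays within eps of the original.
void simplify(const std::vector<Pt>& pts, std::size_t first, std::size_t last,
              double eps, std::vector<bool>& keep) {
    if (last <= first + 1) return;
    double maxD = 0.0;
    std::size_t idx = first;
    for (std::size_t i = first + 1; i < last; ++i) {
        double d = distToLine(pts[i], pts[first], pts[last]);
        if (d > maxD) { maxD = d; idx = i; }
    }
    if (maxD > eps) {
        keep[idx] = true;
        simplify(pts, first, idx, eps, keep);
        simplify(pts, idx, last, eps, keep);
    }
}

// Approximate the number of corners (and so edges) of a closed contour.
std::size_t countVertices(const std::vector<Pt>& contour, double eps) {
    const std::size_t n = contour.size();
    if (n < 3) return n;
    // Split the loop at point 0 and the point farthest from it.
    std::size_t far = 0;
    double best = -1.0;
    for (std::size_t i = 1; i < n; ++i) {
        double d = std::hypot(contour[i].x - contour[0].x, contour[i].y - contour[0].y);
        if (d > best) { best = d; far = i; }
    }
    std::vector<Pt> loop(contour);
    loop.push_back(contour[0]);  // index n closes the loop back to point 0
    std::vector<bool> keep(n + 1, false);
    keep[0] = true;
    keep[far] = true;
    simplify(loop, 0, far, eps, keep);
    simplify(loop, far, n, eps, keep);
    std::size_t count = 0;
    for (std::size_t i = 0; i < n; ++i)  // skip index n: it duplicates point 0
        if (keep[i]) ++count;
    return count;
}
```

With a 10×10 square traced one pixel at a time and `eps = 2.0`, the sketch keeps only the four corners. Because point 0 is always kept, a contour that starts mid-edge can report one extra vertex; irregular or hand-drawn shapes are also sensitive to the choice of `eps`, which fits our observation that regular shapes worked best.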

After these projects were completed, we began to consider how the two sides would come together into a cohesive experience, and decided to create an interface that used users’ fingers to manipulate sound and visuals.

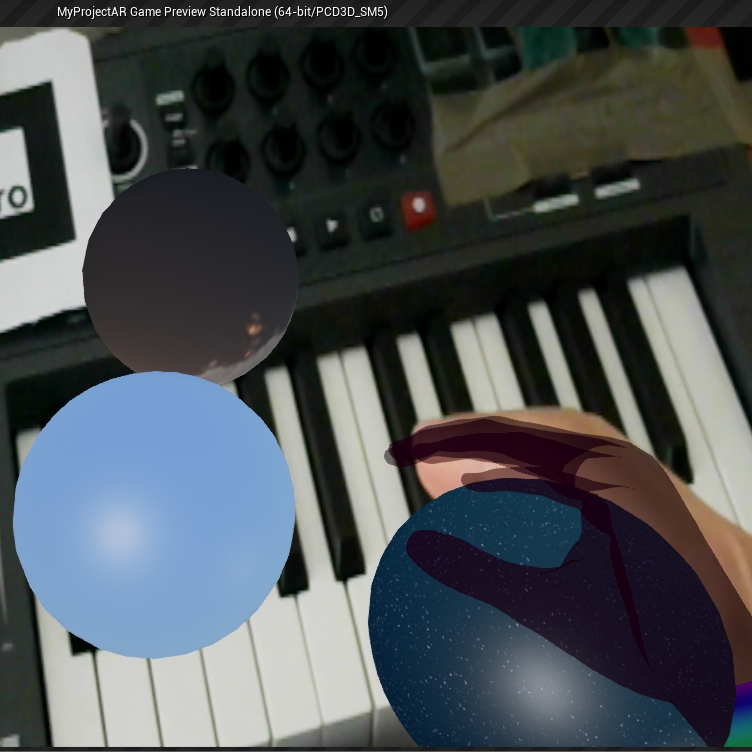

We did initial tests with a piece of frosted, translucent perspex with a PS3 Eye camera underneath. Viewed from the opposite side, the points where any object touched the perspex were very clear and focussed, while everything else was diffused and blurred. This made filtering the webcam image much simpler and allowed us to obtain fairly accurate areas of contact with the surface.

To detect fingers on the perspex, we used OpenCV to filter the webcam feed. Each frame was first blurred to remove noise, and its brightness and contrast were adjusted to bring it into the range we required. Finally, we applied a threshold filter to reduce the image to areas of pure black and white. We then used the OpenCV contour finder to turn the black areas into contours whose positions we could read. The contour finder was constrained to accept only black areas below a maximum size, which filtered out any large contours. Taking the centroids of the resulting contours gave us the finger positions we needed.
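The pipeline above can be sketched without OpenCV in plain C++ so that each step is visible. This is an approximation under stated assumptions: frames are 8-bit grayscale, `alpha` and `beta` play the role of contrast and brightness, a 4-connected flood fill stands in for OpenCV's contour finder, and the minimum-area bound is our own addition for rejecting single-pixel noise. `Gray`, `Blob` and the function names are illustrative. In OpenCV itself the corresponding calls would be `cv::blur`, `cv::convertScaleAbs`, `cv::threshold`, `cv::findContours` and `cv::moments`.

```cpp
#include <algorithm>
#include <cstddef>
#include <cstdint>
#include <vector>

// Minimal row-major 8-bit grayscale image.
struct Gray {
    int w, h;
    std::vector<std::uint8_t> px;
    std::uint8_t at(int x, int y) const { return px[y * w + x]; }
};

struct Blob { double x, y; int area; };  // centroid and pixel count

// Step 1: 3x3 box blur to suppress noise (border pixels average only
// their in-bounds neighbours).
Gray boxBlur(const Gray& in) {
    Gray out = in;
    for (int y = 0; y < in.h; ++y)
        for (int x = 0; x < in.w; ++x) {
            int sum = 0, n = 0;
            for (int dy = -1; dy <= 1; ++dy)
                for (int dx = -1; dx <= 1; ++dx) {
                    int nx = x + dx, ny = y + dy;
                    if (nx < 0 || ny < 0 || nx >= in.w || ny >= in.h) continue;
                    sum += in.at(nx, ny);
                    ++n;
                }
            out.px[y * in.w + x] = static_cast<std::uint8_t>(sum / n);
        }
    return out;
}

// Steps 2-3: contrast (alpha) and brightness (beta), clamped to 0..255,
// then a binary threshold. true marks a "black" pixel.
std::vector<bool> adjustAndThreshold(const Gray& in, double alpha, double beta, int thresh) {
    std::vector<bool> black(in.px.size());
    for (std::size_t i = 0; i < in.px.size(); ++i) {
        double v = std::clamp(alpha * in.px[i] + beta, 0.0, 255.0);
        black[i] = v < thresh;
    }
    return black;
}

// Steps 4-5: group black pixels into 4-connected blobs (a stand-in for a
// contour finder), drop blobs outside [minArea, maxArea], return centroids.
std::vector<Blob> findFingers(const std::vector<bool>& black, int w, int h,
                              int minArea, int maxArea) {
    static const int kNeighbours[4][2] = {{1, 0}, {-1, 0}, {0, 1}, {0, -1}};
    std::vector<bool> seen(black.size(), false);
    std::vector<Blob> result;
    for (int start = 0; start < w * h; ++start) {
        if (!black[start] || seen[start]) continue;
        std::vector<int> stack{start};
        seen[start] = true;
        long sumX = 0, sumY = 0;
        int area = 0;
        while (!stack.empty()) {
            int i = stack.back();
            stack.pop_back();
            int x = i % w, y = i / w;
            sumX += x; sumY += y; ++area;
            for (const auto& d : kNeighbours) {
                int nx = x + d[0], ny = y + d[1];
                if (nx < 0 || ny < 0 || nx >= w || ny >= h) continue;
                int j = ny * w + nx;
                if (black[j] && !seen[j]) { seen[j] = true; stack.push_back(j); }
            }
        }
        if (area >= minArea && area <= maxArea)
            result.push_back({static_cast<double>(sumX) / area,
                              static_cast<double>(sumY) / area, area});
    }
    return result;
}
```

Per frame the calls chain as `findFingers(adjustAndThreshold(boxBlur(frame), alpha, beta, thresh), frame.w, frame.h, minArea, maxArea)`. Every numeric parameter here depends on the lighting, so values like these would have to be tuned for each setup.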

The audio parts of the project were built in openFrameworks using the ofxMaxim addon. With Tactile the goal was never to make a musical instrument, but we did want to create something that was both expressive and fun to use. We therefore aimed to obscure the controls just enough to conceal the software, while keeping them responsive. This also factored into our choice of sounds: we opted for a combination of synthesised sounds, speech and field recordings to highlight the arbitrary distinction between noise and music and to shift the focus onto the sounds themselves.

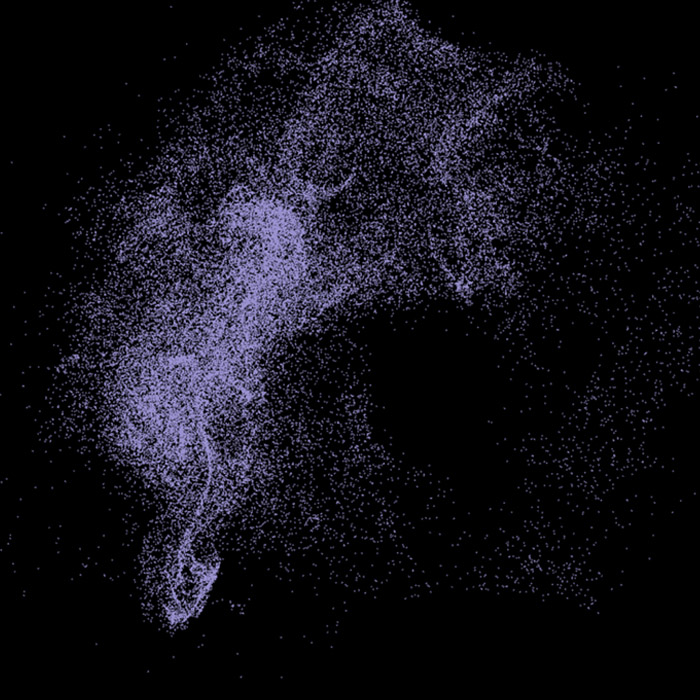

Originally we attempted to use an inverse FFT to merge disparate sounds together. However, after researching and testing this approach, it turned out to be far too ambitious and we were unable to get the results we were after (some of these experiments are shown below). Instead, we based our granular synth on Maximilian’s maxiTimePitchStretch class. We wrapped this class in one of our own and added functions to manipulate its parameters, along with a function that applies envelopes to any of these parameters using maxiEnv objects.
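The idea of enveloping a synth parameter can be illustrated with a minimal linear attack/release envelope that moves one parameter between a base and a target value. This is a hypothetical stand-in written for illustration: it does not use or reproduce Maximilian's `maxiEnv` API, and `ParamEnvelope` and its constructor arguments are our own names.

```cpp
#include <algorithm>

// Hypothetical linear attack/release envelope driving one synth parameter.
// Not Maximilian's maxiEnv: a simplified illustration of the same idea.
class ParamEnvelope {
public:
    ParamEnvelope(double base, double target, int attackSamples, int releaseSamples)
        : base_(base), target_(target),
          attackStep_(1.0 / std::max(1, attackSamples)),
          releaseStep_(1.0 / std::max(1, releaseSamples)) {}

    // Open (finger down) or close (finger released) the envelope gate.
    void trigger(bool on) { gate_ = on; }

    // Advance one sample and return the current parameter value.
    double next() {
        if (gate_) level_ = std::min(1.0, level_ + attackStep_);
        else       level_ = std::max(0.0, level_ - releaseStep_);
        return base_ + (target_ - base_) * level_;
    }

private:
    double base_, target_, attackStep_, releaseStep_;
    double level_ = 0.0;  // 0 = at base value, 1 = at target value
    bool gate_ = false;
};
```

For example, a pitch envelope built as `ParamEnvelope(1.0, 2.0, 4, 2)` rises from 1.0 to 2.0 over four samples once triggered, and falls back to 1.0 over two samples when released. Real envelopes would use far longer times; the tiny values just make the behaviour easy to follow.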

Several instances of this synth class are loaded with different samples, each played on a loop. When the program receives OSC messages containing finger positions, the synths loop on their current grain until the fingers are released. The position values are loaded into a vector and used to manipulate pitch and playback speed.
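A sketch of this control logic, under assumptions: finger positions arrive normalised to 0..1, the mean x position maps to a pitch ratio between 0.5 and 2, and the mean y position maps to a speed value between 0 and 2. `GrainControl`, `FingerPos` and these ranges are hypothetical; the sketch shows only the freeze-while-touched behaviour and the mapping, not the granular synthesis itself.

```cpp
#include <cmath>
#include <vector>

struct FingerPos { float x, y; };  // assumed normalised to 0..1

// Hypothetical per-synth control state.
struct GrainControl {
    double position = 0.0;  // playhead within the loop, 0..1
    double speed = 1.0;     // speed value handed to the stretcher (assumed)
    double pitch = 1.0;     // pitch ratio handed to the stretcher (assumed)

    // dt: seconds since the last update; loopLength: sample length in seconds.
    void update(const std::vector<FingerPos>& fingers, double dt, double loopLength) {
        if (fingers.empty()) {
            // No touch: reset the controls and keep looping through the sample.
            speed = 1.0;
            pitch = 1.0;
            position = std::fmod(position + dt * speed / loopLength, 1.0);
            return;
        }
        // Touch: the playhead stays put, so the current grain repeats.
        // Assumed mapping: mean x -> pitch 0.5..2, mean y -> speed 0..2.
        double mx = 0.0, my = 0.0;
        for (const auto& f : fingers) { mx += f.x; my += f.y; }
        mx /= static_cast<double>(fingers.size());
        my /= static_cast<double>(fingers.size());
        pitch = 0.5 + 1.5 * mx;
        speed = 2.0 * my;
    }
};
```

Averaging the positions keeps the mapping stable however many fingers are down; a per-finger mapping (one finger per synth, say) would be an equally valid design.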

At this stage we built a cardboard case to house the webcam and screen, which allowed us to test how we wanted people to interact with our project. Because we were using a webcam, we had to keep a precise distance between the screen and the lens, with nothing in the way. This restricted how the project could be presented. We considered several designs that tried to take advantage of this constraint, but found that many of them limited our results even more. Others, such as fitting everything inside an old television, simply felt too gimmicky. In the end we chose a simple box design, as it gave good results without distracting too much from the message we were trying to convey.

We were able to get fairly accurate X and Y positions of the fingers and calculate their centroid. Controlling the synthesiser with this data was intuitive but also rather limited, offering little improvement over simply using a mouse on a screen.

Using Wekinator, we then implemented gestures based on the centroid of the finger positions. We chose the centroid because the number of fingers a user places on the screen is not fixed, whereas Wekinator, like many other machine learning models, requires a fixed number of inputs. We used dynamic time warping to record gestures that, in theory, could be detected regardless of how fast the user performs them. In practice this was not entirely reliable, because rather than static positions we used finger velocities as inputs, which allows users to perform gestures anywhere on the input surface. The trade-off is that gestures have to be of a similar size to those the model was trained with.
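The input handling described above can be sketched in C++: averaging however many fingers are down gives a fixed-size input, differencing the centroid path gives position-independent velocities, and a textbook dynamic time warping (DTW) distance compares two velocity sequences. This illustrates the technique only and is not Wekinator's internal implementation; `Vec2`, `centroid`, `velocities` and `dtw` are our own names.

```cpp
#include <algorithm>
#include <cmath>
#include <cstddef>
#include <limits>
#include <vector>

struct Vec2 { double x, y; };

// Centroid of however many fingers are down: always two numbers, which is
// what a model with a fixed input count needs.
Vec2 centroid(const std::vector<Vec2>& fingers) {
    Vec2 c{0.0, 0.0};
    for (const auto& f : fingers) { c.x += f.x; c.y += f.y; }
    if (!fingers.empty()) {
        c.x /= static_cast<double>(fingers.size());
        c.y /= static_cast<double>(fingers.size());
    }
    return c;
}

// Frame-to-frame velocity of a centroid path (independent of where it is drawn).
std::vector<Vec2> velocities(const std::vector<Vec2>& path) {
    std::vector<Vec2> v;
    for (std::size_t i = 1; i < path.size(); ++i)
        v.push_back({path[i].x - path[i - 1].x, path[i].y - path[i - 1].y});
    return v;
}

// Classic DTW distance with Euclidean local cost between samples.
double dtw(const std::vector<Vec2>& a, const std::vector<Vec2>& b) {
    const double inf = std::numeric_limits<double>::infinity();
    const std::size_t n = a.size(), m = b.size();
    std::vector<std::vector<double>> D(n + 1, std::vector<double>(m + 1, inf));
    D[0][0] = 0.0;
    for (std::size_t i = 1; i <= n; ++i)
        for (std::size_t j = 1; j <= m; ++j) {
            double cost = std::hypot(a[i - 1].x - b[j - 1].x, a[i - 1].y - b[j - 1].y);
            D[i][j] = cost + std::min({D[i - 1][j], D[i][j - 1], D[i - 1][j - 1]});
        }
    return D[n][m];
}
```

A gesture drawn elsewhere on the surface yields identical velocities, so its DTW distance to the original is 0. A gesture drawn at half speed, however, produces smaller velocity values that warping cannot align away, so its distance is greater than 0; the same applies to a gesture drawn larger or smaller than the training examples.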

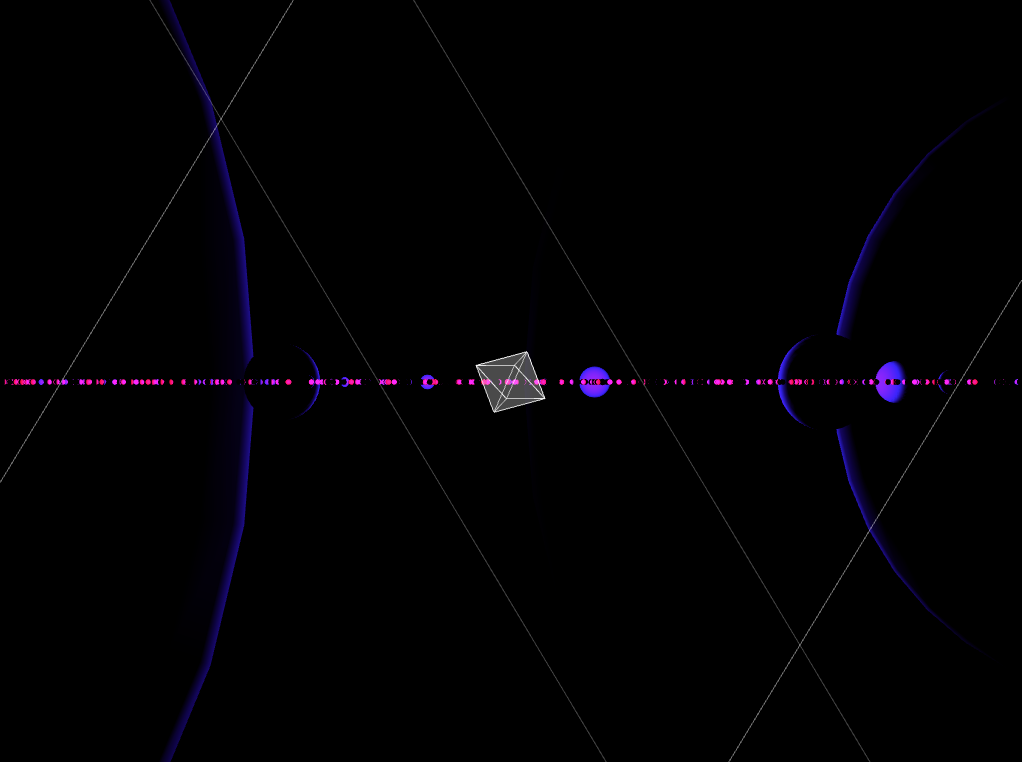

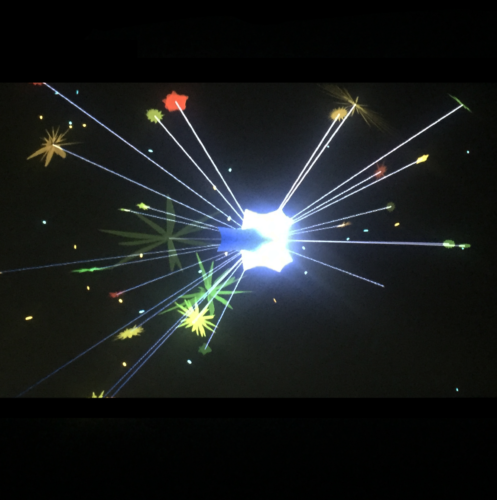

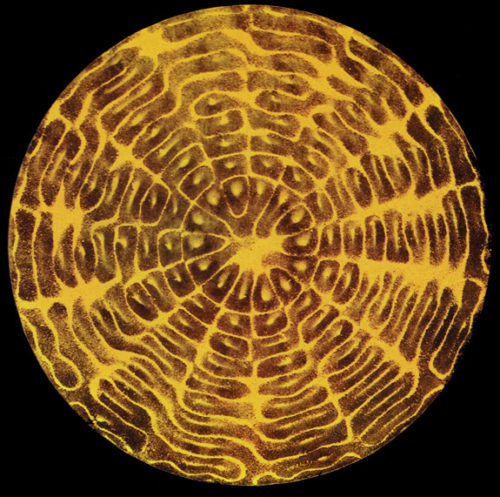

Finally, we implemented a GLSL shader to visualize the user input, which seemed a straightforward way to create interesting graphics at high speed. Each finger position is surrounded by a series of distorted concentric circles, and when multiple fingers are placed on the screen, the moiré patterns created between them are reminiscent of cymatics, where grains of sand on a vibrating surface settle into patterns. We felt this fit nicely with the theme of our project.
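The ring effect can be sketched as a per-pixel function of the kind a fragment shader evaluates, written here in C++ for consistency with the other examples rather than in GLSL. The specific formula is an assumption, not our actual shader: each finger contributes the cosine of its angle-warped distance to the pixel, and the contributions are averaged into a 0..1 brightness. `ringFreq` sets the ring spacing and `warp` the amount of distortion; both names are illustrative.

```cpp
#include <cmath>
#include <vector>

struct P2 { double x, y; };

// Brightness (0..1) at pixel px given the current finger positions.
// Each finger emits concentric rings, cos(ringFreq * r), with the radius r
// warped by the angle so the circles are distorted. Averaging the rings from
// several fingers makes them interfere, producing moiré-like bands.
double ringField(const P2& px, const std::vector<P2>& fingers,
                 double ringFreq, double warp) {
    if (fingers.empty()) return 0.0;
    double sum = 0.0;
    for (const auto& f : fingers) {
        double dx = px.x - f.x, dy = px.y - f.y;
        double r = std::sqrt(dx * dx + dy * dy);
        double angle = std::atan2(dy, dx);
        double distorted = r * (1.0 + warp * std::sin(3.0 * angle));
        sum += std::cos(ringFreq * distorted);
    }
    // Map the average from [-1, 1] to [0, 1].
    return 0.5 + 0.5 * sum / static_cast<double>(fingers.size());
}
```

On a GPU the same expression runs once per fragment with the finger positions passed in as uniforms, which is why a shader suits drawing a full-screen pattern at interactive frame rates.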

Evaluation

Overall we feel the project succeeded in its goal of creating a new way for people to play with sound, though it may not fully convey the points we were trying to get across. Regardless, we both feel we have learnt a lot from this project, both technically and in how we will approach similar projects in the future.

Both parts of the system are competent and perform well. The component software is also modular enough that either part could be reused in other projects without too much hassle.

While our finger detection works, it needs recalibrating every time the webcam is started, as external lighting conditions affect its accuracy. It is not as robust as we would have liked; however, once it is set up with the correct parameters it works well. It also does not respond as expected when a user places their hand too close to the screen, as it is designed to work only with fingertips.

The synthesiser works well, but isn't very versatile; ideally we would have built more effects. We also feel that filtering the incoming OSC data at this stage would have increased the accuracy of the controls, which in turn would have made the whole experience more intuitive. This part of the project could also have been improved by adding more intelligence to how the samples merge and interact with each other, rather than having them all act independently. From what we have learnt about Wekinator in this project, we feel this could be a more suitable use for it: manually arranging the timbres and textures that the different gestures are associated with.
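One simple way to filter the incoming OSC data would be a one-pole low-pass filter (an exponential moving average) applied to each coordinate. This is a suggestion rather than something we built; `Smoother` and the smoothing factor `alpha` are hypothetical.

```cpp
// One-pole low-pass filter (exponential moving average) for a control value.
// alpha in (0, 1]: smaller values smooth more but respond more slowly.
class Smoother {
public:
    explicit Smoother(double alpha) : alpha_(alpha) {}

    double process(double x) {
        if (!primed_) {          // first sample: start at the input, not at 0
            y_ = x;
            primed_ = true;
        } else {
            y_ += alpha_ * (x - y_);
        }
        return y_;
    }

private:
    double alpha_;
    double y_ = 0.0;
    bool primed_ = false;
};
```

With `alpha = 0.5`, a jump from 0 to 1 is followed as 0.5 and then 0.75, so single-frame jitter from the finger detection would be damped at the cost of a little lag.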

Using fingertip detection as the interface could have used further thought, as it was not as intuitive as it could have been. When testing with users, we noticed that many people tried to use their entire hand on the surface, or placed their hand too close to the screen, and had to be told explicitly how it worked. Initially we intended to have a tank of liquid sitting on top of our interface, but the project moved away from what we were trying to convey with this, and as a result our custom interface seems excessive for what we required.

Although we got Wekinator to recognise a few simple gestures correctly, we were unable to get the system to respond to this data in an expressive way, which was a direct result of our inconsistent finger detection. We also felt it wasn’t always clear what effect a user’s action would have on a sound, and we struggled to find a way to improve this while still producing interesting results.

We also planned to project the visualizer onto the surface of the box; however, as it was created so late in the project, there was no time to secure a projector to display it this way.

The final form factor of the piece was sufficient to achieve the results we wanted, but is an aspect that could have been implemented more creatively, in line with some of our initial designs.