Augmented Flamenco

by: Julius Naidu

You can download the repo here

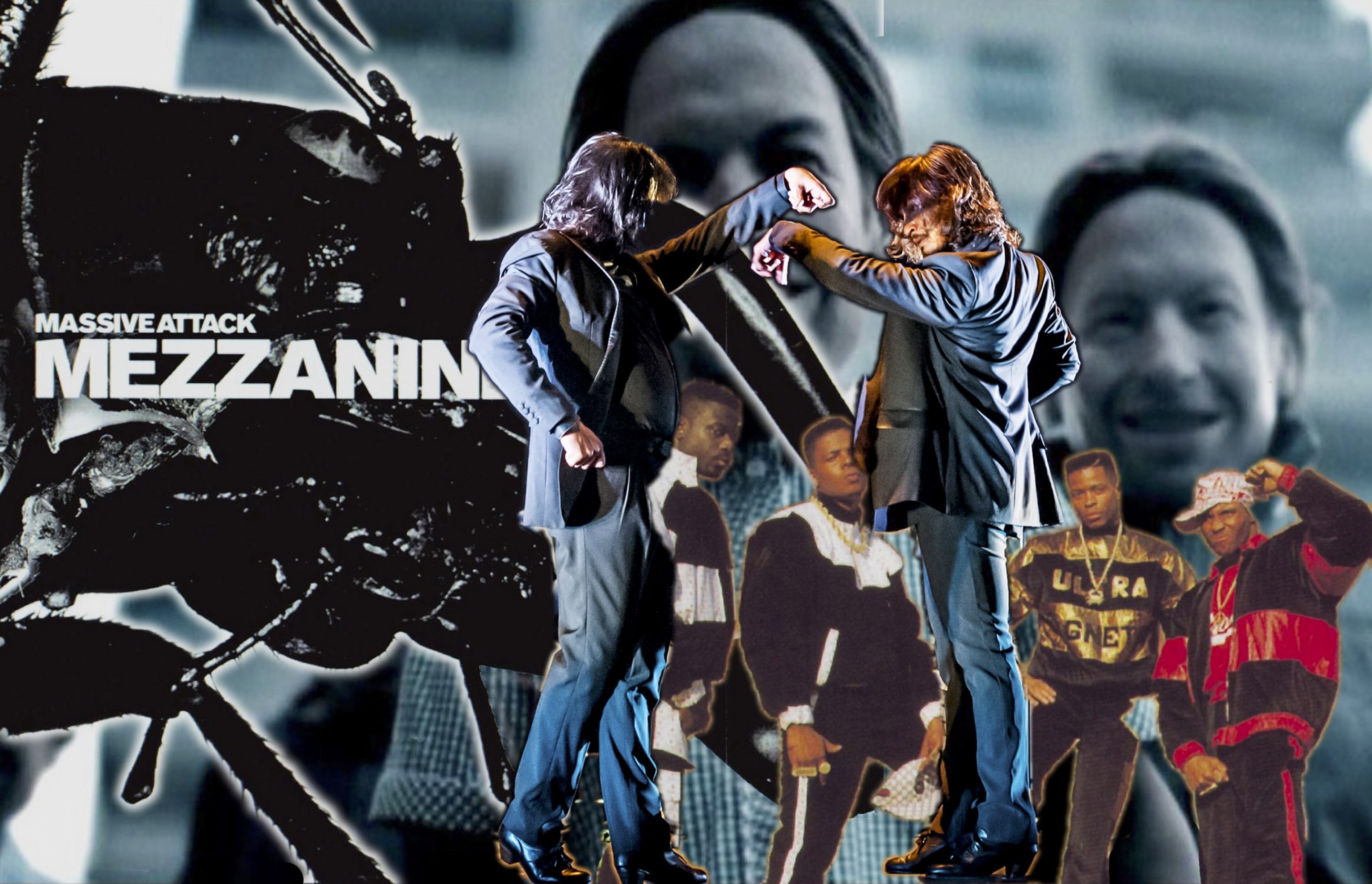

My original idea was to build a system that would augment a flamenco dancer's performance. The system would capture the percussive footwork and physical gestures, extract data from them and use that data to trigger sounds, thereby creating an augmented flamenco dance.

After months of smashing my head against an Xbox Kinect 1 I made the decision to ditch it and simplify my process by working just with the webcam on my MacBook Pro. Instead of trying to understand the Kinect and map its data to the functionality I wanted to build in openFrameworks, I could work directly with the image from the webcam. I decided its data would be somewhat simpler to handle, and that there would be more support available for working with a webcam than with the Kinect.

This, however, also changed my project rather significantly. The original idea was already proving problematic in terms of physical practicalities. For example, it proved difficult to find a rehearsal space at Goldsmiths that I could use regularly with a setup involving a wooden platform, contact and dynamic mics, an audio interface and a sound system. There was also the question of how to set up the depth camera in relation to the performance. Setting it up in front of the performer would give me a lot of functional data to work with, but the camera would then partly obscure the performer. I could perhaps put the camera to the side of the performer, but then I would be working with data from a side view, and working with a front view was already proving tricky. And now that I had decided to work with the webcam on the Mac, was I going to set up the Mac in front of the performer? Surely that would obscure the performance even more.

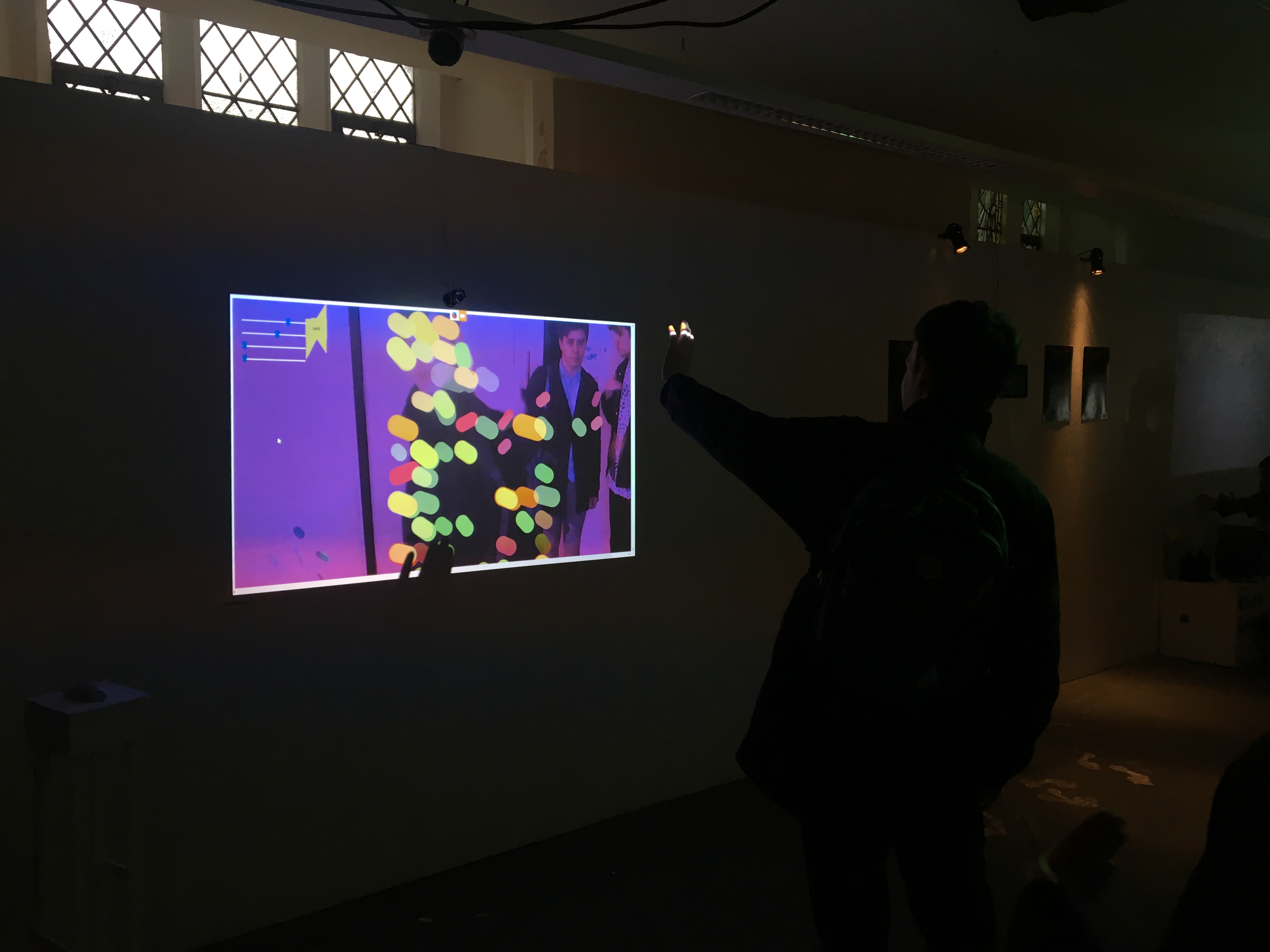

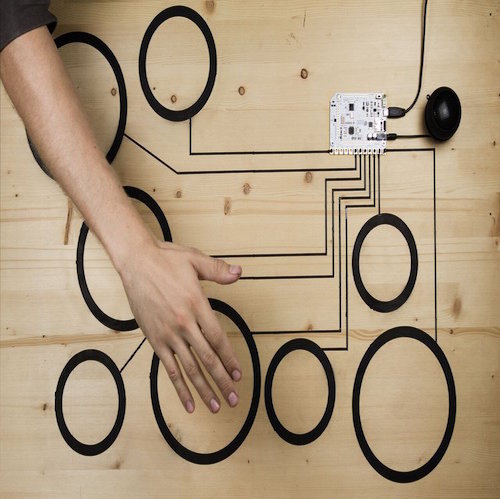

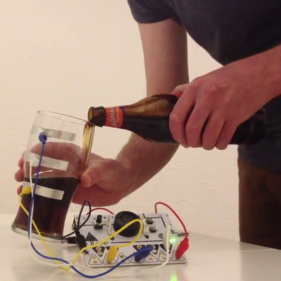

It was at this point that I turned to my music computing project, where I was using triggers on percussion. Triggers on percussion work in a similar way to the triggers that would be placed on the raised board used by the flamenco dancer. In this setup the percussionist can sit at the instruments with the laptop to one side, playing the percussive beats and modulating the beat patterns with gestural movement picked up by the webcam beside them. The photos below show the physical setup.

So with this basic idea in mind I decided to embark on the most basic incarnation of the project, using the webcam and microphone on my MacBook Pro. My program now takes audio input from the mic and lets the user modulate that live audio through computer vision, which modifies parameters in the granulator being fed by the live audio.

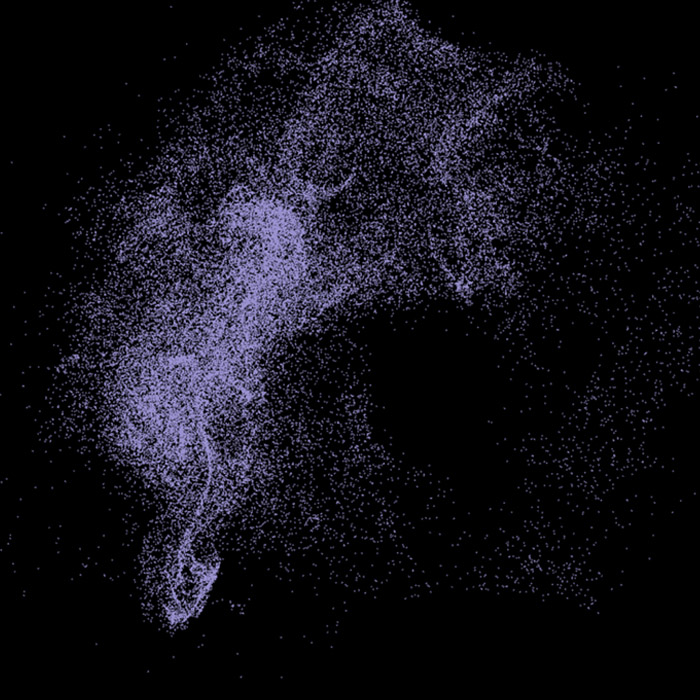

With help from Dr Simon Katan I was able to implement a one-pole filter to downsample my video input and reduce the large quantity of numbers output by cvFlow, a computer vision library from Kyle McDonald, to a handful of numbers. This data extraction then allowed me to plug those numbers into parameter controls for the Maximilian granulator. Simon also showed me how to represent my newly downsampled data as a line rotating about one of its ends. The angle generated at this point of rotation is my main point of data extraction for use with the granulator parameters and with my Max patch. I also created a class to handle parsing of the various data from the CV to the parameters of the granulator and was happy to use an ampersand reference in my link function! (big achievement).
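As a rough illustration of the idea (this is not the project's actual code), a one-pole filter can be written in a few lines of plain C++. The sketch assumes the flow field has already been averaged into a single vector `(dx, dy)`. The names `OnePole`, `lineAngle` and `angleToParam` are hypothetical, as is the linear mapping from angle to a parameter range.

```cpp
#include <cassert>
#include <cmath>

// One-pole low-pass (exponential smoothing):
//   y[n] = a * x[n] + (1 - a) * y[n-1]
// A smaller `a` smooths more heavily. Hypothetical helper, not from the repo.
struct OnePole {
    float a;          // smoothing coefficient in (0, 1]
    float y = 0.0f;   // previous output (filter state)

    explicit OnePole(float coeff) : a(coeff) {
        assert(coeff > 0.0f && coeff <= 1.0f);
    }

    float process(float x) {
        y = a * x + (1.0f - a) * y;
        return y;
    }
};

// Angle in radians of a line from its fixed end (the origin) to (dx, dy).
float lineAngle(float dx, float dy) {
    return std::atan2(dy, dx);
}

// Linearly map an angle in [-pi, pi] onto a parameter range [lo, hi].
float angleToParam(float angle, float lo, float hi) {
    const float pi = 3.14159265358979f;
    float t = (angle + pi) / (2.0f * pi);  // normalise to [0, 1]
    return lo + t * (hi - lo);
}
```

In use, one `OnePole` per axis would smooth `dx` and `dy` every frame before the angle is computed, so a jittery flow field does not make the granulator parameters jump around.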

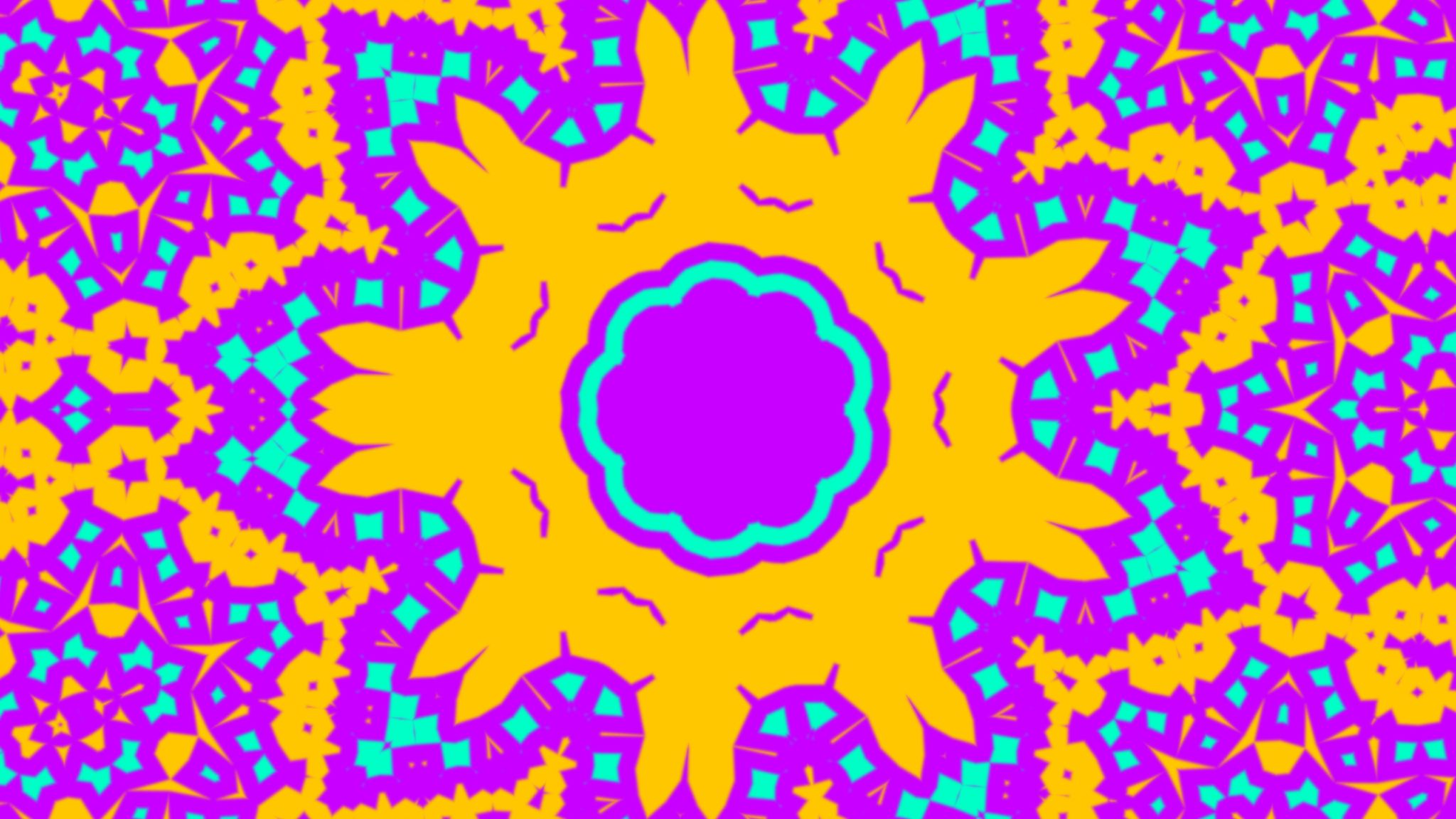

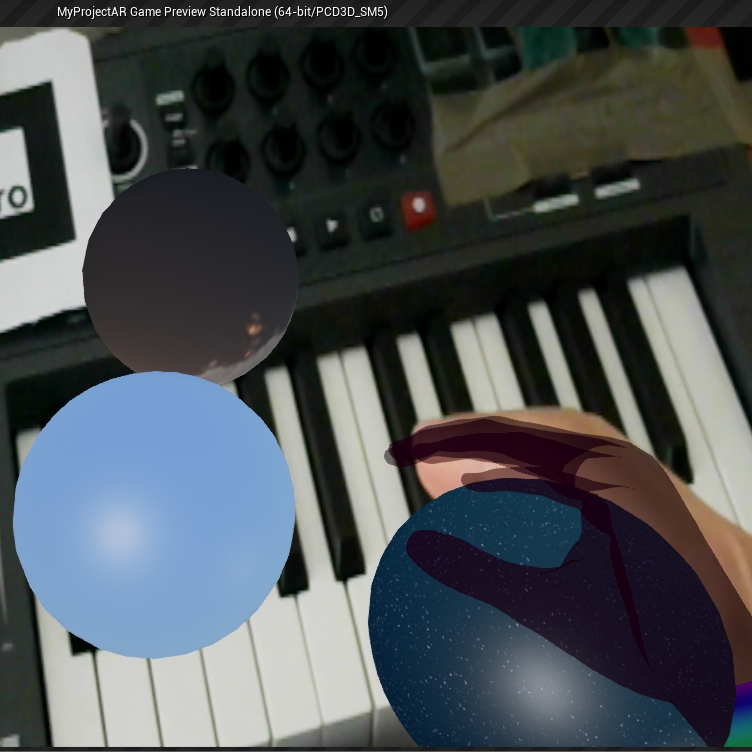

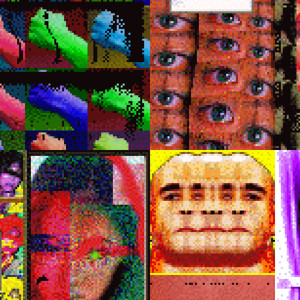

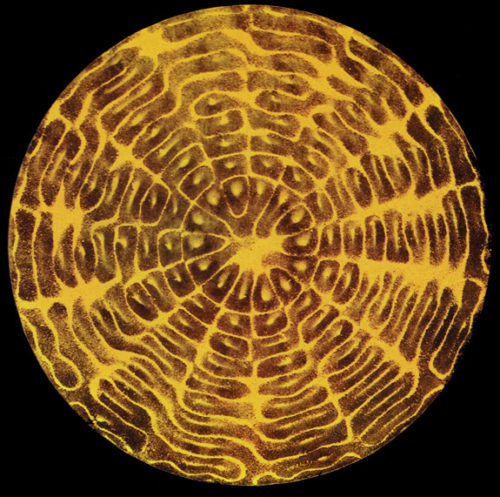

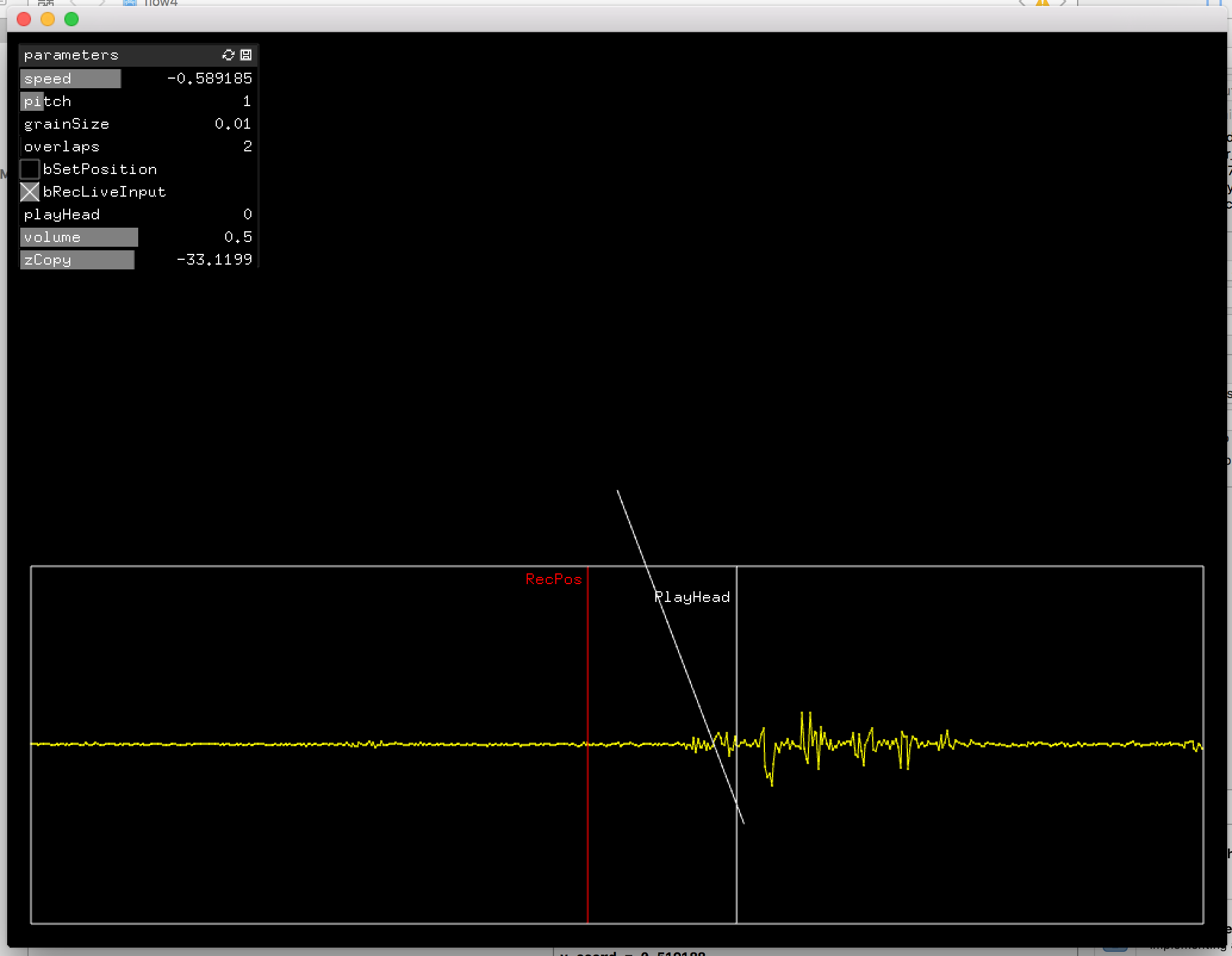

With help from fellow student Pawel Dziadur I was able to implement a version of Maximilian’s granulator by Joshua Batty which takes live audio input and loops it. This bit of code also includes a GUI for the granulation parameters and a nice visualisation of the sound wave. Now the user can record live sounds and mess around with them by moving their hands up and down in front of the webcam.

A screenshot of this is what you see below.

I would like to do more maths on the data I’m getting from the CV so that it provides more meaningful control over the granulator. My ambitions stretch as far as calculating Euclidean distances between certain points in the CV and mapping those relationships to the granulation, but at this stage that seems a bit of a dream.
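The distance idea itself is small. Here is a minimal sketch, assuming two tracked points are available as pixel coordinates. `Point2`, `euclideanDistance` and `distanceToParam` are hypothetical names, and the clamped linear mapping is just one possible choice.

```cpp
#include <cassert>
#include <cmath>

// Hypothetical tracked point in pixel coordinates.
struct Point2 {
    float x;
    float y;
};

// Straight-line (Euclidean) distance between two points.
float euclideanDistance(const Point2& p, const Point2& q) {
    return std::hypot(q.x - p.x, q.y - p.y);
}

// Map a distance in [0, maxDist] onto a parameter range [lo, hi],
// clamping anything outside that range.
float distanceToParam(float d, float maxDist, float lo, float hi) {
    assert(maxDist > 0.0f);
    float t = d / maxDist;
    if (t < 0.0f) t = 0.0f;
    if (t > 1.0f) t = 1.0f;
    return lo + t * (hi - lo);
}
```

For example, the distance between two hands could drive one granulation parameter, with `maxDist` set to the width of the camera image.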

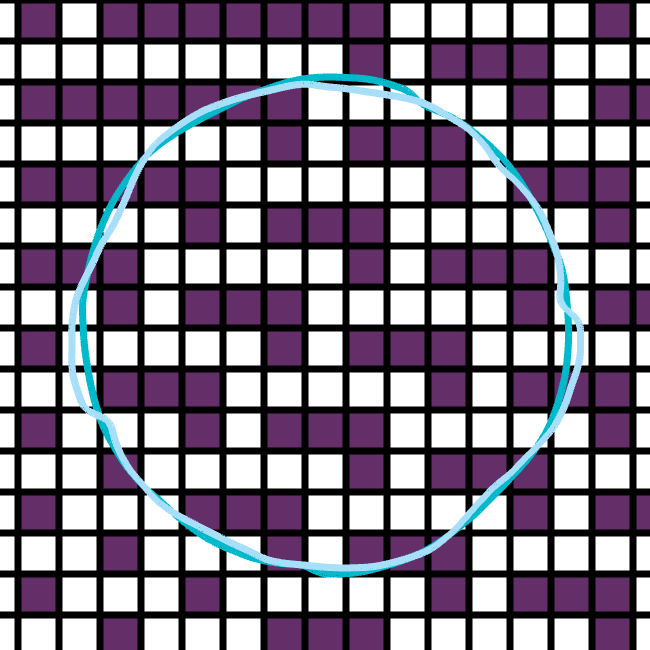

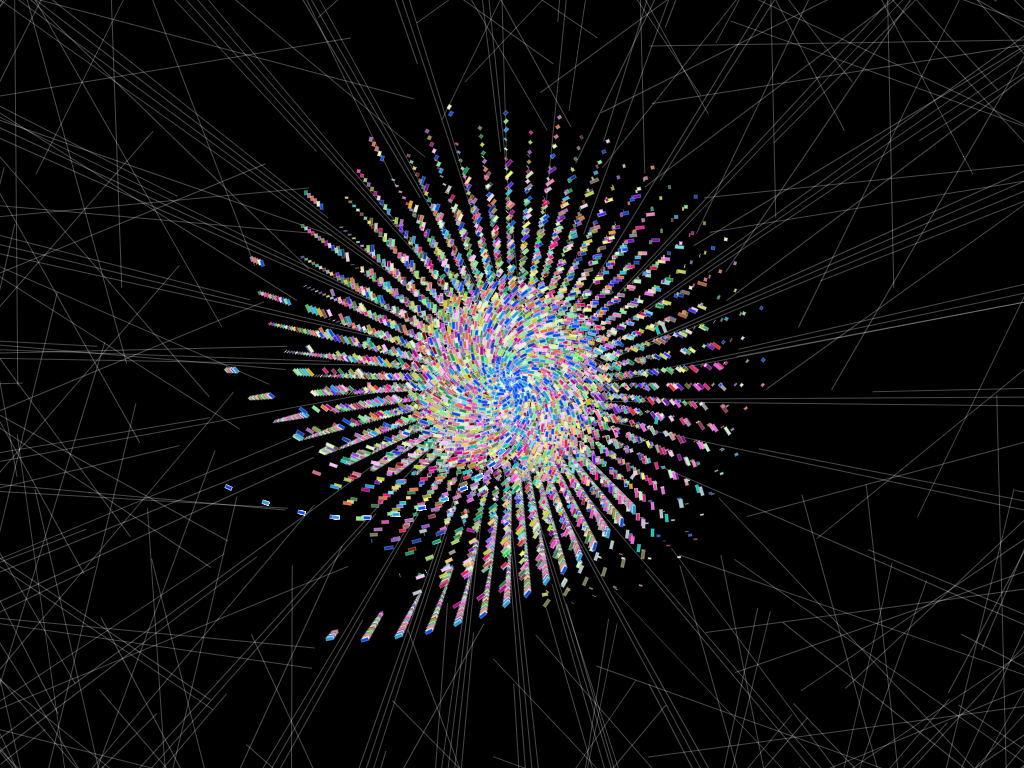

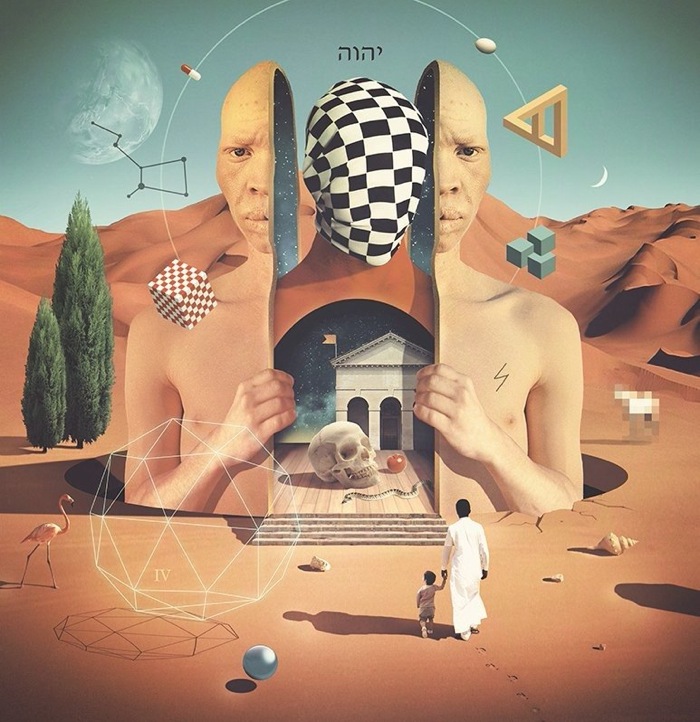

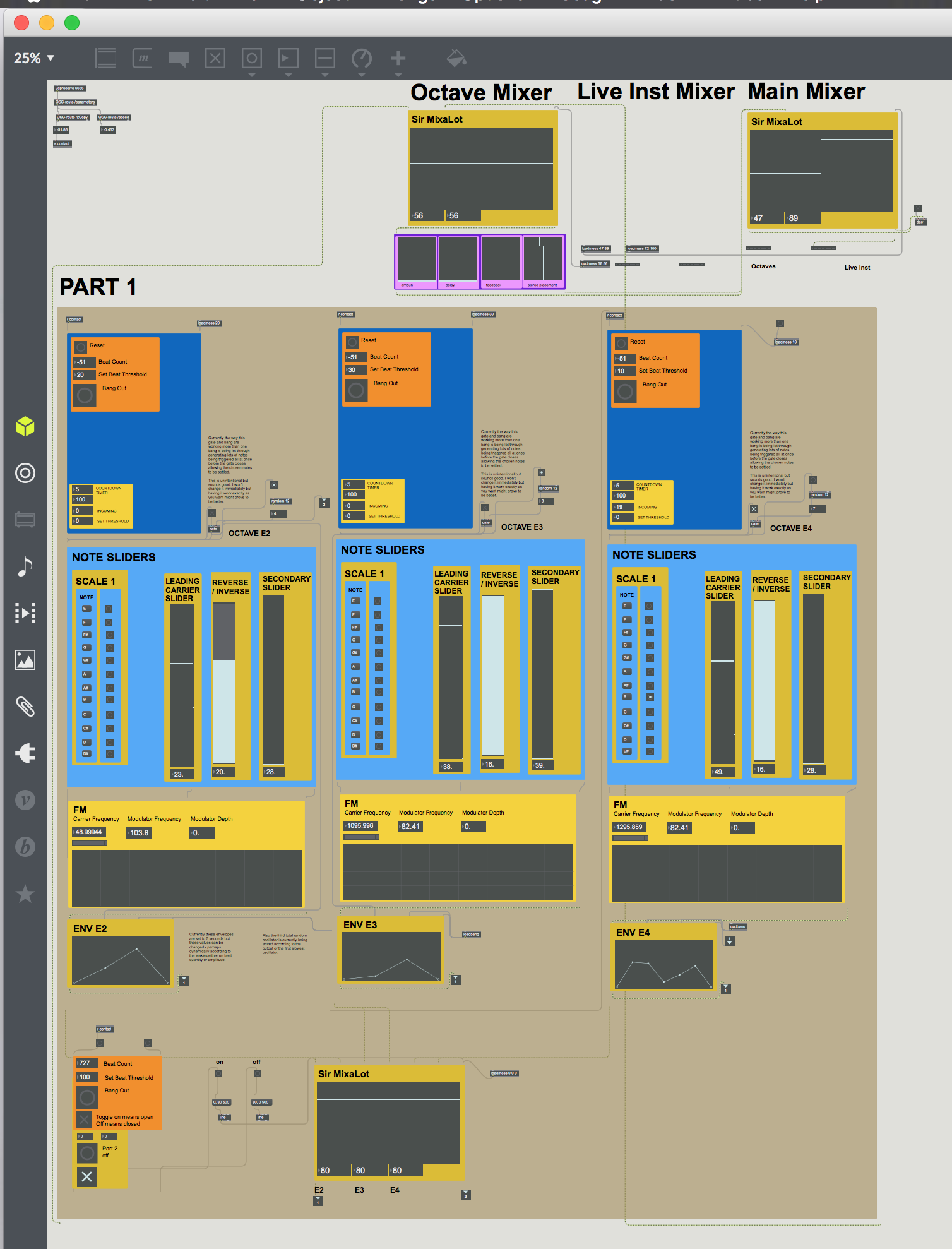

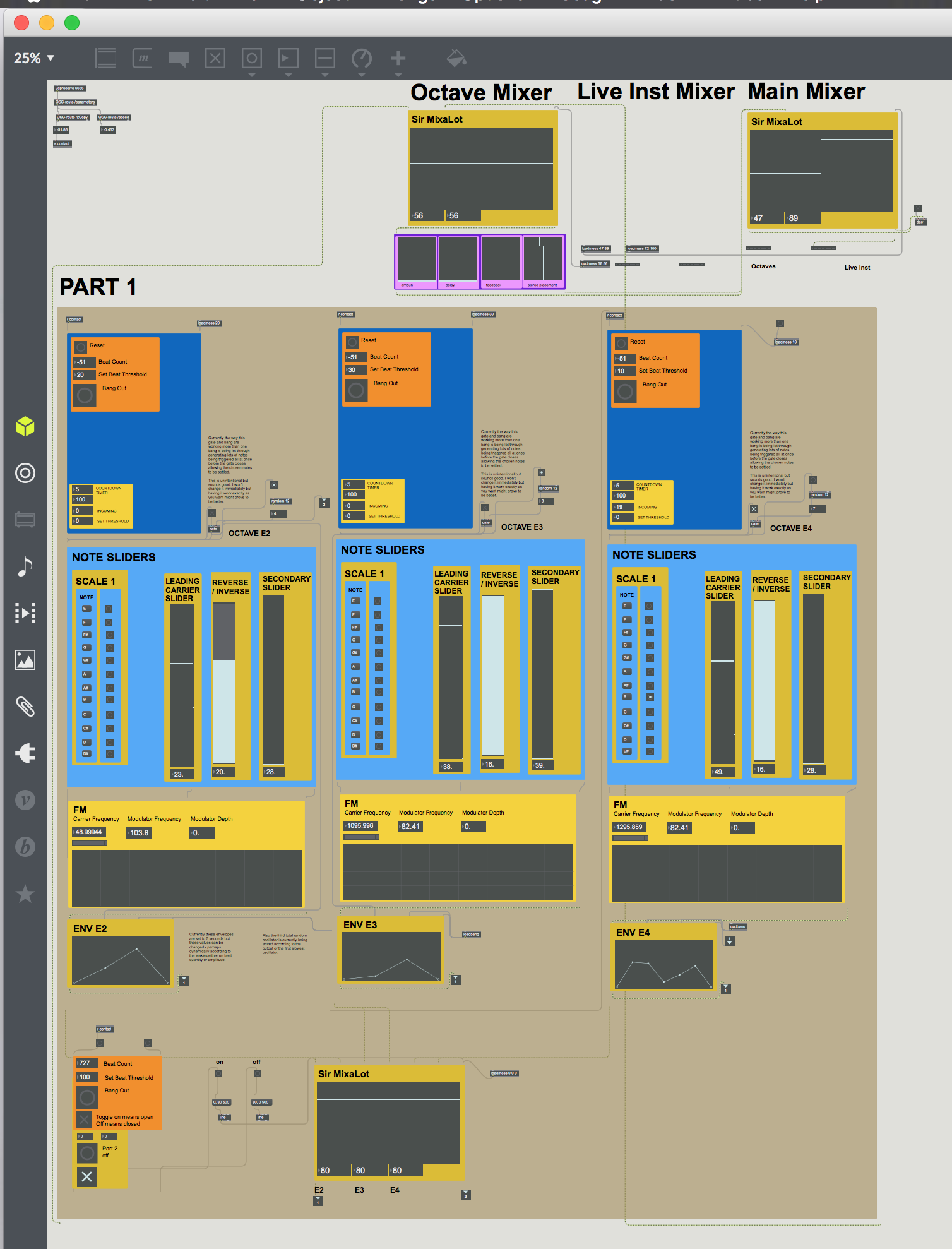

I am also sending OSC messages from the CV in openFrameworks to a Max patch I built for a music computing project, which produces generative sounds using three oscillators. In this way I have live sounds being granulated and modulated directly in openFrameworks, with accompanying sounds generated in the Max patch, which can be seen below.

The Max patch generates sound using FM synthesis, with harmonic relationships between the carrier frequencies, modulating frequencies and modulator amplitudes. The oscillators are built to play all twelve notes in the octaves E2, E3 and E4 on the well-tempered scale. The idea was to play around with counterpoint and dissonance. With the weird sounds being generated by Maxim’s granulator and the dissonant sounds from Max, the final effect is a weird, disturbing, slightly haunting and nightmarish soundscape.
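The patch itself lives in Max, but the underlying arithmetic can be sketched in C++. This sketch assumes twelve-tone equal temperament with A4 = 440 Hz, and a modulator that is a fixed multiple of the carrier. The function names and the `ratio` and `index` parameters are hypothetical and not taken from the patch.

```cpp
#include <cassert>
#include <cmath>

// Frequency of the note `semitones` above E2, assuming 12-tone equal
// temperament with A4 = 440 Hz (E2 is MIDI note 40, A4 is MIDI note 69).
double noteFrequency(int semitones) {
    const int e2Midi = 40;
    const int a4Midi = 69;
    return 440.0 * std::pow(2.0, (e2Midi + semitones - a4Midi) / 12.0);
}

// One sample of simple two-operator FM at time t (seconds):
//   sin(2*pi*fc*t + index * sin(2*pi*fm*t)),  with fm = ratio * fc.
// An integer `ratio` keeps the modulator harmonically related to the carrier.
double fmSample(double carrierHz, double ratio, double index, double t) {
    assert(carrierHz > 0.0);
    const double twoPi = 6.283185307179586;
    double modulator = index * std::sin(twoPi * ratio * carrierHz * t);
    return std::sin(twoPi * carrierHz * t + modulator);
}
```

Here `noteFrequency(0)`, `noteFrequency(12)` and `noteFrequency(24)` give E2, E3 and E4, and the values in between cover the twelve notes of each octave.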

The oscillators were originally built with some beat detection for use with percussion, with sounds and events only being triggered when certain thresholds are met. I removed the beat detection for the current state of the project, which only uses audio input from the mic. The Max patch also makes strong use of Dr Freida Abtan’s leaky accumulator, which provides a nifty method for thresholding quantities of beats or, in this case, higher integer values for triggering events.
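For readers unfamiliar with the term, here is a generic leaky accumulator sketched in C++. It is not Dr Abtan's Max implementation, whose details are not given here. The sketch assumes the stored total decays by a fixed factor each step and resets when it triggers, and the names `LeakyAccumulator`, `leak` and `threshold` are hypothetical.

```cpp
#include <cassert>

// Generic leaky accumulator: on every step the running total "leaks"
// (is scaled down by `leak`) and then the new input is added. When the
// total reaches `threshold`, an event fires and the total resets to zero.
// The reset-on-trigger behaviour is an assumption made for this sketch.
struct LeakyAccumulator {
    float leak;          // fraction of the total kept per step, in [0, 1)
    float threshold;     // level at which an event is triggered
    float total = 0.0f;  // running, decaying sum

    LeakyAccumulator(float leakFactor, float thresh)
        : leak(leakFactor), threshold(thresh) {
        assert(leakFactor >= 0.0f && leakFactor < 1.0f);
    }

    // Feed one input value; returns true if an event should fire.
    bool step(float input) {
        total = total * leak + input;
        if (total >= threshold) {
            total = 0.0f;
            return true;
        }
        return false;
    }
};
```

Because the total decays, sparse inputs never trigger an event, while a dense burst of beats or a run of high values pushes it over the threshold.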

In terms of intended audience, the original concept was a live performed piece which makes use of what is essentially a tool / system. What I have ended up with is a tool but not a finished performed piece. It is some sort of instrument for augmented movement and sound. The next step would be to translate this basic setup into a larger one, replacing webcam input with a good depth camera and replacing the Mac’s mic input with contact mics and microphones for greater control over the live audio input. I would also like to build better functionality in the programming, as mentioned before, with the aim of creating some sort of ‘software web’ which captures the movements of the dancer as well as the percussive footwork and sends the data to event triggers.

Reflecting on how this project has gone, and moving forward with my programming, I know that my grasp of C++ needs to improve. It is a robust programming language with many applications, such as robotics, and I like it. In terms of this project, it is my lack of knowledge of C++ that has hindered me. I feel that if I knew my way around the language better I would be focusing less on whether I am creating a function in the right place, or whether I should be using inheritance or pointers, and instead just concentrating on the maths and techniques necessary for creating meaningful data extraction and design.

It has also been great practice getting to grips with Git. I had tried it a little before, but this project has taught me to use it more, and it is a great tool that allows for a more flexible programming practice.