Augmenting Flamenco

by: Ralph Allan

Introduction

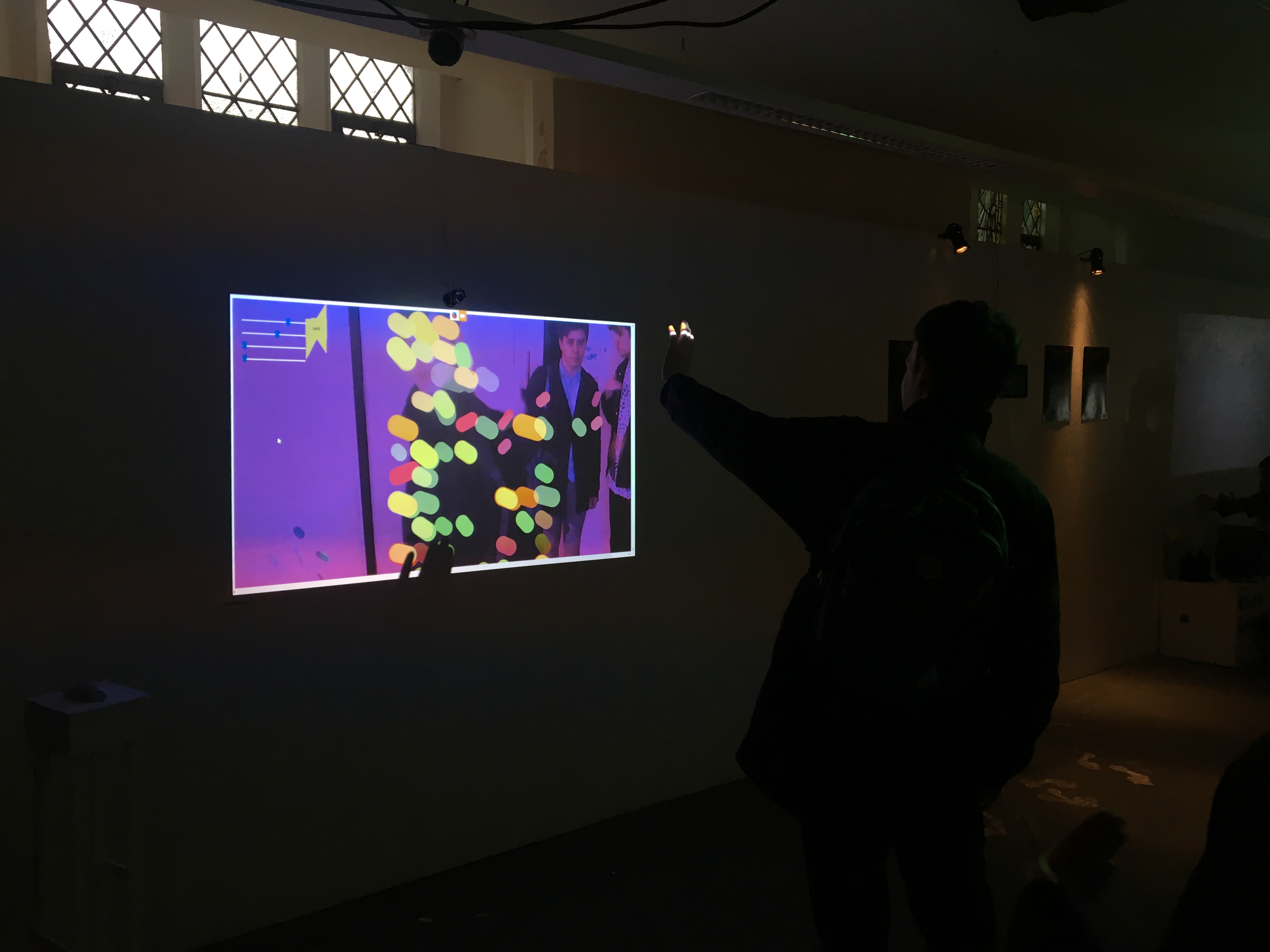

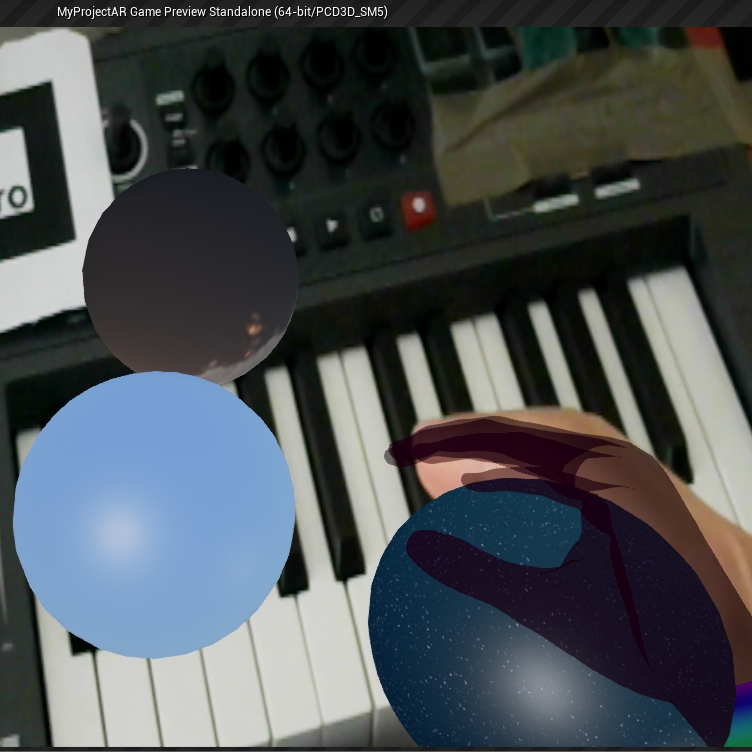

In collaboration with Julius Naidu, I hope to develop a piece of video software for use in an augmented dance piece. The software will take both audio (generated by Julius) and motion tracking data (from a Kinect sensor) as input; its output will be an animation reactive to both. This animation will be projected onto a sharkstooth gauze scrim, behind which Julius will dance flamenco.

This report will consist of a brief survey of projects pertinent to ours, an explanation of their influence on our decisions at this stage, and a more detailed description of the planned projected visuals.

Augmented Dance

Fidelity, choreographed by Natalia Brownlie, with sound by Miguel Neto, and visuals by Rodrigo Carvalho

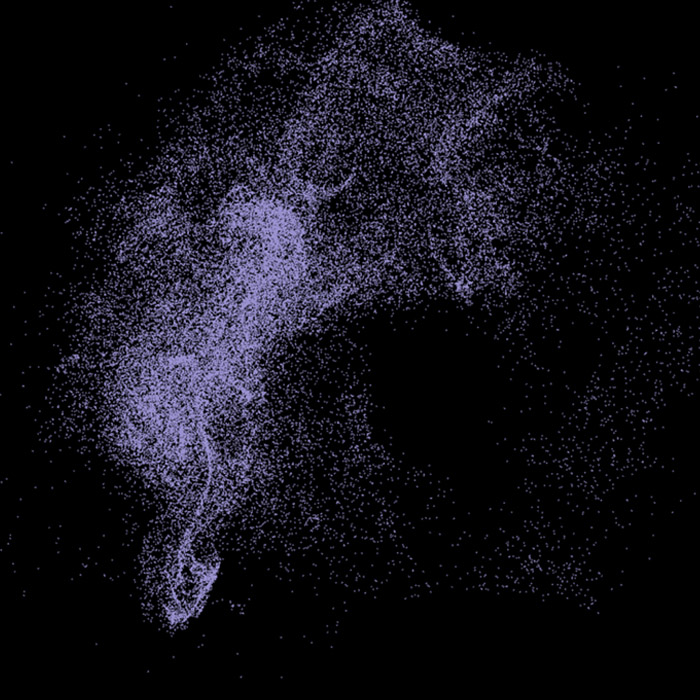

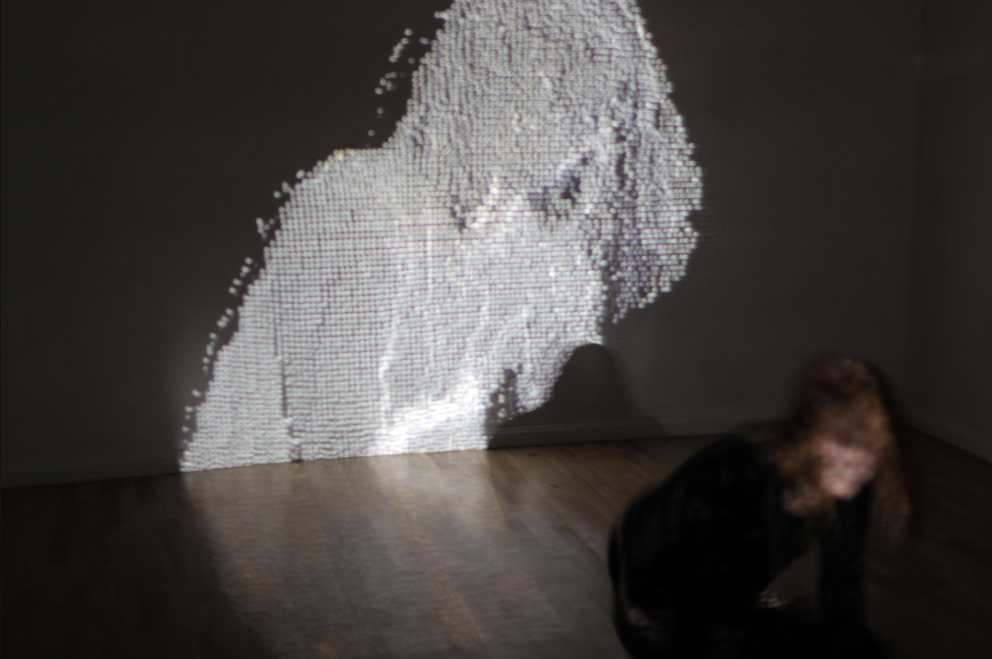

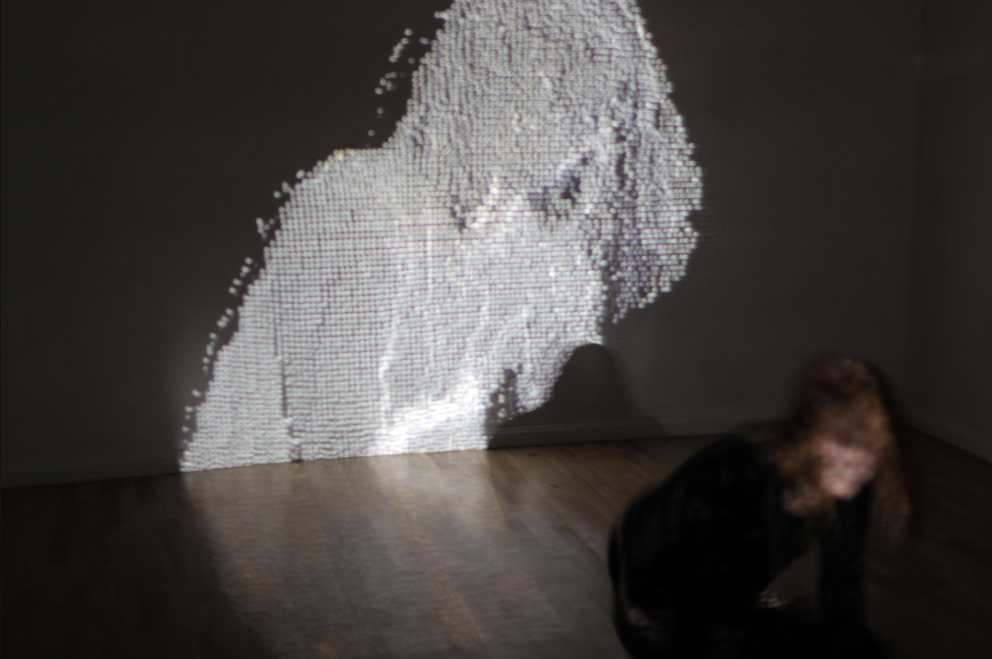

Fidelity is an interactive dance/video project in which a dancer’s movements are captured and used to create a live 3D video. Certain aspects of the video exaggerate a specific ‘dramatic’ gesture made by the dancer: the points of a projected point-cloud silhouette of the dancer disperse and reassemble (see 07:50).

This augmentation of gesture is something we will explore in our project, as described under Projections.

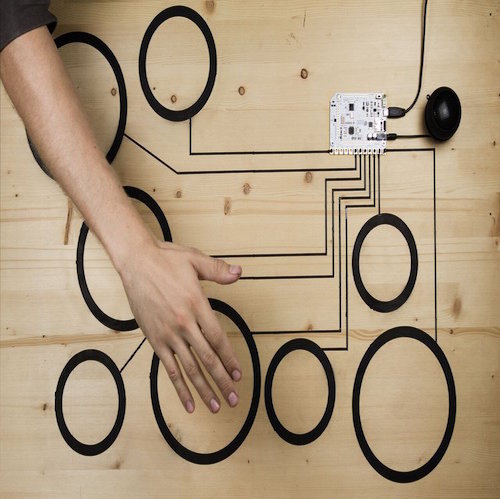

strands is an interactive installation by ecco screen in which an array of lines is projected onto fabric. The behaviour and physics of each strand change of “its own accord”, while all strands respond to gestural interaction. This combination inevitably leads to a degree of unpredictability in the strands’ response to gesture, lending a “lifelike” quality to the piece.

The visual elements of our project will be responsive to both generated sound and tracked motion. This combination will hopefully give the generated visuals a lifelike quality. The difference here, though, will be that none of the visual components of our piece will change of its own accord; they will change only in proportionate response to changes in sound and motion.

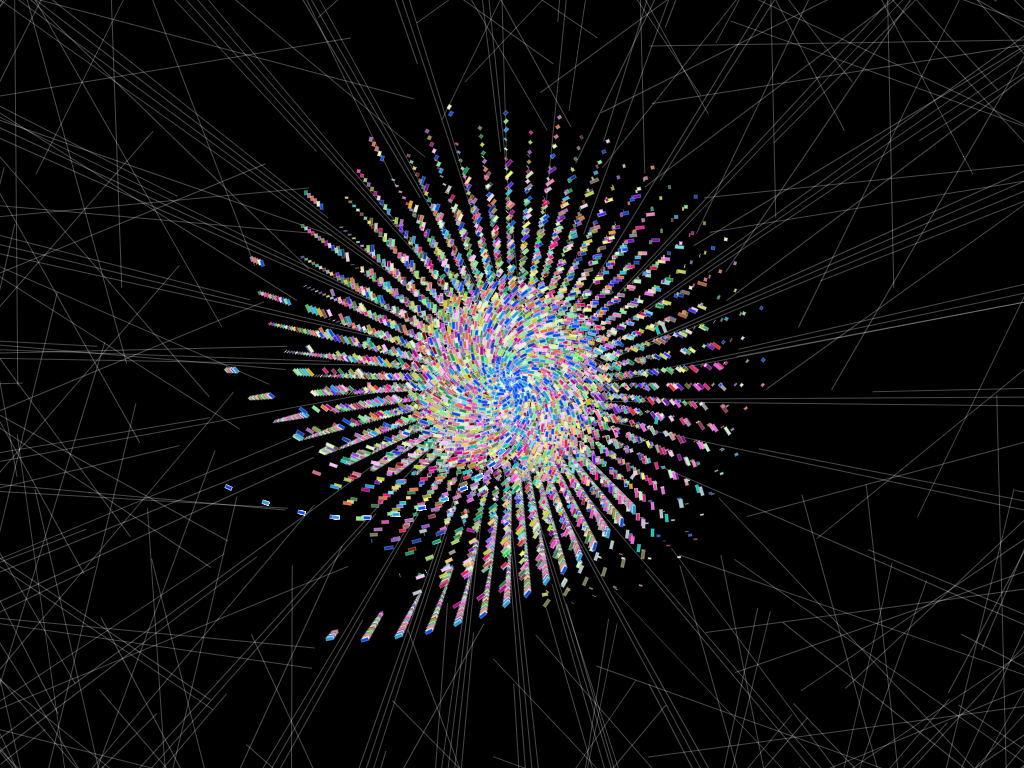

2047, part of the Interactive Swarm Space project, choreographed by Pablo Ventura, with visuals by Daniel Bisig

The dancer in this piece controls an “interactive swarm simulation … via her posture and movements.” The swarm visuals are projected onto the rear wall of a room, in front of which the dancer performs. As in Fidelity, the dancer’s shadow is cast onto the wall as a result of the positioning of the projector.

In 2047, the dancer controls the swarm simulation in such a way that its movements appear as an extension of hers. This idea, along with the silhouetting here and in Fidelity, sparked a more concrete idea for the visual aspect of our project.

Projections

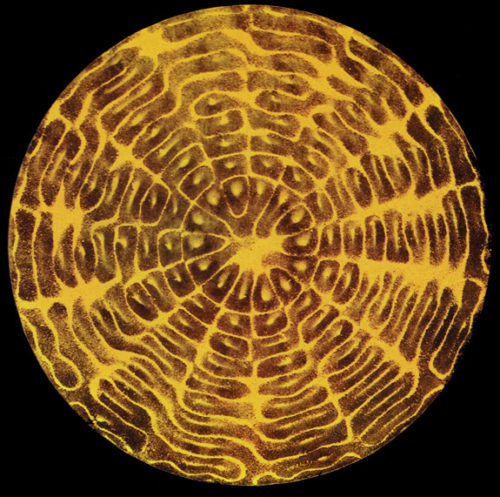

Based on these projects, I have had some initial ideas for my part of our project. Both Julius and I will use motion tracking data (from a Kinect sensor) and live audio (from a mic picking up the sound of Julius’ steps as he dances). Alongside these sources, I will use the audio generated by Julius (as his part of the project) to recreate an image of him dancing. This image will be an outline of the dancer, made up of small bands, each representing a frequency band of the generated audio. These bands will themselves be ‘dancing’, as they will be a real-time spectral representation of the audio. The outline will respond proportionately to the dancer’s gestures and to his steps: every ‘big’ gesture or foot-stomp will enlarge the form suggested by the vague outline. As the dancer limits his movements, so the limits of the outline will recede.
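As a rough illustration of this idea, the following Python sketch shows one way the mapping might work. It is only a sketch under several assumptions that are not settled parts of the project: the function names `band_magnitudes` and `outline_points`, the `gain` and `band_depth` parameters, and the single `motion_energy` value (imagined here as a number between 0 and 1 derived from Kinect joint movement and stomp loudness) are all hypothetical. A circle stands in for the dancer's silhouette, and a naive DFT stands in for whatever FFT routine the final software would use.

```python
import cmath
import math


def band_magnitudes(frame, n_bands):
    """Average the magnitude spectrum of one audio frame into n_bands bands."""
    n = len(frame)
    half = n // 2
    # Naive DFT for clarity only; a real-time version would use an FFT library.
    mags = []
    for k in range(1, half + 1):
        acc = sum(frame[t] * cmath.exp(-2j * math.pi * k * t / n) for t in range(n))
        mags.append(abs(acc) / n)
    size = half // n_bands  # number of DFT bins per band (assumes half >= n_bands)
    return [sum(mags[i * size:(i + 1) * size]) / size for i in range(n_bands)]


def outline_points(base_radius, bands, motion_energy, gain=0.5, band_depth=0.2):
    """Place one point per frequency band around a placeholder circular outline.

    motion_energy (hypothetical, 0..1) scales the whole outline proportionately;
    each band's magnitude pushes its own point outwards, so the outline 'dances'.
    """
    scale = 1.0 + gain * motion_energy
    points = []
    for i, mag in enumerate(bands):
        angle = 2 * math.pi * i / len(bands)
        radius = base_radius * scale * (1.0 + band_depth * mag)
        points.append((radius * math.cos(angle), radius * math.sin(angle)))
    return points


# Example: a test tone stands in for Julius' generated audio.
frame = [math.sin(2 * math.pi * 5 * t / 64) for t in range(64)]
bands = band_magnitudes(frame, 8)
still = outline_points(1.0, bands, motion_energy=0.0)  # dancer barely moving
stomp = outline_points(1.0, bands, motion_energy=1.0)  # 'big' gesture or stomp
```

Because both functions are stateless, the outline changes only when the audio frame or the motion value changes, which matches the intention that nothing in the visuals moves of its own accord.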

We hope to project this dancing figure onto a sharkstooth gauze scrim (as per Simon Katan’s recommendation). Throughout the performance we will adjust the lighting to alternately reveal and obscure the actual dancing figure behind the scrim.

References

[1] Fidelity

[2] strands

[3] 2047