A.M.I (Another Memory Interrupted)

By Joe McAlister

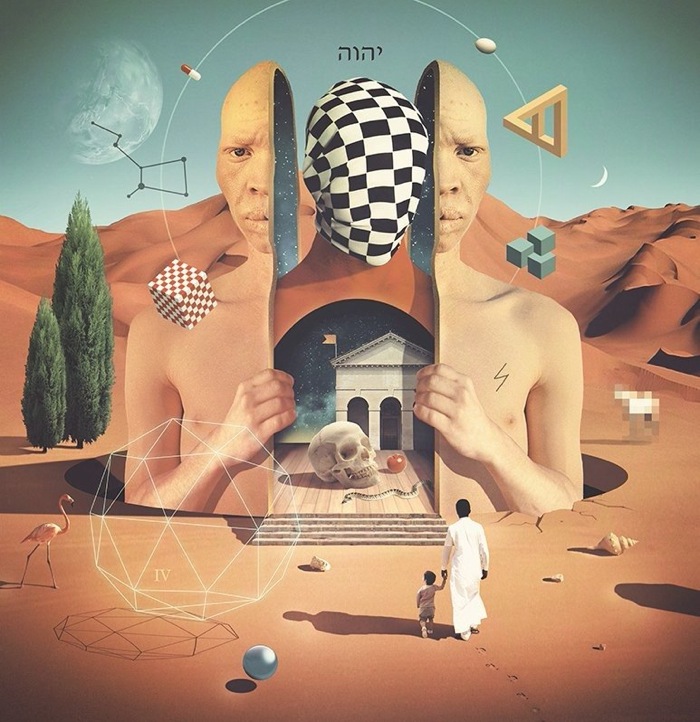

A.M.I was a piece that unexpectedly changed direction mid-creation. Built as a direct response to "The Watsons"1, it continues an unwritten narrative that documents a very unusual part of my life. It explores the theme of self-representation, and how we often misrepresent ourselves to each other through social media, creating a faux-self, one free from imperfections and insecurities. It's only when we look at these personas at face value that we begin to notice how we subconsciously hide things from ourselves, whether it's ignoring a specific part of life, living a complete "pseudo life" or just hiding emotions.

A.M.I is an exploration of this: a physical manifestation of the overwhelming emotions that often bleed into reality and take hold of our lives. Encaged in the box, her brains desperately try to process memories, categorising them, probing them scientifically, desperately trying to determine the best course of action for the smallest of things. They try to take a rational "computerist" view of every situation, which just produces an overwhelming and almost clinical feeling, often detaching the user from their own emotions and allowing them to view themselves in the third person, almost as if they were starring in a reality TV show about themselves.

It's also a much more personal piece. It details a period of my life that postdates The Watsons, a difficult time when I struggled to understand several elements of my life. This is what I wanted to get across: to let the user feel how I felt, in an almost self-therapeutic move to help myself understand my own emotions a little. The Watsons was a direct comment on how it felt finding a group of friends, a family: people who looked after me and would help me through every step of my life. A.M.I is a representation of the darker side of this, the paranoia and worrying that directly follows.

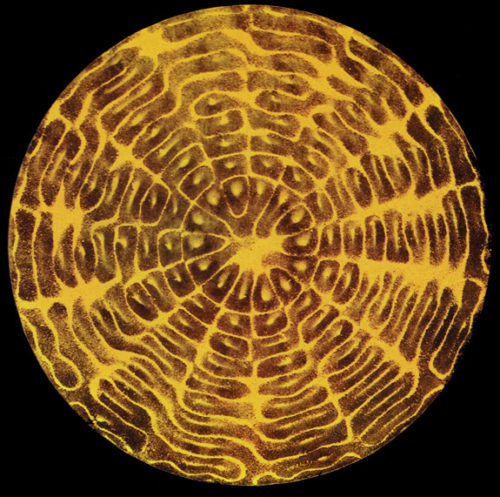

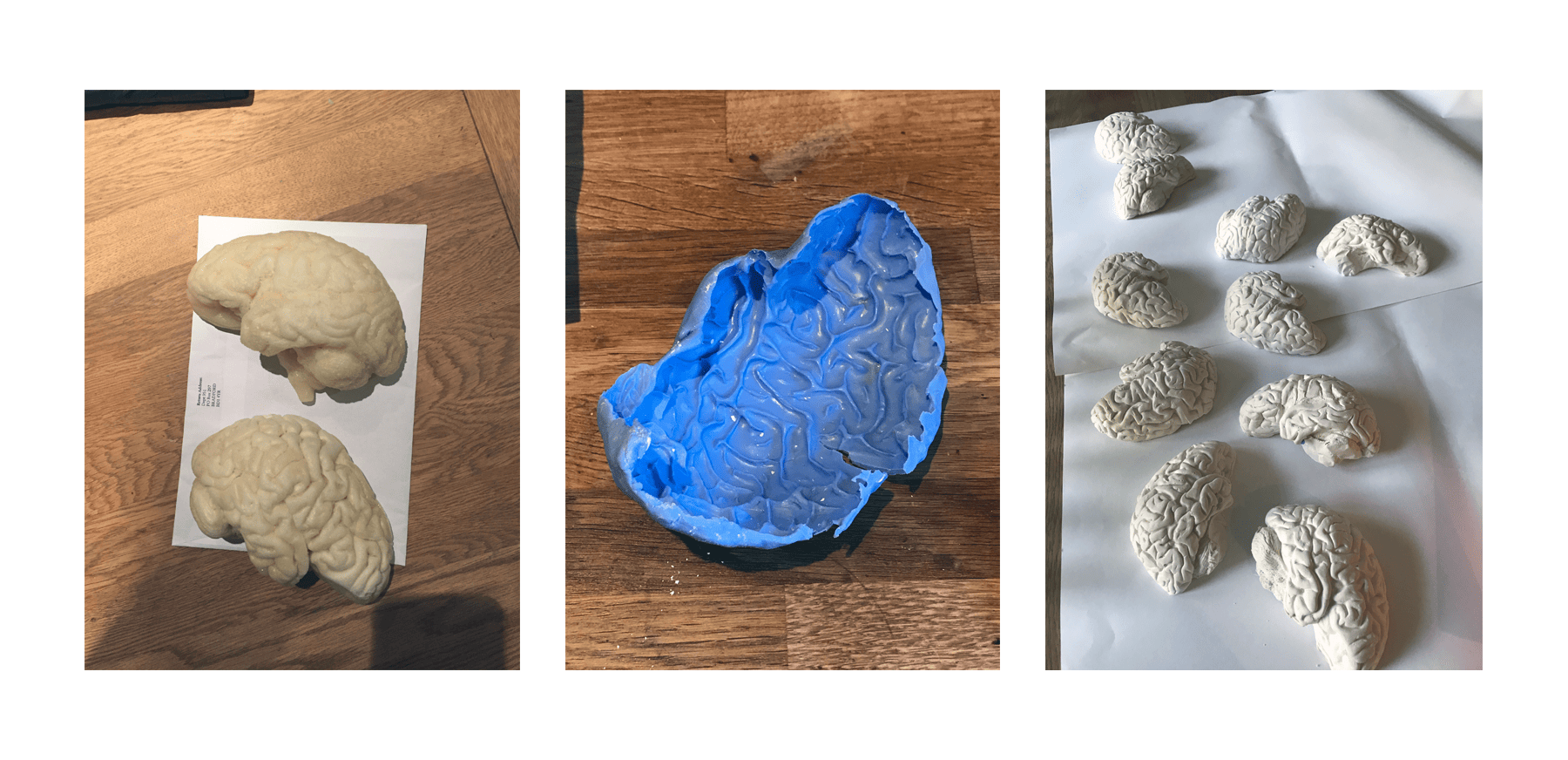

Creating the physical form

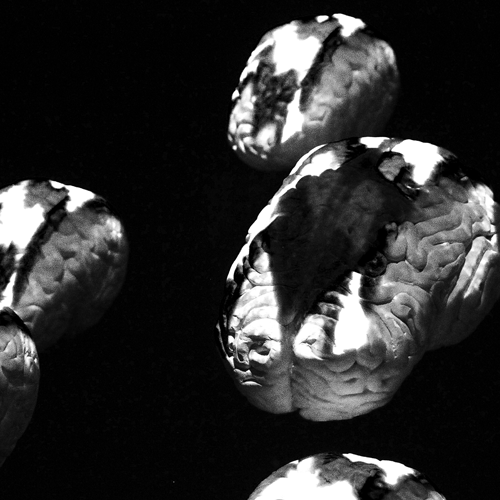

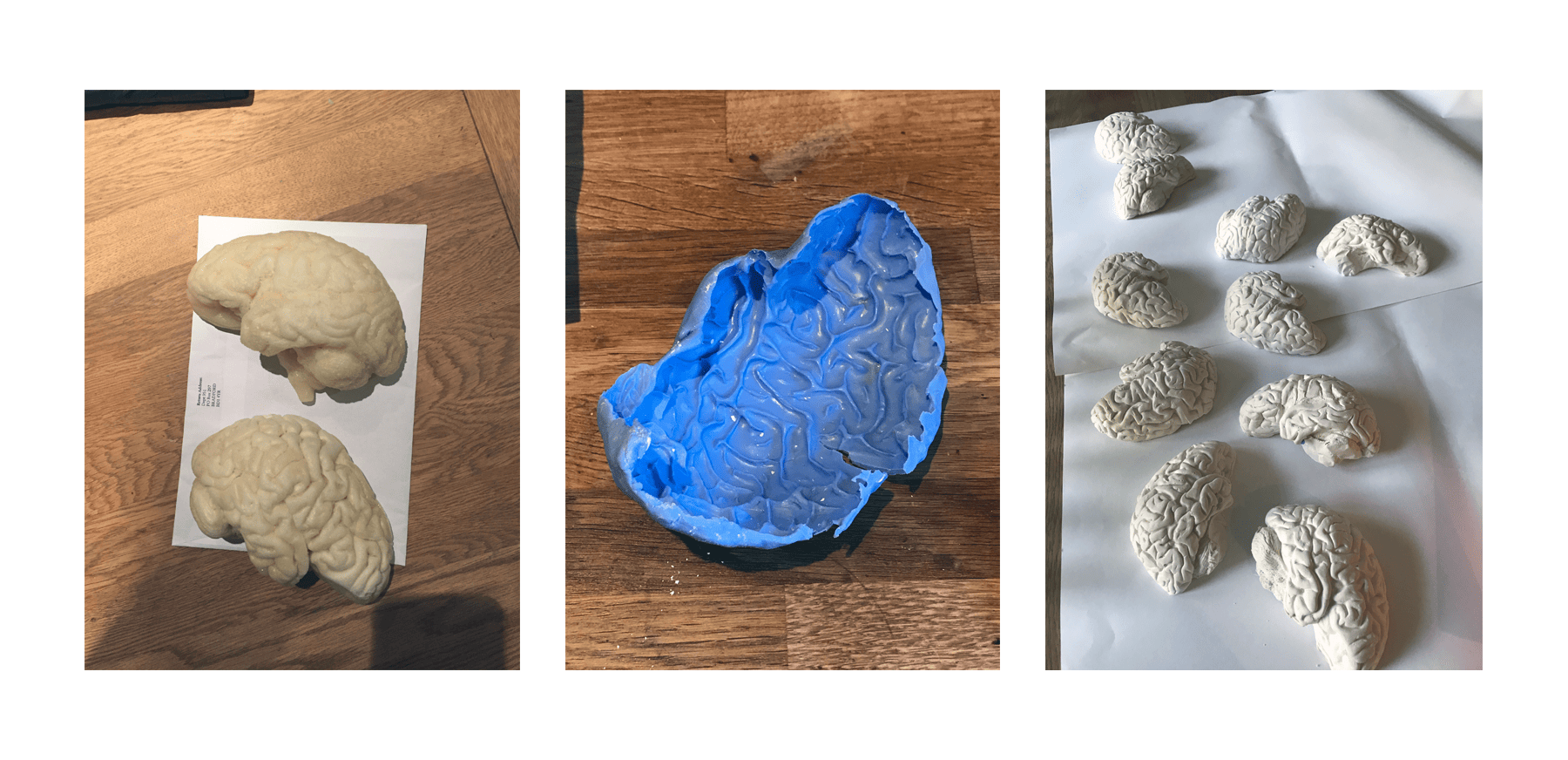

I wanted to find a form that would help illustrate and describe the overwhelming thinking process. It made sense to use a body part, specifically the brain. After deciding on the brain form I knew that projection mapping, something I have used in the past, was the most appropriate technique. It would allow me to animate and visually dictate a process to the user. This led to a basic plan for a rig in which the projector was suspended from the ceiling, allowing for downwards projection onto the brains.

Knowing that creating the brains was likely to be difficult, I began experimenting with materials in February, first trying to create brains out of expanding foam to minimise the weight as much as possible. While this was somewhat successful, it often resulted in porous and unpredictable textures, none of which are suitable for projection mapping. That is when I adapted the plans to instead support the brains from the ground on poles, allowing for much heavier pieces. This worked, allowing me to create the brains in plaster by first sculpting a clay model, making a mould from it and then casting each brain in plaster. Another bonus of plaster was the ability to carve into the brains afterwards, finishing each by hand to ensure a smooth finish. It was important, however, for each to be subtly different. I wanted the piece to feel organic, as if all the brains were from individual people, so some have a different slant or even shape. While beautiful, this made the mapping process somewhat harder as there was no uniformity.

Above (left to right): A first prototype expanding-foam brain; the silicone mould created from the clay sculpt, used to cast the plaster brains; finished plaster casts.

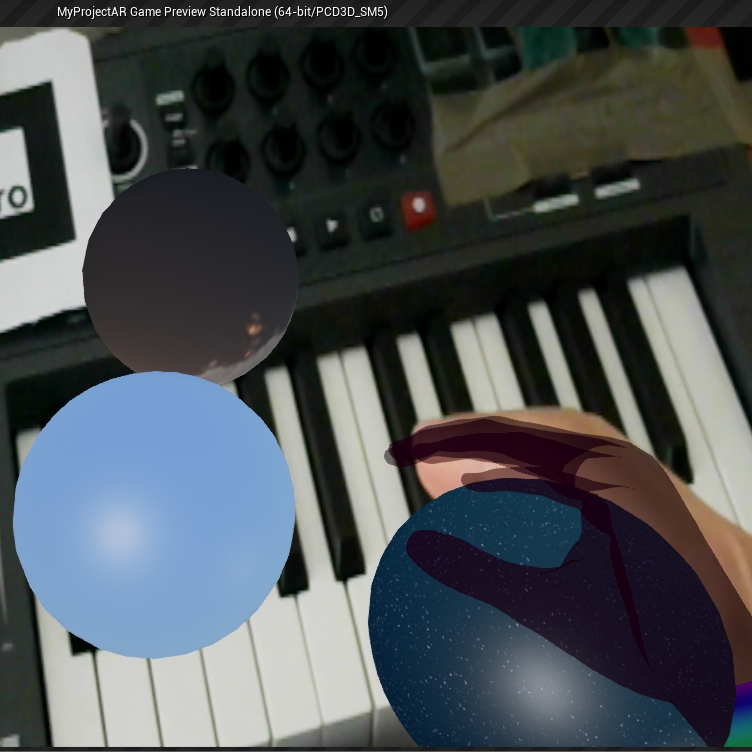

Creating the digital form

Creating the technical side of A.M.I was a challenge. Many limitations and subsequent ambitions required me to find multiple creative solutions that would allow the piece to be as close to my initial vision as possible. To make it easier to explain I've broken the system down into three categories: the front-end mapping system, interaction and the script builder.

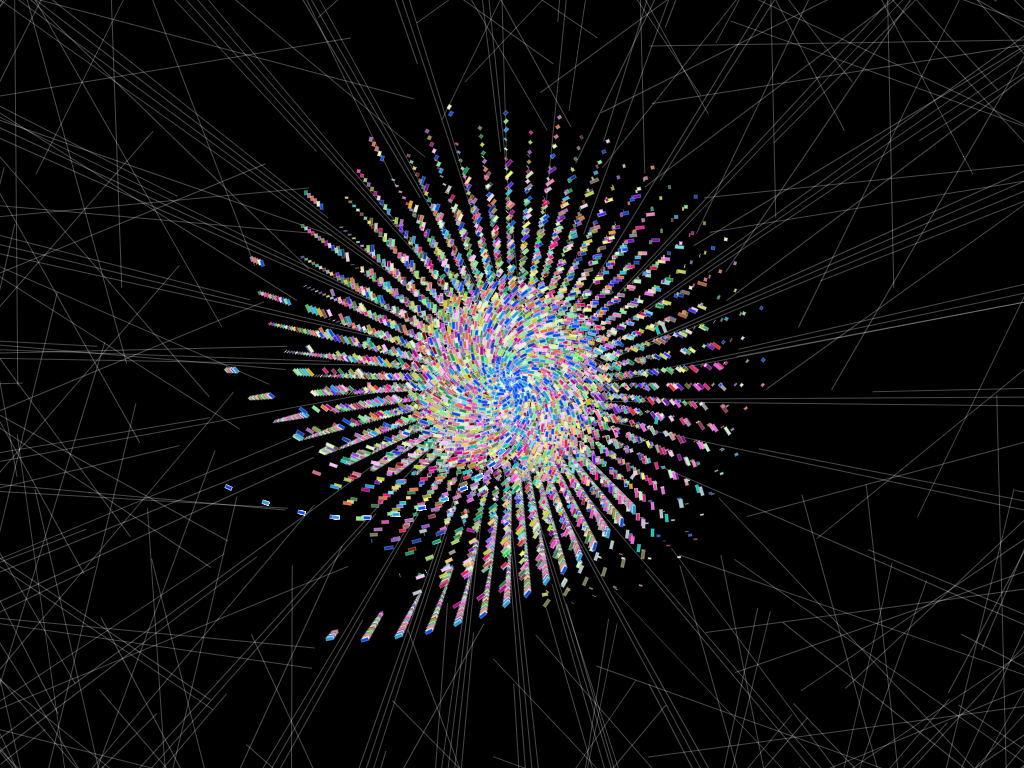

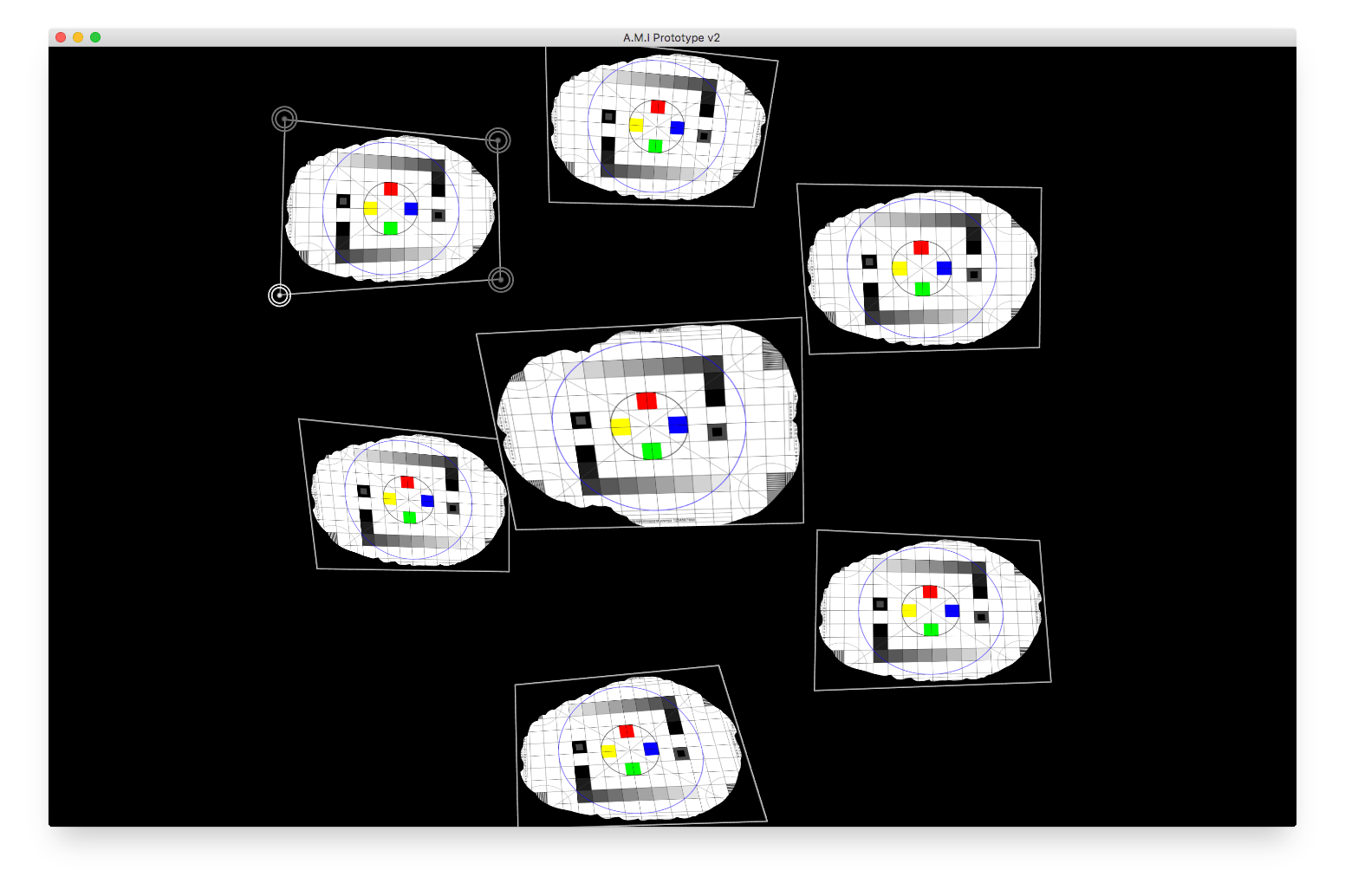

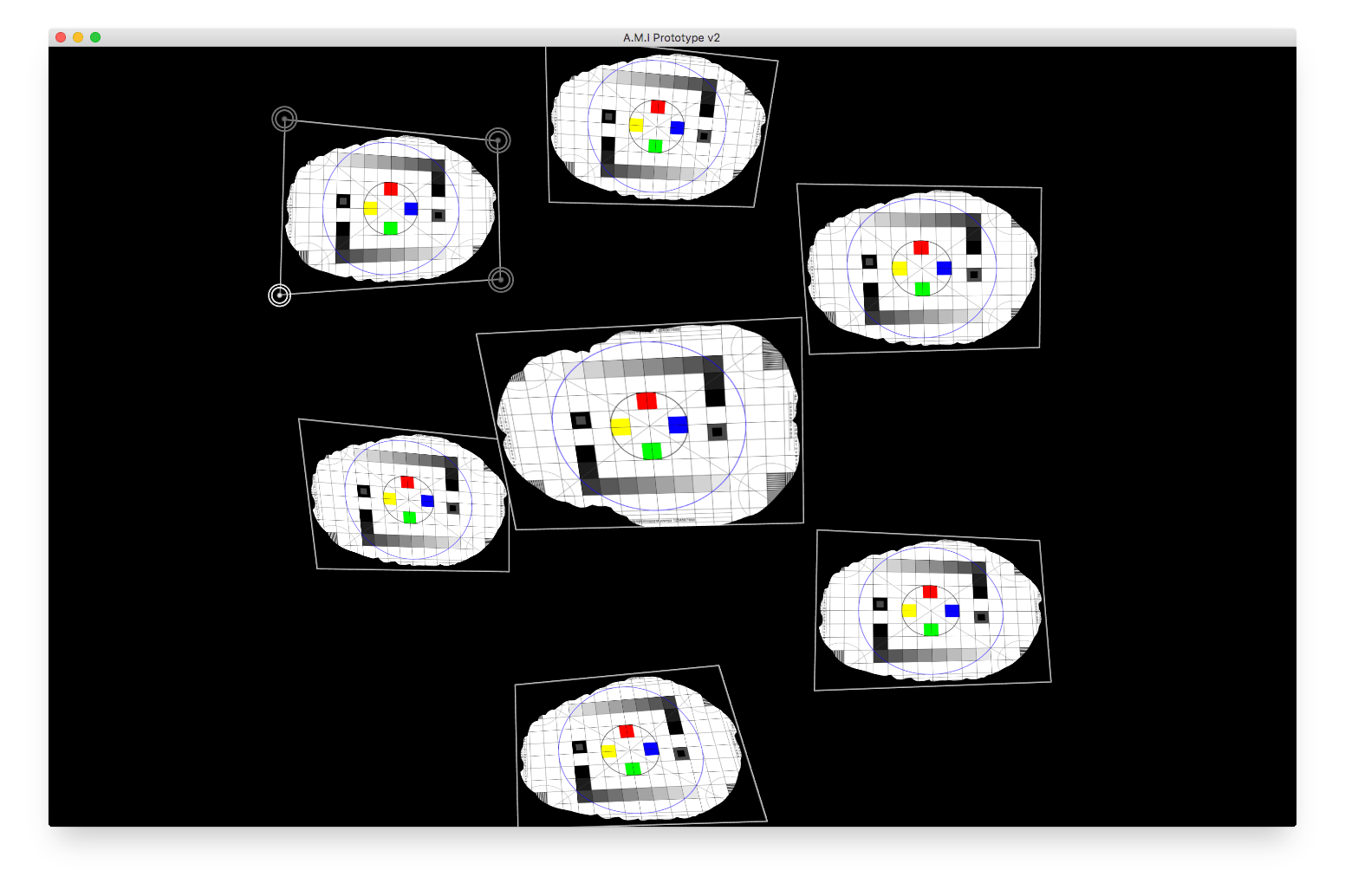

Front-end mapping system

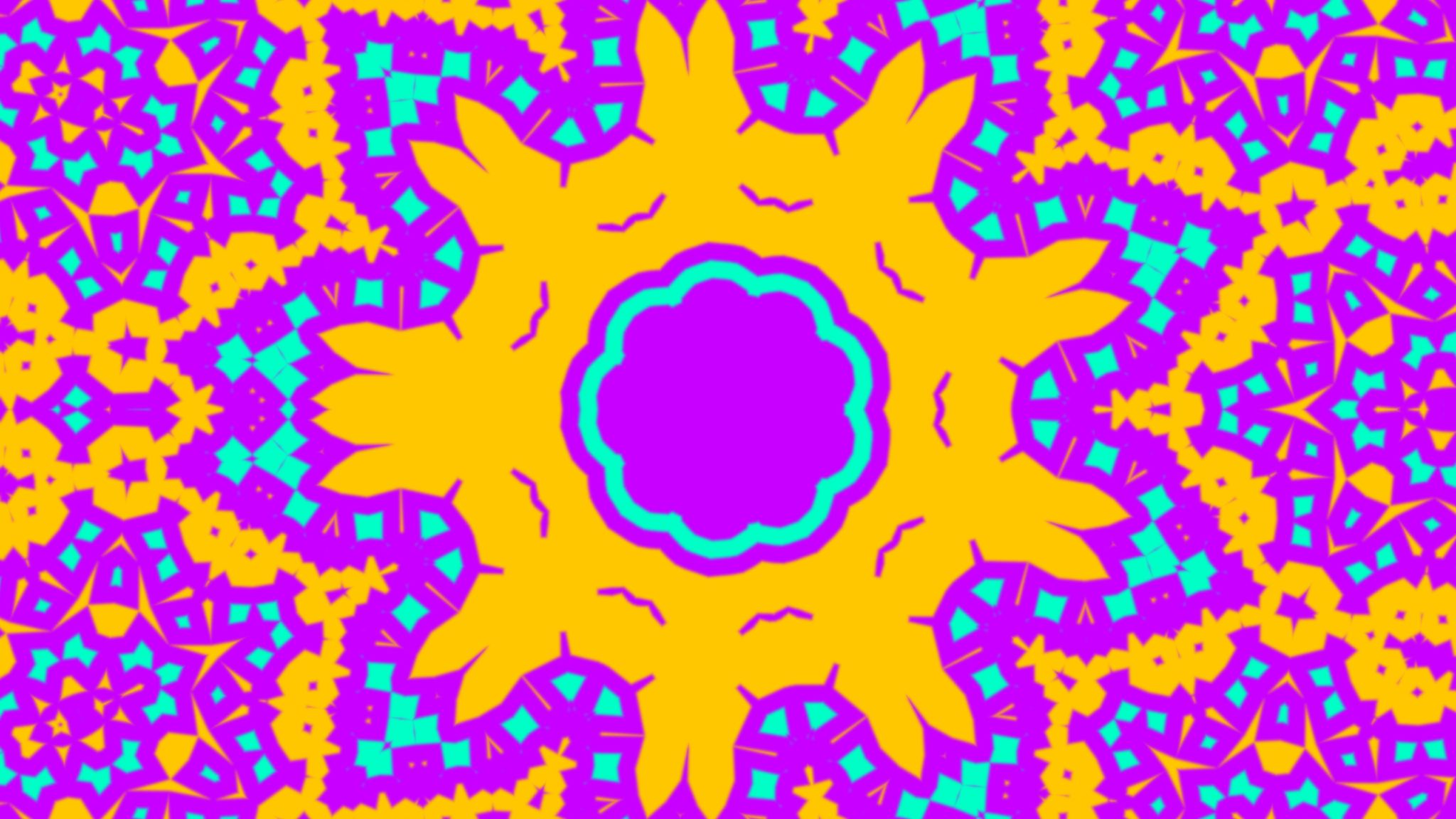

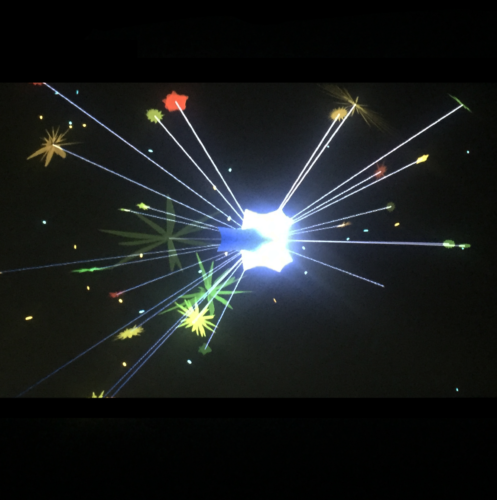

The mapping system was probably the most problematic part to build, for two reasons. Firstly, I wanted to projection map seven objects simultaneously, a particularly large number. Secondly, the visualisations would change for each user. The projection mapping included still photos and video (sourced from the internet), alongside a dynamic animation that mimics the auto-generated speech (also downloaded from the internet). It had to do this while maintaining a constant frame-rate above 30fps, as otherwise the visualisation would appear to be out of sync.

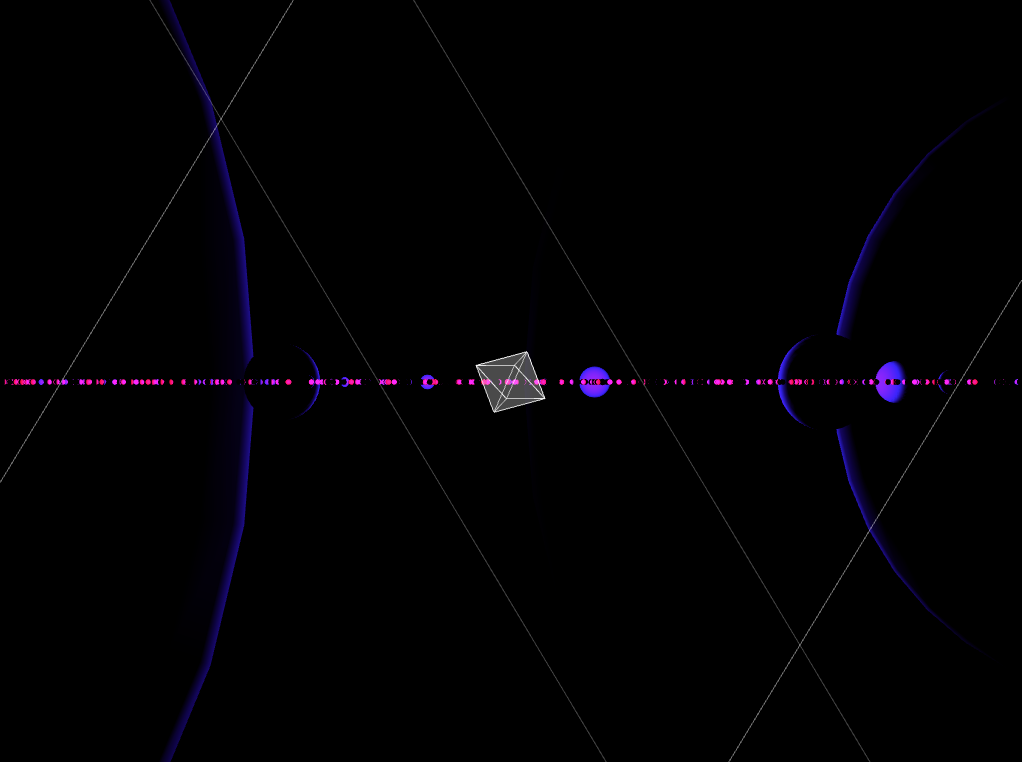

Above: The mapping mode within A.M.I's front-end system.

The first way I tackled this task was to build a system that would download the entire "payload" for each user prior to their experience. This prevented the streaming and buffering issues that have occurred in the past; the download was also completed over ethernet for reliability. Next I looked at the options available for creating the application. I wanted to program the piece in C++ as this gave me the highest likelihood of being able to streamline performance. After a small amount of experimentation I turned to Cinder2 for its great and fairly easy-to-use OpenGL implementation. By drawing every object in OpenGL I was able to maintain a consistently high frame-rate, averaging about 50fps on my (Early 2015) 3.1 GHz Intel Core i7 MacBook Pro. I also wrote a very easy to debug "script format", encoded in JSON, which is read into the application after being generated by the "script builder" process. This script dictates the visuals and sound, making every user's experience completely customisable.
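The "download everything first" idea can be sketched in a few lines. This is not the code used in the piece (the real application is written in C++ with Cinder); it is a minimal Python illustration that assumes the script names its files under "resource" and "voice" keys, as in the example script in the Script Builder section. The function name `collect_payload_paths` and the sample paths are made up for the illustration.

```python
import json


def collect_payload_paths(node, keys=("resource", "voice")):
    """Recursively gather every non-empty string stored under the given keys."""
    found = []
    if isinstance(node, dict):
        for key, value in node.items():
            if key in keys and isinstance(value, str) and value:
                found.append(value)
            else:
                found.extend(collect_payload_paths(value, keys))
    elif isinstance(node, list):
        for item in node:
            found.extend(collect_payload_paths(item, keys))
    return found


# Hypothetical two-line script; the paths are placeholders.
example = json.loads("""
{"script": [{"objects": [{"resource": "/images/a.jpg"}],
             "voice": "/speech/line0.wav"},
            {"objects": [{"resource": "/images/a.jpg"},
                         {"resource": "/images/b.jpg"}],
             "voice": "/speech/line1.wav"}]}
""")

# Deduplicate while keeping first-seen order, so each file is fetched once.
payload = list(dict.fromkeys(collect_payload_paths(example)))
print(payload)
```

Walking the structure recursively means the sketch does not depend on exactly how deeply the file references are nested. The resulting list is what would be fetched before the user's experience begins.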

Script Builder

This part was essential and initially quite hard to get right. The final solution utilised Python, PHP and shell, and can be viewed in its entirety here. A "script" is generated by my server every time a user interacts with A.M.I through the "interaction" system (via text message). This script is created using various analytical data gathered from the user's Twitter account, which is received during the interaction. It includes URLs to all images shown, the timing of every section, the pre-generated audio files and any special states that the application should be aware of. It takes the basic form seen below:

    {
      "success":1,
      "script":[
        {
          "is_video":"false",
          "preset":"",
          "objects":[
            {
              "delay":0,
              "resource":"/ami/data/users/portablestorm/images/profile_pic/original.jpg",
              "spotlight":"false"
            }]}
        ],
        "duration":14.526813,
        "text":"Hello joe, Don't be scared, I'm not real, I am Amy.",
        "voice":"/ami/speech/cerevoice/outputs/server_render/4408683888597416706.wav",
        "isolate":"",
        "order":2
      }]
    }

The script array contains multiple dictionaries that detail every second of the experience, with the running "duration" value stating when the application should switch to the next "line". This meant that I was able to completely customise every experience using the script generated by my server, and the mapping application itself had to do very little. This was great as it reduced the load on the mapping system3, increasing the frame-rate and also producing a system that was very simple to debug.
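To show how little the playback side needs to do, here is a minimal Python sketch of picking the current "line" from the script. It rests on an assumption: that "duration" is a running total in seconds from the start of the experience, so a line stays active until the elapsed time reaches its value. The function name `active_line` and the sample entries are hypothetical, and the real application does this in C++.

```python
from bisect import bisect_right


def active_line(script, elapsed):
    """Return the script entry showing at `elapsed` seconds, or None when finished."""
    # Play the lines in their declared order.
    lines = sorted(script, key=lambda line: line["order"])
    # Assumption: each "duration" is the running time at which the line ends.
    ends = [line["duration"] for line in lines]
    index = bisect_right(ends, elapsed)
    return lines[index] if index < len(lines) else None


# Hypothetical three-line script, deliberately out of order.
script = [
    {"order": 1, "duration": 9.5, "text": "second"},
    {"order": 0, "duration": 4.0, "text": "first"},
    {"order": 2, "duration": 14.5, "text": "third"},
]

print(active_line(script, 0.0)["text"])
print(active_line(script, 4.0)["text"])
print(active_line(script, 20.0))
```

Because the lookup is a binary search over a handful of numbers, it can be called on every frame without affecting the frame-rate.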

All the images referenced are stored locally on my server to improve reliability and are mostly downloaded within the start.sh shell script or the Python main.py file. This happens after the image URLs are gathered, either directly from Twitter (e.g. profile or user pictures) or from Bing Image Search once the topic of that section of the script, for example trains or dogs, has been determined. The search is completed using a very basic web-scraping script I wrote in Python. This allows the images shown to be truly dynamic, changing dramatically from person to person and making the experience very personal.

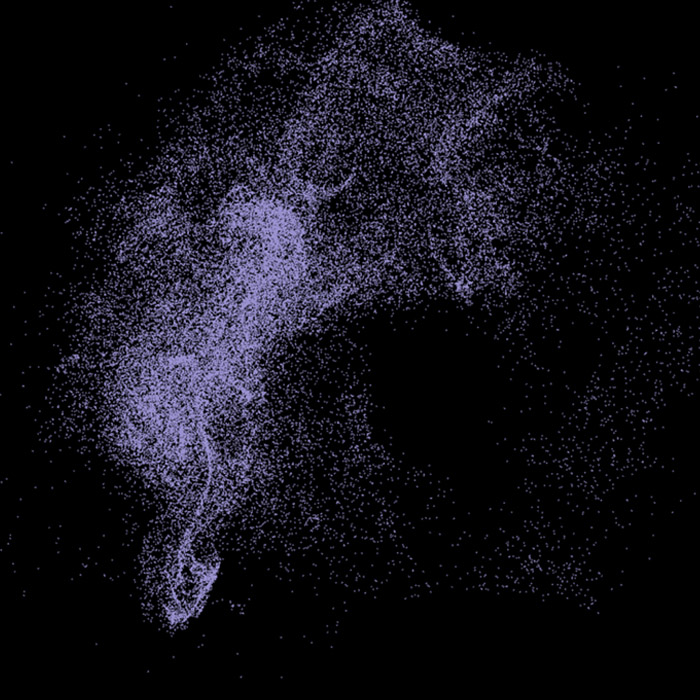

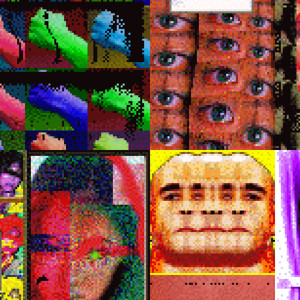

In order to determine the content for the script, each user's last 300 tweets were analysed by my sentiment analysis framework "stormkit"4. Written in Python, this kit can detect multiple moods using a "bag of words" approach. This allows me to rank tweets by percentage of sentiment for six moods: positivity, negativity, anger, sadness, scare and worry. I then use RAKE (Rapid Automatic Keyword Extraction) to extract keywords from the tweets, using this information to gather a good understanding of what is currently on the user's mind.
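A bag-of-words ranking of this kind can be illustrated with a short Python sketch. It is not stormkit's implementation: the tiny word lists, the scoring rule (share of a tweet's words that appear in a mood's list) and the function names are all assumptions made for the example.

```python
import re

# Hypothetical mini-lexicon; a real one would be far larger.
MOOD_WORDS = {
    "anger": {"hate", "furious", "angry"},
    "sadness": {"sad", "miss", "lonely"},
    "worry": {"worried", "nervous", "anxious"},
}


def mood_scores(tweet):
    """Score a tweet per mood as the percentage of its words in that mood's list."""
    words = re.findall(r"[a-z']+", tweet.lower())
    if not words:
        return {mood: 0.0 for mood in MOOD_WORDS}
    return {
        mood: 100.0 * sum(word in vocab for word in words) / len(words)
        for mood, vocab in MOOD_WORDS.items()
    }


def rank_by_mood(tweets, mood):
    """Order tweets from the strongest to the weakest score for one mood."""
    return sorted(tweets, key=lambda t: mood_scores(t)[mood], reverse=True)


tweets = [
    "Lovely day",
    "A bit anxious about trains today",
    "I am so worried and nervous",
]
print(rank_by_mood(tweets, "worry"))
```

Word order is ignored entirely, which is what makes the approach a "bag" of words: only the presence and count of mood-related words matter.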

In addition to this, the user's latest 100 photos are scanned for faces using C++ and OpenCV 3.0, extracting the faces and saving them in the folder hierarchy generated by the start.sh shell script. These are later used in the piece to show photos of people they probably know.

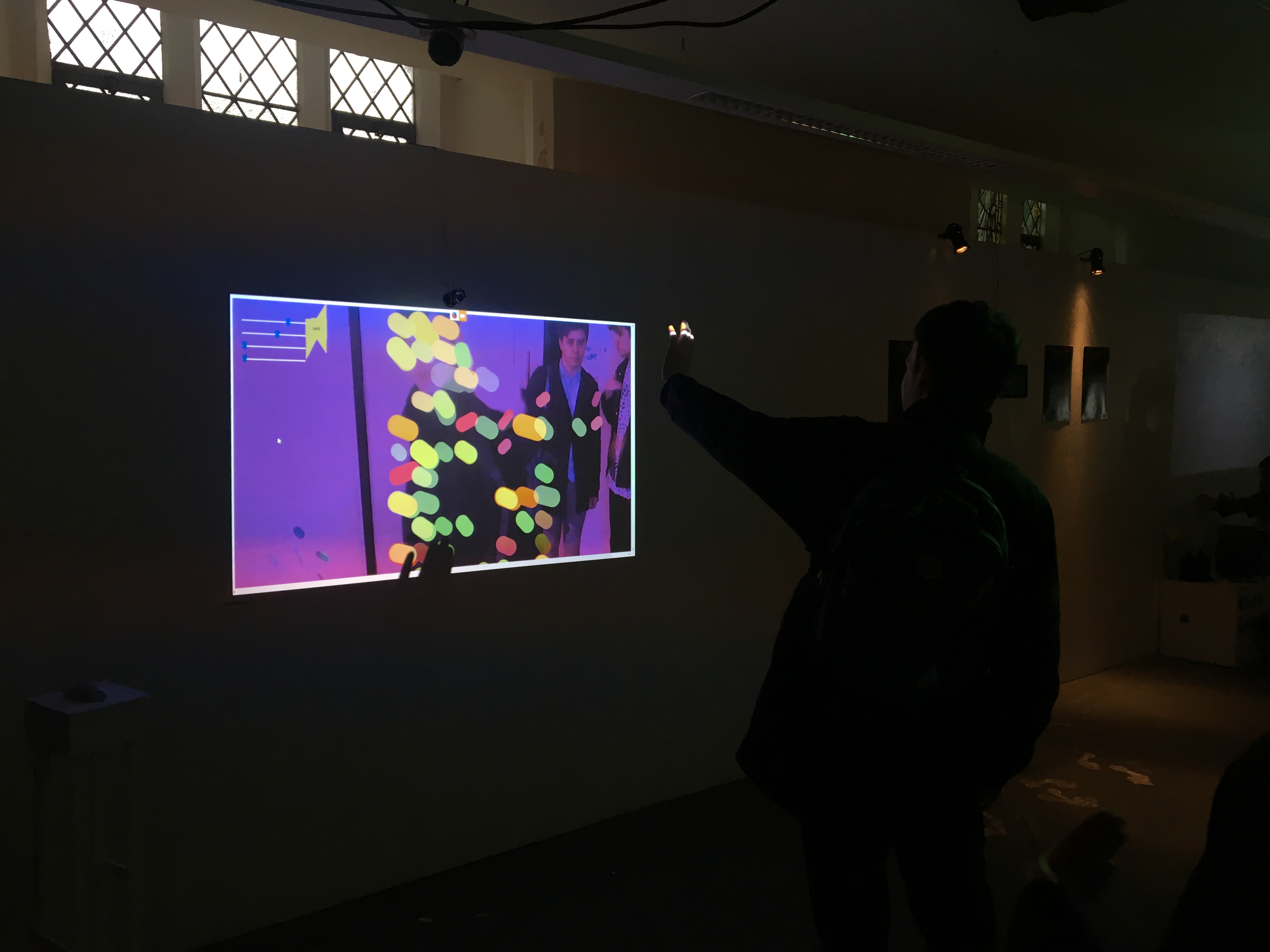

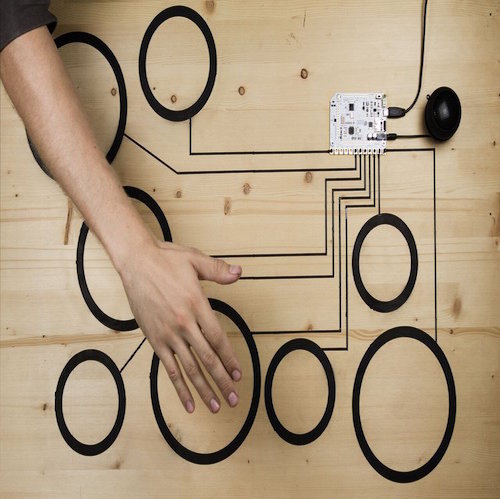

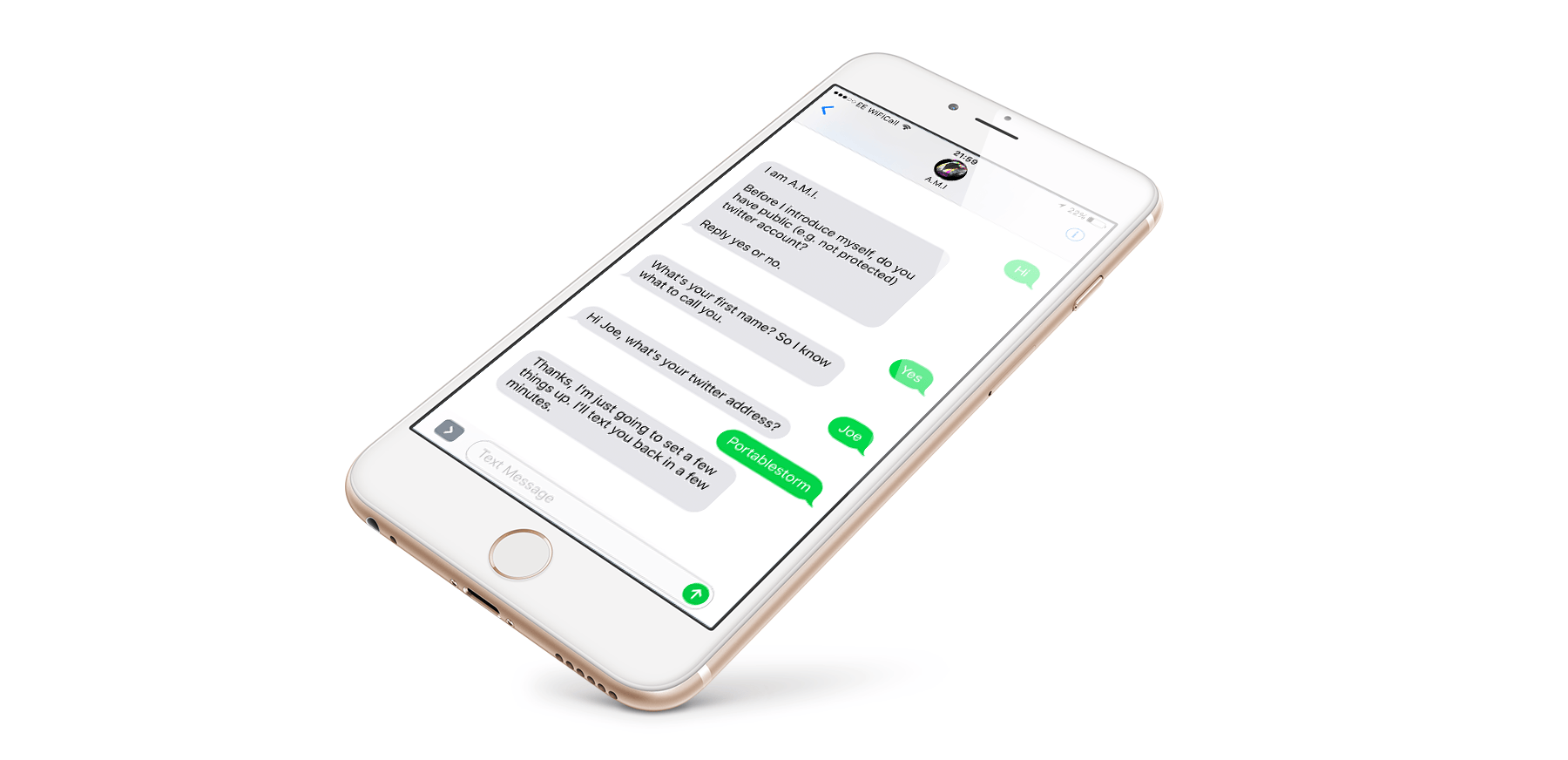

Interaction

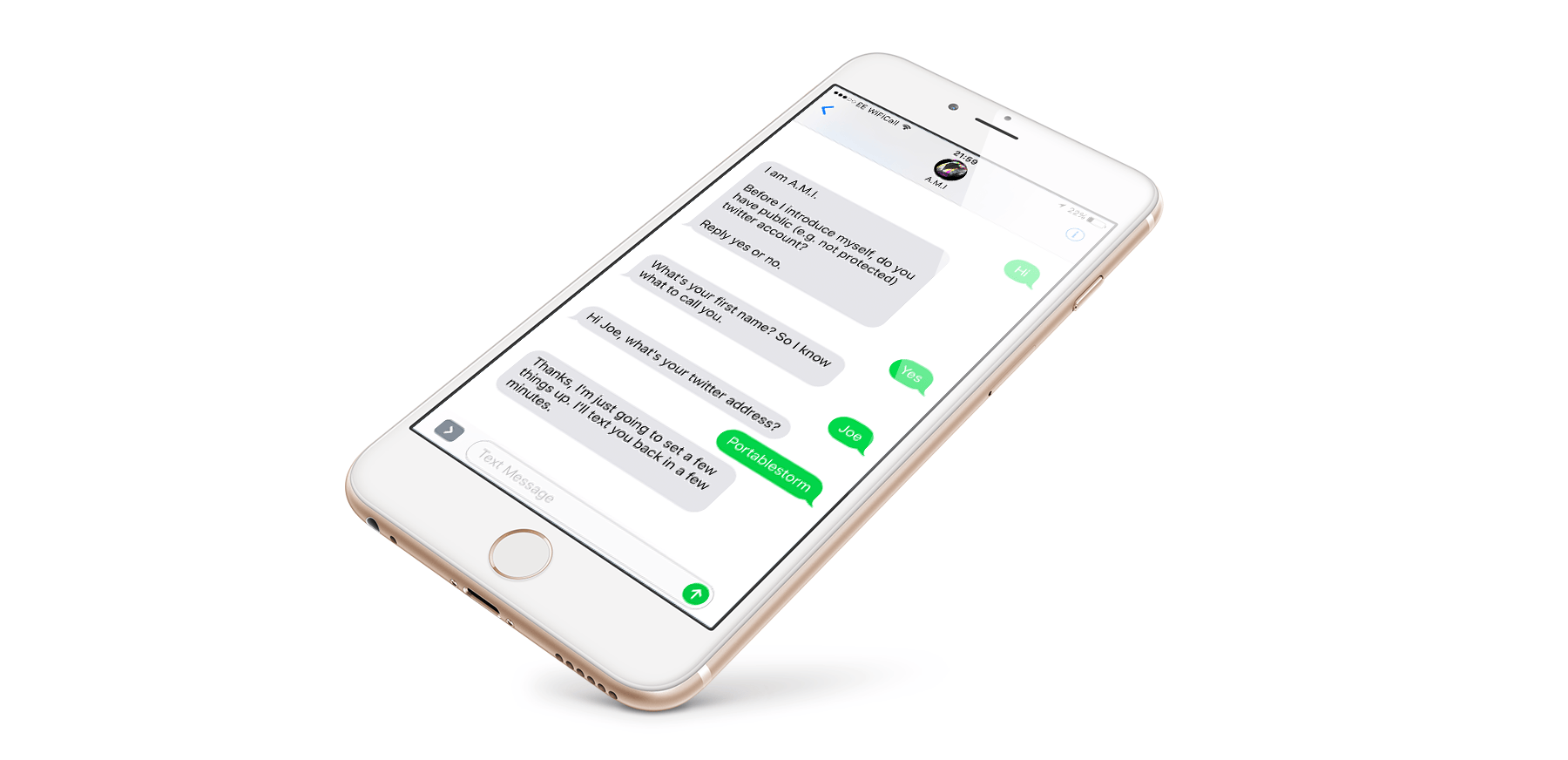

For a previous piece, "The Watsons", I had used a website hosted at joe.ac as the first point of interaction. While this method worked well, I had several issues with reception within the exhibition space, with many visitors not having any 3G or 4G signal. For this piece I decided to utilise text messaging, something that required no internet signal and in fact felt far more personal, leaving each user with the conversation as a small memento. Using Twilio I set up a basic chat-bot in PHP which introduced the piece and asked the user for their Twitter account. This would then be analysed by the "Script Builder" process and turned into a payload that the "Front-end mapping system" could download and run.
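The chat-bot exchange can be sketched as follows. The original bot was written in PHP; this Python version is only an illustration. It assumes the reply is returned to Twilio as TwiML (a small XML document with a Message element inside a Response element), and the handle rule, function names and reply wording are all invented for the example.

```python
import re
import xml.etree.ElementTree as ET


def extract_handle(body):
    """Pull a Twitter handle out of a text message, or return None."""
    # Prefer an explicit @handle anywhere in the message.
    match = re.search(r"@([A-Za-z0-9_]{1,15})\b", body)
    if match:
        return match.group(1)
    # Otherwise accept a message that is a single handle-shaped word.
    stripped = body.strip()
    if re.fullmatch(r"[A-Za-z0-9_]{1,15}", stripped):
        return stripped
    return None


def twiml_reply(text):
    """Wrap a reply in a minimal TwiML document."""
    response = ET.Element("Response")
    ET.SubElement(response, "Message").text = text
    return ET.tostring(response, encoding="unicode")


def handle_sms(body):
    """Ask for a handle until one arrives, then confirm it (wording is hypothetical)."""
    handle = extract_handle(body)
    if handle is None:
        return twiml_reply("What is your Twitter username?")
    return twiml_reply(f"Thanks @{handle}, building your experience now.")


print(handle_sms("hello there"))
print(handle_sms("it's @portablestorm"))
```

A single handle-shaped word such as "hello" would be treated as a username here, so a real bot would need to track where each conversation is before trusting the input.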

During the exhibition people really enjoyed interacting with the piece through text messaging. The personal touch increased the level of intimacy with "A.M.I", with each user becoming very invested in what she was saying as it felt very much directed towards them. It was also logistically very good for me. I created a queue system that would text users their position in the queue and remind them to come back shortly before the piece started. This meant users were not required to stick around until it was their turn and could instead go and look at the rest of the exhibition before coming back when needed. This reduced congestion around the piece, something I saw in particular with "The Watsons".
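The queue logic is simple enough to sketch in Python. This is an illustration rather than the system used at the exhibition: the class name, the message wording and the `remind_at` threshold are assumptions, and actually sending the texts is left to the caller.

```python
from collections import deque


class VisitorQueue:
    """First-come, first-served queue that produces position and reminder texts."""

    def __init__(self, remind_at=1):
        self._waiting = deque()
        # Visitors at or ahead of this position get a "come back" reminder.
        self.remind_at = remind_at

    def join(self, phone):
        """Add a visitor and return the message telling them their position."""
        self._waiting.append(phone)
        return f"You are number {len(self._waiting)} in the queue."

    def start_next(self):
        """Pop the next visitor and list (phone, message) reminders for those now close."""
        current = self._waiting.popleft() if self._waiting else None
        reminders = [
            (phone, f"Nearly your turn: you are number {pos} in the queue.")
            for pos, phone in enumerate(self._waiting, start=1)
            if pos <= self.remind_at
        ]
        return current, reminders


queue = VisitorQueue()
print(queue.join("visitor-a"))
print(queue.join("visitor-b"))
print(queue.start_next())
```

Reminders are generated at the moment the previous visitor starts, which gives the next person roughly one experience's length to walk back to the piece.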

Demo

Final words

For this piece, intimacy was integral to the experience. I wanted the user to feel a set of emotions, to be overwhelmed with information: the almost droning monotone voice constantly stating facts and information; the glaring bright visuals quickly changing, leaving little time to take in their contents; the backing sound's booming heartbeat and anxious ticking causing the viewer to feel on edge and more alert. The response I got on the opening night was overwhelmingly positive. People found the piece to be incredibly adaptive and immersive, allowing users to really relate to it, with personal images acting as a way to draw the user in and make them feel a part of the artwork.

It was an incredibly daunting task to create this piece, as not only was it technically challenging and physically difficult, it was much more openly personal than my usual pieces. This is why, for me, how the user related to it was of such importance.

Gitlab Repos

http://gitlab.doc.gold.ac.uk/A.M.I (most work in progress done here in separate repos)

http://gitlab.doc.gold.ac.uk/jmcal001/a.m.i (mapping system)

http://gitlab.doc.gold.ac.uk/jmcal001/a.m.i-server (server-side system)

Sources

1 https://joemcalister.com/the-watsons

2 https://www.libcinder.org

3 https://github.com/paulhoux/Cinder-Warping

4 https://github.com/joemcalister/stormkit