Double Composure

by: Daniel Sutton-Klein

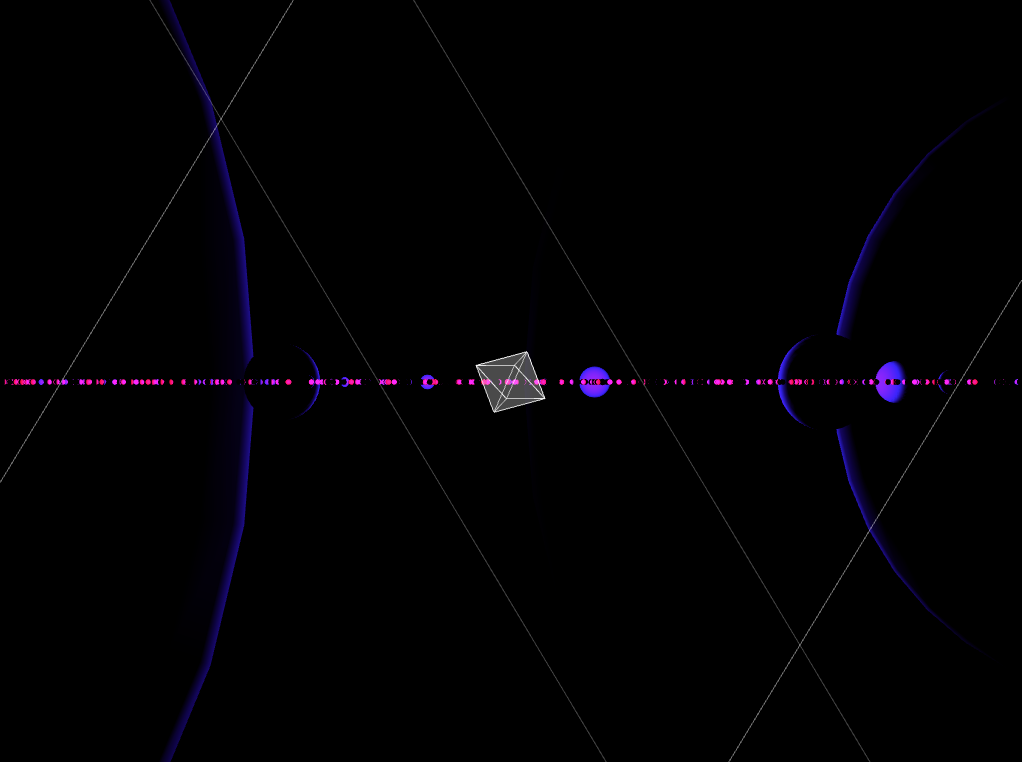

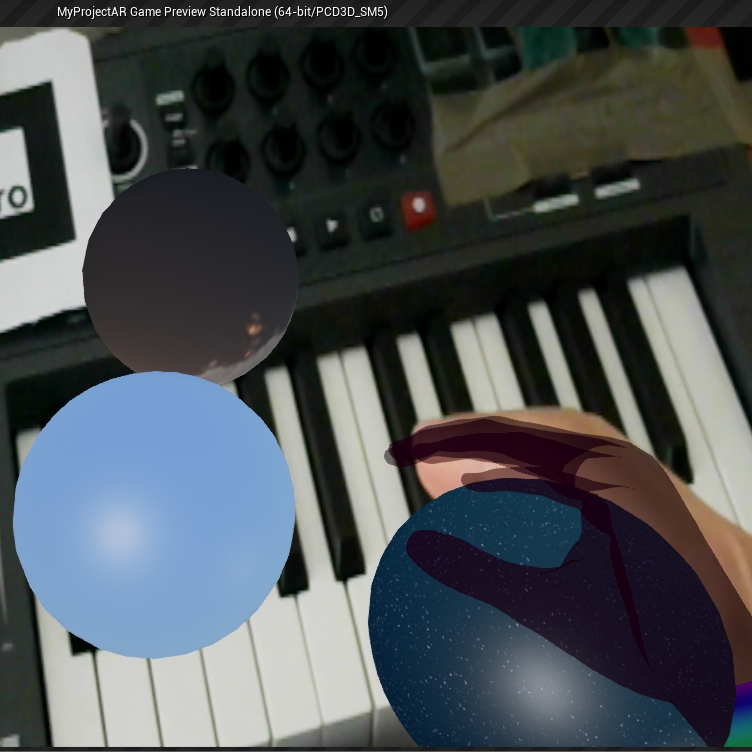

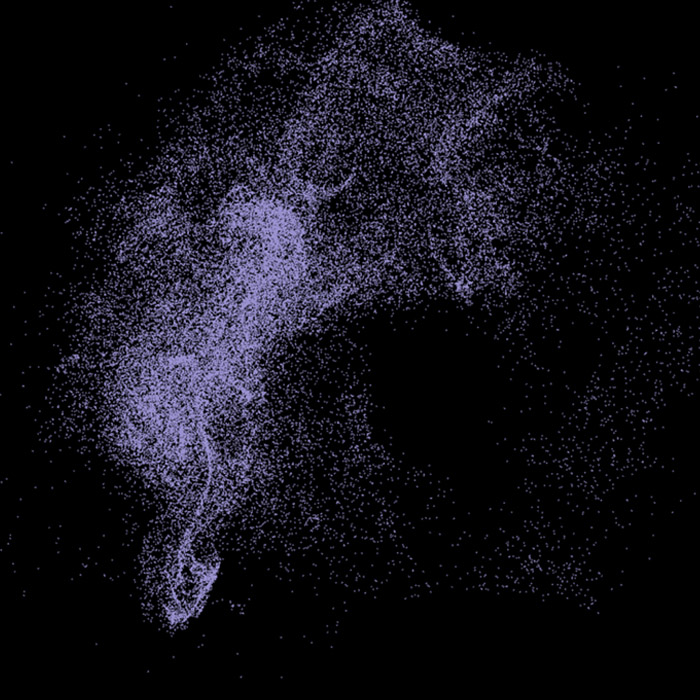

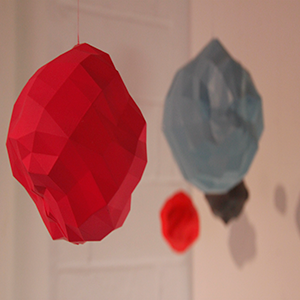

Double Composure is a 'low-poly' style digital media transformation tool designed for artistic effects. Typical usage follows three steps: opening a photo or video, customising cells, and then reintegrating them into the original media. Each step offers ways to control the output; however, the processing is entirely deterministic from the moment buttons are released. The primary editing menu is the 'cell editor', which offers a variety of tessellations and other options. Editing external media is inspired by the way printers and displays use dots and pixels: you paint resolution in the 'iteration editor', which Double Composure uses to reconstitute the loaded image with shapes. This feature allows a subject to be obscured or swarmed by the geometry, leading to the final method of realisation: augmented reality. Cells can be stuck onto any surface in a video, making them truly part of the environment. The project set out to recontextualise computer science and mathematics but ended up producing a digital reality aesthetic. Self-reference continues down to the fractal multiplication of cells, which uses a simple iterated function system. In this blog I demonstrate the main developments (or effects), although not all were successfully refactored into the end result:

http://gitlab.doc.gold.ac.uk/dsutt001/double_composure/tree/customTiling

Influences

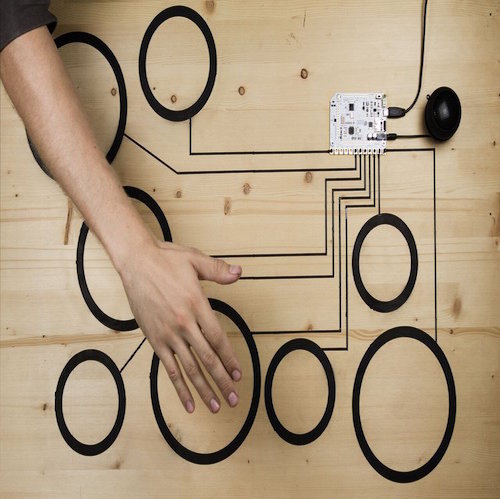

There were three initial influences on my project: the increasing use of biofeedback in art, the nexus of computer vision and augmented reality, and the techniques and possibilities of image transformation.

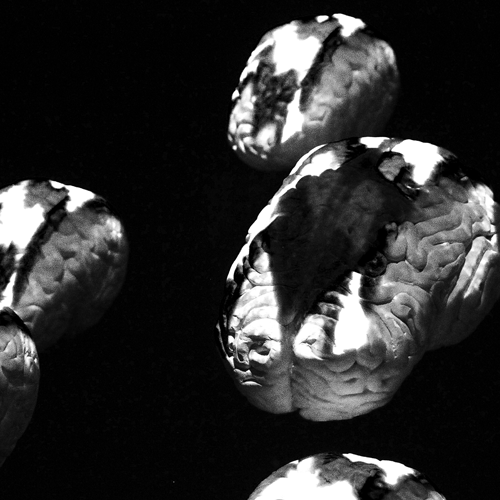

Biofeedback: the electrical activity of the skin was used in a 1965 solo musical performance by Alvin Lucier [1], within decades of the phenomenon first being observed [2]. It was also deemed useful by sci-fi author and church founder L. Ron Hubbard [3] for more controversial purposes. A recent artistic biofeedback piece sees a brain sculpture illuminated by the participant's brainwaves [4]. Experienced as a "constantly changing biofeedback loop", it inspired the idea of combining data into a single output.

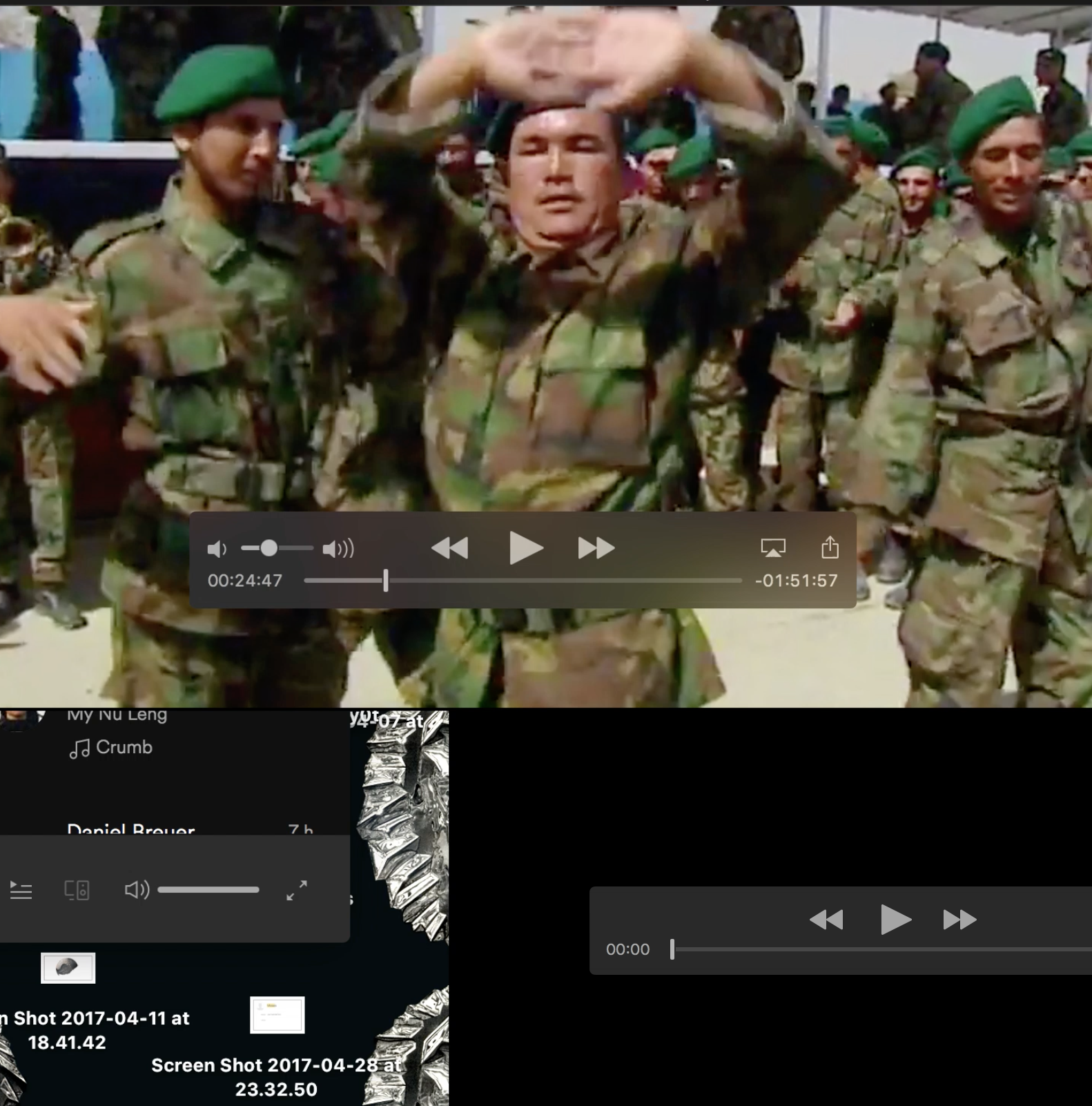

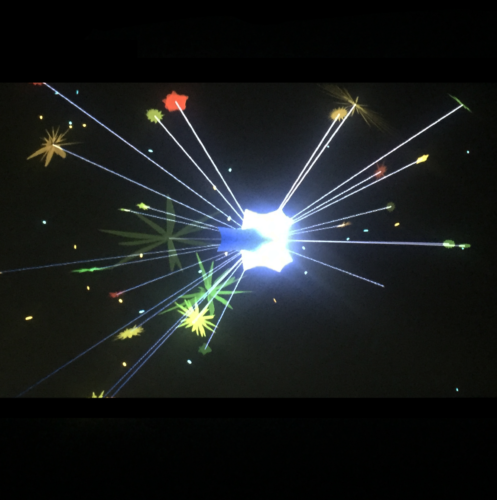

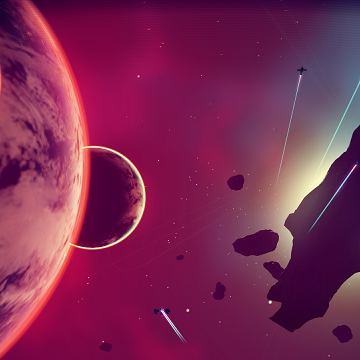

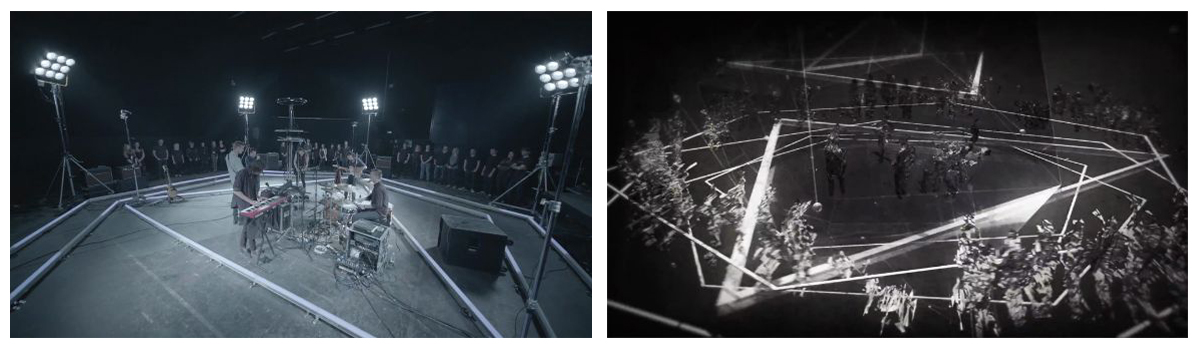

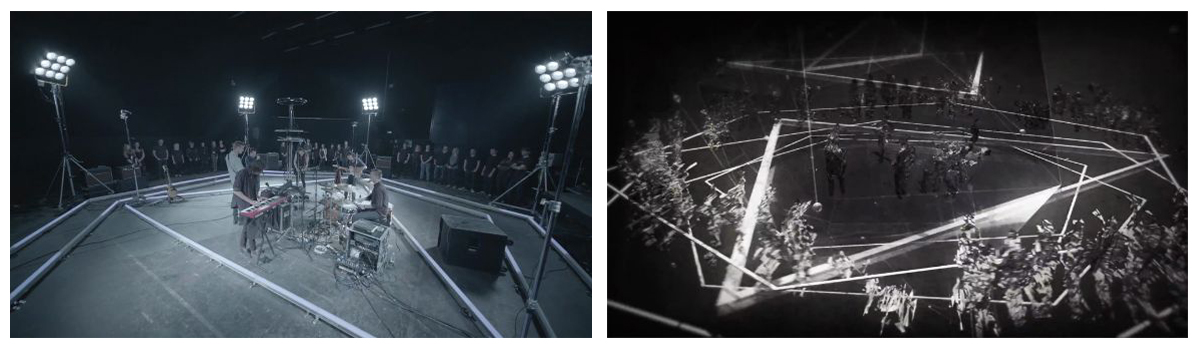

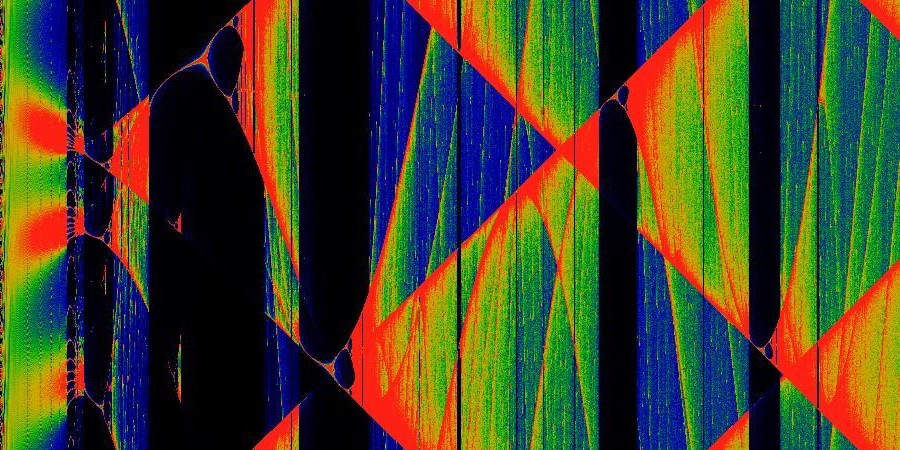

Depth cameras being used in The Maccabees - In The Dark

Computer vision (CV): CV encompasses tasks that, when solved, offer a different type of data from biofeedback. Common in military robotics, selfie apps and medical imaging alike, these tasks try to 'see' using algorithms and maths. CV has changed the film industry by rendering traditional techniques such as rotoscoping virtually obsolete. Augmented reality (AR) superimposes computer-generated graphics over real images with the help of CV. Gamers use it every day to interact with virtual entities without the sensory dissociation of normal 3D graphics. In the video for The Maccabees - In The Dark [5], regular cameras are synchronised with depth cameras and the combined data fluctuates with the music (above). The video bridges the gap between reality and virtual reality, showing impossible events taking place in seamless transition with the band. I suspect there are even more possibilities with raw CV and creative code.

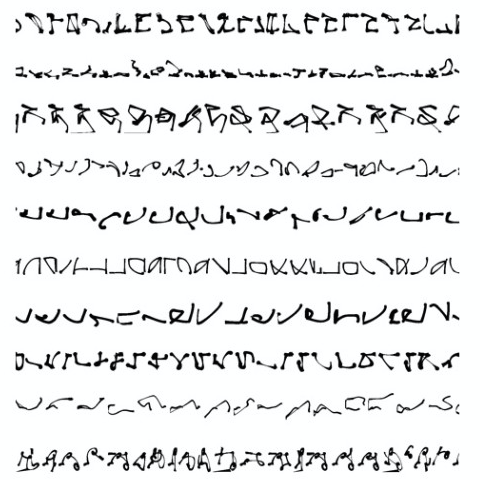

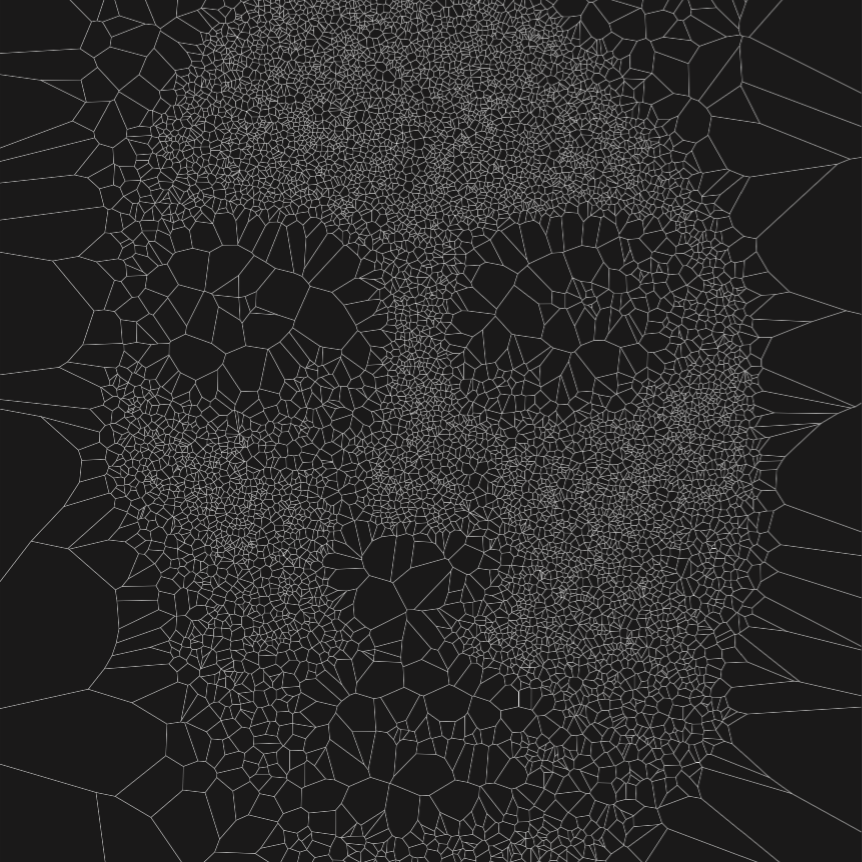

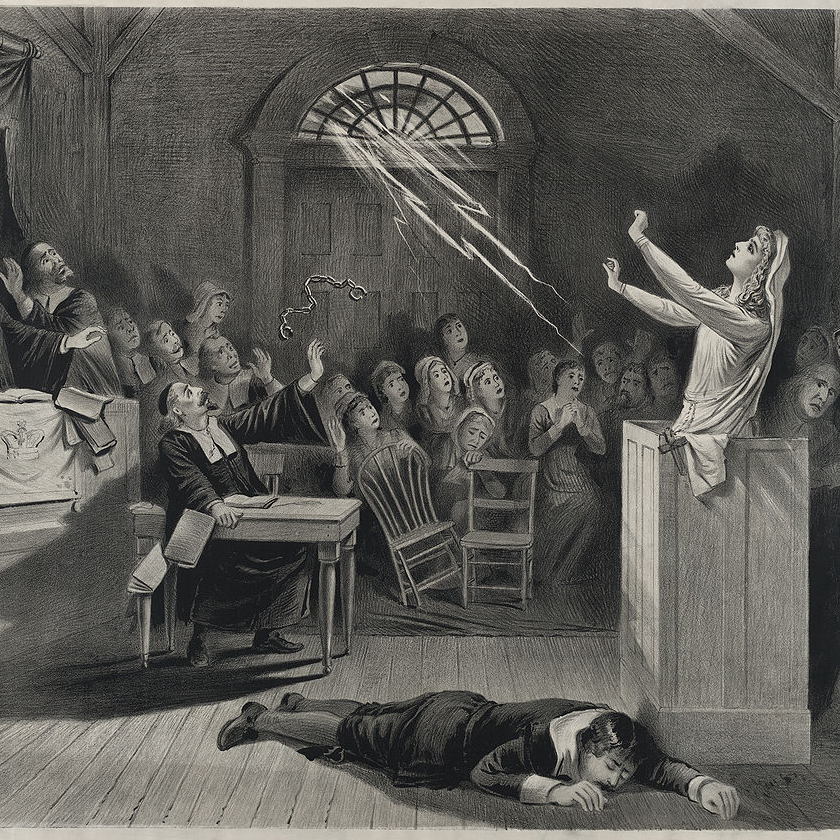

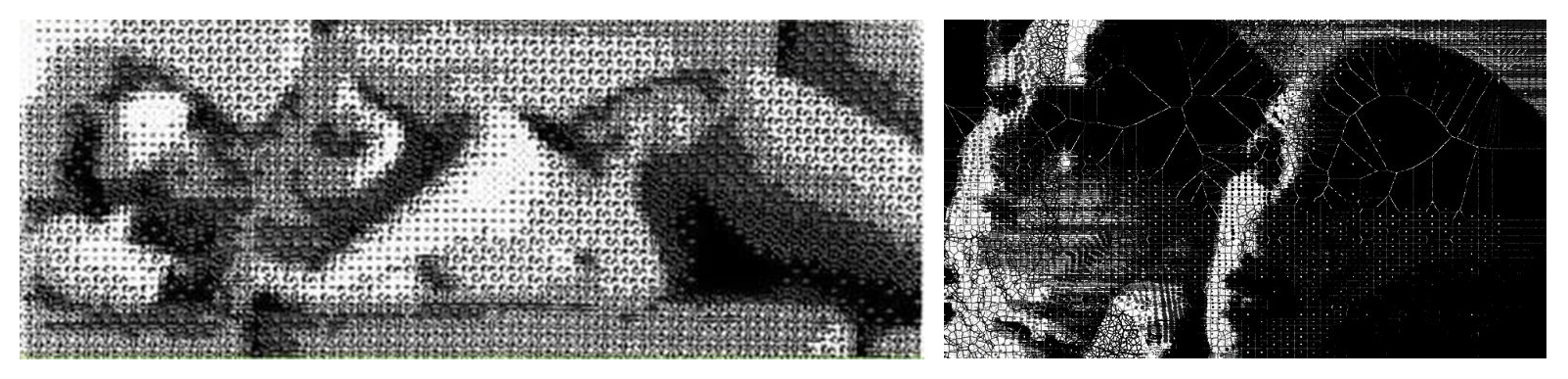

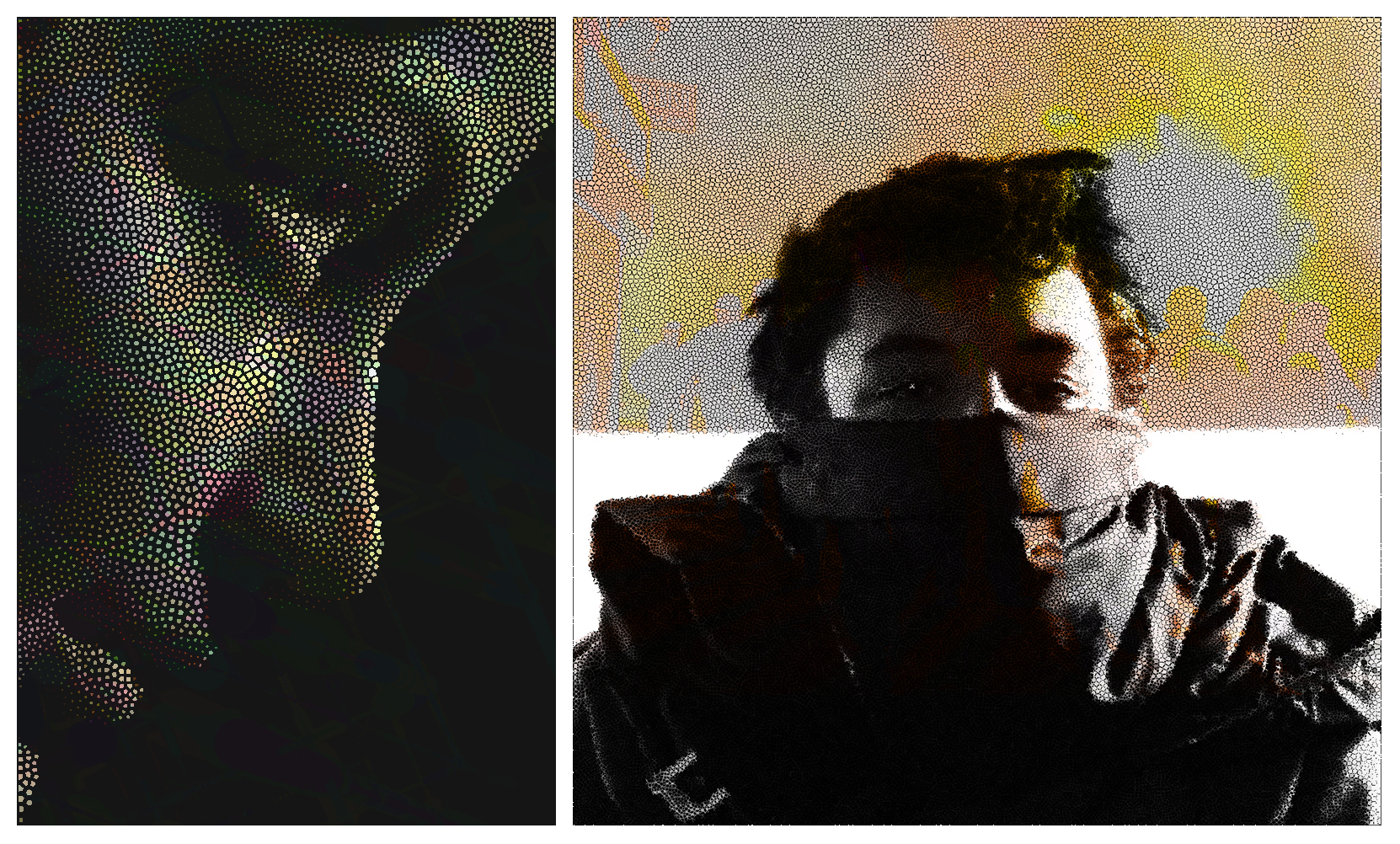

ASCII-based image experiment from Bell Laboratories (left) and a Voronoi diagram used in a music video [7] (right)

Image transformations: digital imaging existed long before today's high-resolution displays with three channels of 256 levels each; the 1966 nude above is one such example. Made at Bell Laboratories, it represents the first symbolically reconstituted image from a scanned photo [6]. Using 12 ASCII characters, it approaches the minimum amount of visual information needed to see, and consequently shows how much information can be obfuscated or removed. It also demonstrates that data can be transformed and represented in different ways, highlighting the subjective nature of visual perception.
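A rough sketch of symbolic reconstitution: each 8-bit grey value is quantised into one of 12 levels and replaced by a character. The 12-character ramp below is a hypothetical choice for illustration, not the set used at Bell Laboratories, and the function names are likewise illustrative.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <string>
#include <vector>

// Hypothetical 12-character ramp from dark (dense glyph) to light (blank).
// Illustrative only: not the character set used at Bell Laboratories.
const std::string kRamp = "@#%*+=~-:,. ";

// Quantise an 8-bit brightness (0 = black, 255 = white) into one of 12 bins.
char toGlyph(std::uint8_t v) {
    std::size_t index = static_cast<std::size_t>(v) * kRamp.size() / 256;
    return kRamp[index];
}

// Reconstitute a row-major greyscale image (width * height bytes) as text lines.
std::vector<std::string> toAscii(const std::vector<std::uint8_t>& pixels,
                                 std::size_t width, std::size_t height) {
    assert(kRamp.size() == 12 && pixels.size() == width * height);
    std::vector<std::string> lines(height, std::string(width, ' '));
    for (std::size_t y = 0; y < height; ++y)
        for (std::size_t x = 0; x < width; ++x)
            lines[y][x] = toGlyph(pixels[y * width + x]);
    return lines;
}
```

Fewer characters in the ramp means fewer grey levels survive, which is one way to experiment with how little visual information is needed to recognise a subject.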

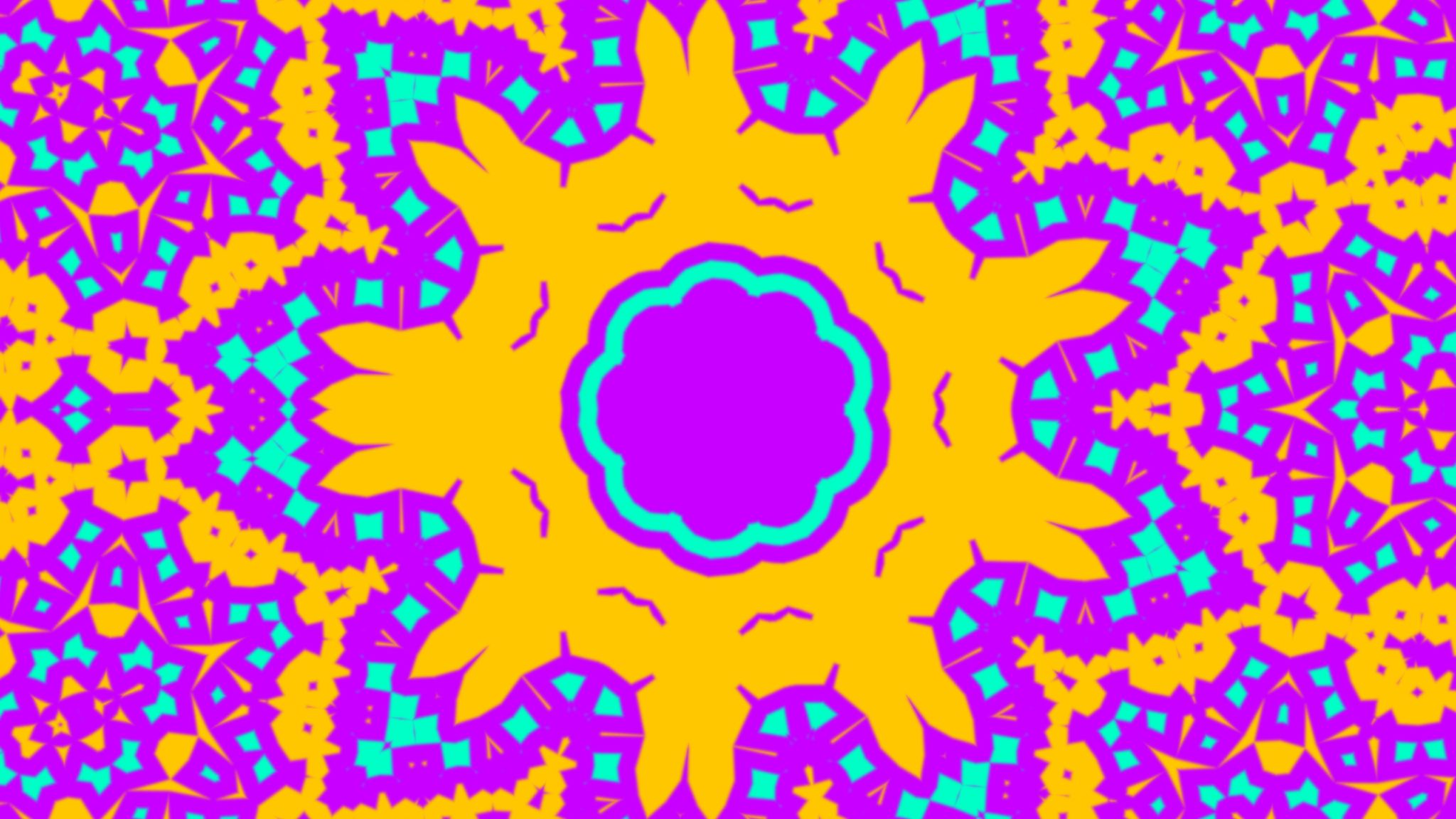

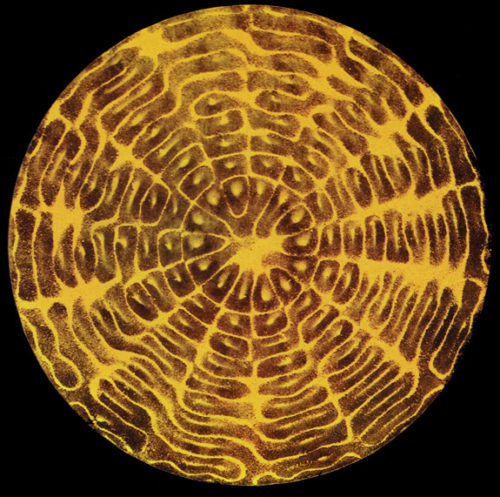

Generative images are also produced in mathematics, for example in the beautiful and well-known area of fractals. For thousands of years, cultures around the world have studied and displayed geometric tilings. Voronoi diagrams, seen above in Unknown Archetype - TRIPP [7], partition a space into regions, each containing the points closest to one seed point; the resulting patterns resemble many natural phenomena. The video uses brightness to offset Voronoi nodes, organically transforming real subjects into mathematical form. Organic qualities are found in other areas of maths, such as the bifurcation diagram below, derived entirely from numbers [8]. I am inspired by deterministically generated patterns because they seem to emanate the themes of their creation, even when the background knowledge is not understood.
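The nearest-seed rule that defines a Voronoi diagram can be sketched by labelling every pixel with the index of its closest seed. This brute-force version costs O(pixels * seeds) and only illustrates the definition; it does not model the brightness-driven node offsets used in the video, and faster constructions such as Fortune's sweep-line algorithm exist. The names are hypothetical.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

struct Seed { double x, y; };

// Label each pixel of a width x height grid with the index of its nearest seed
// (squared Euclidean distance; ties go to the lower index).
std::vector<int> voronoiLabels(const std::vector<Seed>& seeds, int width, int height) {
    assert(!seeds.empty() && width > 0 && height > 0);
    std::vector<int> labels(static_cast<std::size_t>(width) * height);
    for (int y = 0; y < height; ++y) {
        for (int x = 0; x < width; ++x) {
            int best = 0;
            double bestDist = -1.0;
            for (std::size_t i = 0; i < seeds.size(); ++i) {
                double dx = x - seeds[i].x, dy = y - seeds[i].y;
                double d = dx * dx + dy * dy;
                if (bestDist < 0.0 || d < bestDist) {
                    bestDist = d;
                    best = static_cast<int>(i);
                }
            }
            labels[static_cast<std::size_t>(y) * width + x] = best;
        }
    }
    return labels;
}
```

Colouring each label with the average colour of its region is a common next step for turning the partition into an image effect.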

'Bifurcation diagram' derived mathematically by Linas Vepstas
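Diagrams of this kind are typically produced by iterating a simple map and plotting where its orbits settle. The sketch below uses the logistic map x -> r * x * (1 - x), the textbook example; whether Vepstas's diagram uses this exact map is an assumption, and bifurcation is a hypothetical function name.

```cpp
#include <cassert>
#include <utility>
#include <vector>

// For each r in [rMin, rMax], iterate the logistic map from x0, discard the
// first `transient` iterations, then record the next `keep` values as (r, x).
std::vector<std::pair<double, double>> bifurcation(double rMin, double rMax, int steps,
                                                   int transient, int keep, double x0 = 0.5) {
    assert(steps >= 2 && transient >= 0 && keep > 0);
    std::vector<std::pair<double, double>> points;
    for (int i = 0; i < steps; ++i) {
        double r = rMin + (rMax - rMin) * i / (steps - 1);
        double x = x0;
        for (int t = 0; t < transient; ++t) x = r * x * (1.0 - x);  // settle
        for (int k = 0; k < keep; ++k) {
            x = r * x * (1.0 - x);
            points.emplace_back(r, x);  // one dot of the diagram
        }
    }
    return points;
}
```

Plotting every returned (r, x) pair as a dot gives the familiar period-doubling picture: at r = 2.5 the orbit settles on the single value 0.6, while at r = 3.2 it alternates between two values.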

Of these three influences, while biofeedback was the starting point, ultimately the possibilities of image transformation were pivotal.

Intentions

The original intentions were set out as follows:

New media providing an experience was the most consistent theme of inspiration, so this project aims to encompass that with a focus on recorded/realtime visualisation. Leaving the format of the end piece open for exploration aims to free creative development.

Digital interpretation of an input will be visually incorporated, and the output should resemble each element of its composure. The aim to avoid total abstraction is an aesthetic choice, and one that should recognise the maths, biology and computer science applications that usually sit behind the scenes. Collaboration at a later stage would increase the accessibility of interdisciplinary elements in the project, diversifying the format and audience even further.

The project will incorporate the strange phenomenon of self-reference in an attempt to make it stimulating. This theme could take several forms, as it is ubiquitous in language, mathematics, philosophy, programming and art. The theme might additionally involve areas of maths as a more tangible point of discussion, aiming to reflect the lag between the physical and immaterial worlds. Implications of this lag can affect everyone, for example the current government's proposals to ban encryption and to become "the global leader of the regulation of [...] the internet".

The final piece will be realised as a collation of intermediate experiments; 7 tests in the first weeks should precede 3 extended tests building on the most effective and viable ideas. Time to explore a possible secondary element (biofeedback, collaboration or mixed-media) is also planned.

Realisation

High-quality saving was, of course, one of the first developments and challenges I faced. For efficiency, OpenGL (which openFrameworks uses for drawing) doesn't render pixels outside the window, so saving images larger than my small laptop screen required a few extra lines of code using a frame buffer object (FBO). Video saving used the FBO and FFmpeg (wrapped by ofxVideoRecorder), which took me back to the first time I used FFmpeg, or any sort of GUI-less tool, 4 years ago [10]. I also implemented lossless saving by describing circles in the SVG specification and writing them to a text file, which later became useful after I realised the FBO's limitations with huge (print-resolution) images.
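Vector saving of this kind needs little more than string formatting. Below is a minimal sketch, assuming each cell is a circle with an RGB fill; Circle, toSvg and saveSvg are hypothetical names, not the project's code. Because the file stores geometry rather than pixels, it can be rasterised later at any print resolution without the FBO's size limits.

```cpp
#include <cassert>
#include <fstream>
#include <sstream>
#include <string>
#include <vector>

struct Circle { double cx, cy, r; int red, green, blue; };

// Serialise circles as an SVG document; each <circle> element keeps exact geometry.
std::string toSvg(const std::vector<Circle>& circles, int width, int height) {
    assert(width > 0 && height > 0);
    std::ostringstream out;
    out << "<svg xmlns=\"http://www.w3.org/2000/svg\" width=\"" << width
        << "\" height=\"" << height << "\">\n";
    for (const Circle& c : circles) {
        out << "  <circle cx=\"" << c.cx << "\" cy=\"" << c.cy << "\" r=\"" << c.r
            << "\" fill=\"rgb(" << c.red << ',' << c.green << ',' << c.blue << ")\"/>\n";
    }
    out << "</svg>\n";
    return out.str();
}

// Write the document to a text file; returns false if writing failed.
bool saveSvg(const std::string& path, const std::vector<Circle>& circles, int width, int height) {
    std::ofstream file(path);
    file << toSvg(circles, width, height);
    return static_cast<bool>(file);
}
```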

Early on I also looked into 'shaders' as an alternative to openFrameworks graphics, offering much faster performance and a conceptually different, 'parallel' style of programming. Simply feeding and playing back video with GLSL fragment shaders took me a week, which showed how steep the learning curve was. After considering various factors I chose not to continue with shaders, feeling that my ideas could be developed further in C++ without learning a new language. As a consequence I shifted focus specifically to pre-recorded media rather than realtime.

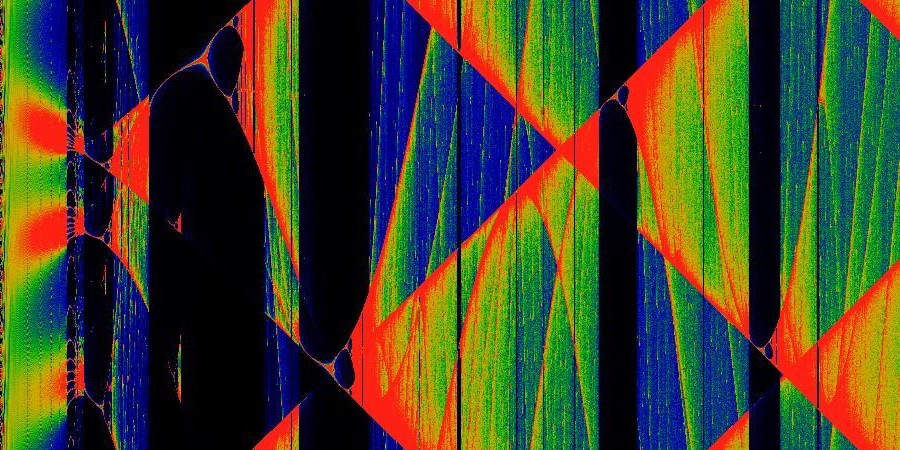

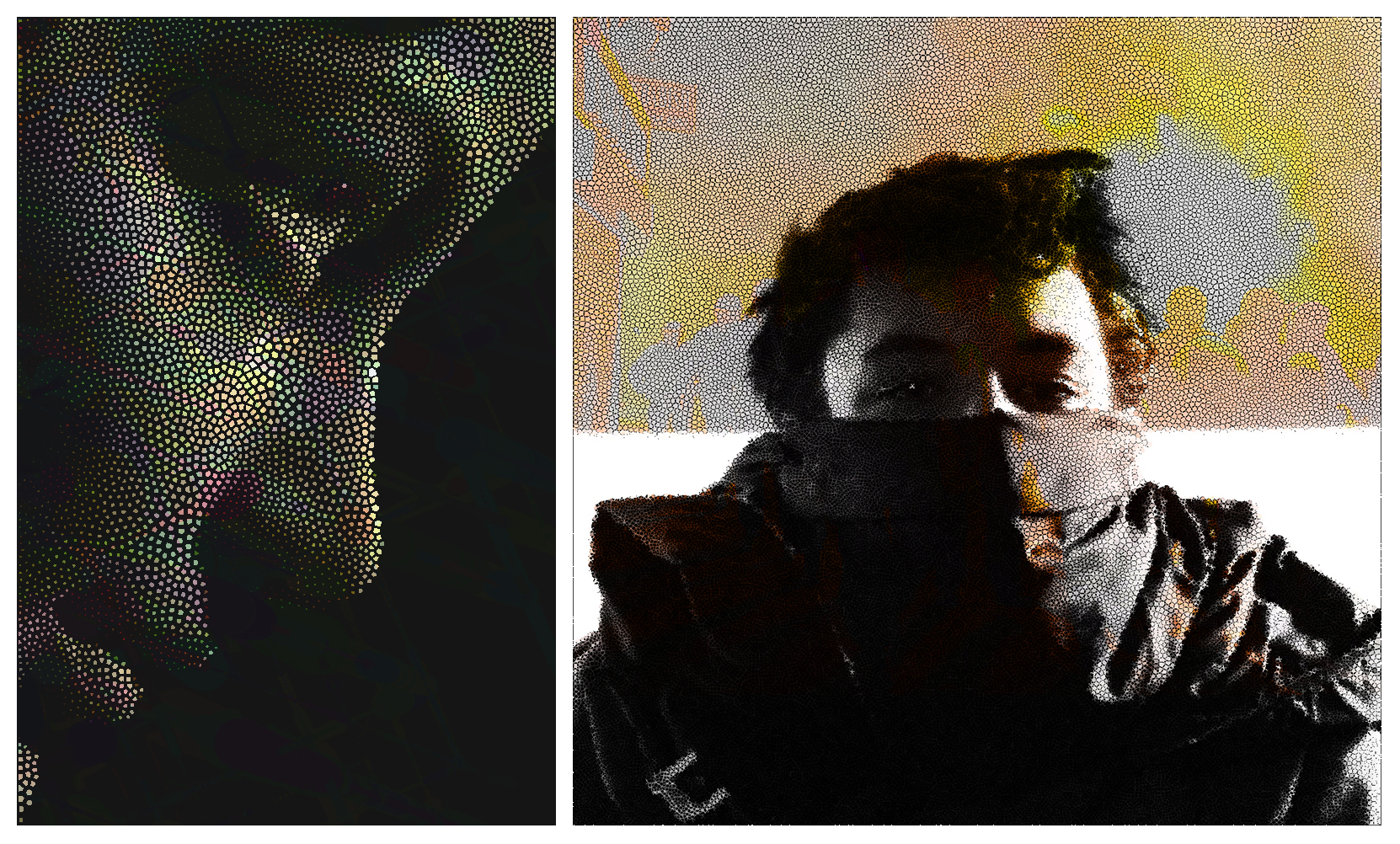

First tests I carried out using Voronoi diagrams (using my own photo on the right) with colour added in post

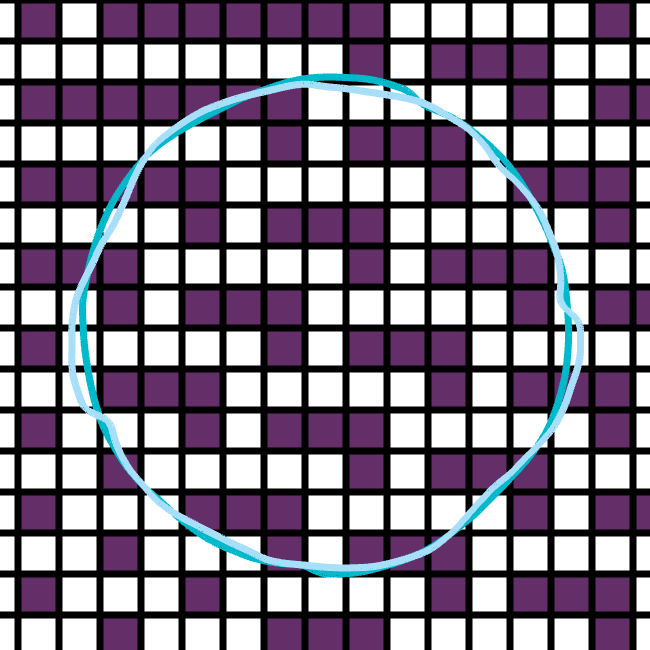

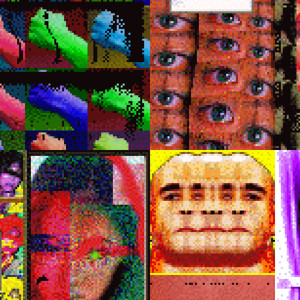

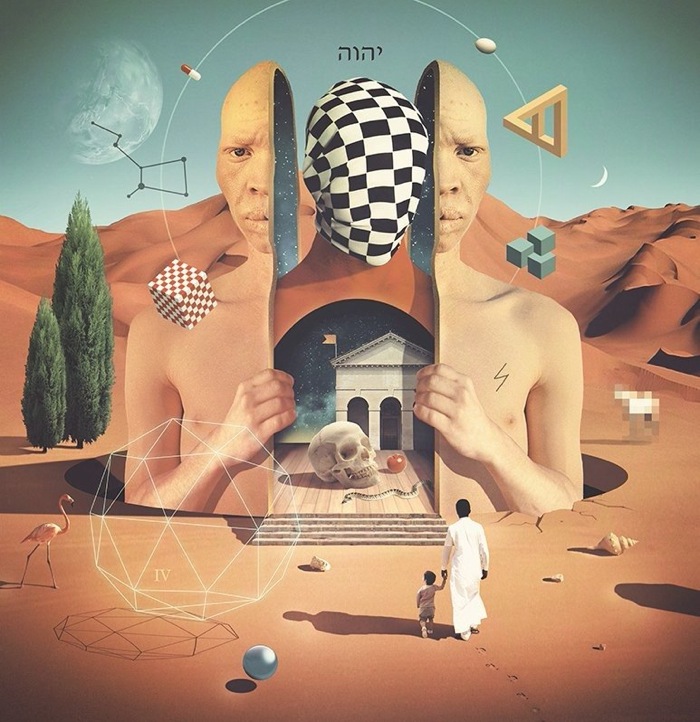

Scaling Voronoi cells by luminance in a JPEG (above) was the first and last experiment without challenges. The next implementation (below) was developed until the end and was the focus of difficulty throughout. Shown in the image, apart from Jim Carrey unveiling the truth of his fabricated reality in The Truman Show [9], is a function iterating over a 2D grid and deciding whether each cell should be drawn or divided into smaller copies of itself. The draw-or-divide decision is controlled by a brightness threshold that increases at every level, which the regions of the image have to beat. Crashes are likely when using such iterated function systems (IFS) without limiting the maximum number of divisions: a few levels too deep can produce a practically infinite number of circles (in my case several million, which was too much for an 8GB laptop).

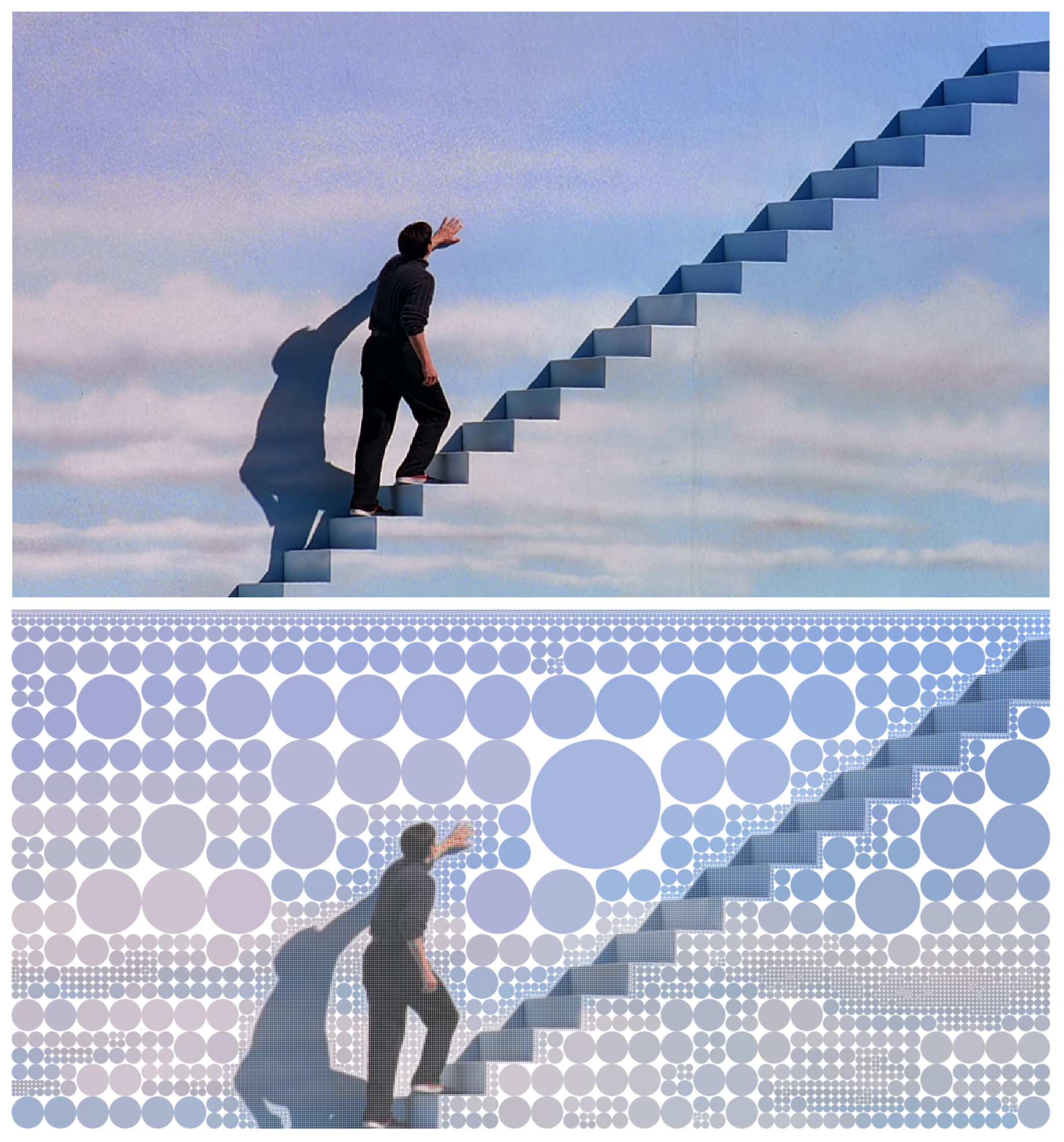

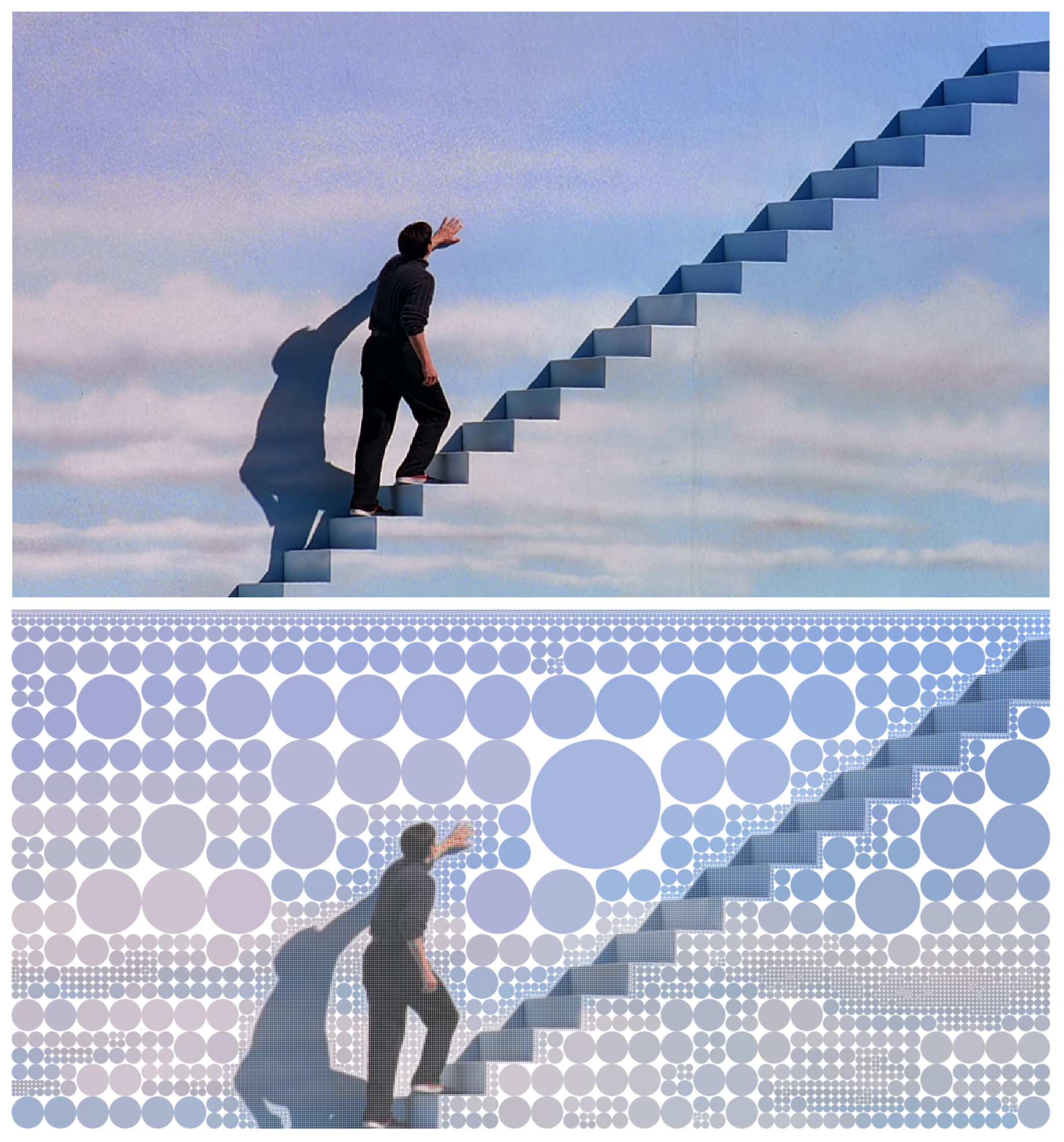

Dynamically thresholding cellular iteration using perceptual qualities (top: original frame from The Truman Show, bottom: transformed by Double Composure)
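The draw-or-divide function described above can be sketched as a quadtree recursion over a greyscale image. Two details are assumptions: 'beating' the threshold is taken to mean that a region's mean brightness exceeds it, and the threshold rises linearly (base + step * level). The maxLevel cap plays the role of the division limit that prevents the memory blow-up mentioned above; all names are hypothetical.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

struct Cell { double x, y, size; int level; };  // centre, diameter, depth

struct Gray {
    int width, height;
    std::vector<double> v;  // brightness in [0, 1], row-major
    double at(int x, int y) const { return v[static_cast<std::size_t>(y) * width + x]; }
};

// Mean brightness over the pixel square [x0, x0 + size) x [y0, y0 + size).
double meanBrightness(const Gray& img, int x0, int y0, int size) {
    double sum = 0.0;
    for (int y = y0; y < y0 + size; ++y)
        for (int x = x0; x < x0 + size; ++x) sum += img.at(x, y);
    return sum / (static_cast<double>(size) * size);
}

// Divide into four quarter-size copies while the region beats a threshold that
// rises by `step` per level; otherwise emit the region as a cell to draw.
// `maxLevel` caps recursion, so each start region yields at most 4^maxLevel cells.
void drawOrDivide(const Gray& img, int x0, int y0, int size, int level,
                  double base, double step, int maxLevel, std::vector<Cell>& out) {
    double threshold = base + step * level;
    bool divide = level < maxLevel && size >= 2 &&
                  meanBrightness(img, x0, y0, size) > threshold;
    if (!divide) {
        out.push_back({x0 + size / 2.0, y0 + size / 2.0, static_cast<double>(size), level});
        return;
    }
    int half = size / 2;
    for (int dy = 0; dy < 2; ++dy)
        for (int dx = 0; dx < 2; ++dx)
            drawOrDivide(img, x0 + dx * half, y0 + dy * half, half, level + 1,
                         base, step, maxLevel, out);
}

// Iterate over a grid of power-of-two `cellSize` squares covering the image.
std::vector<Cell> buildCells(const Gray& img, int cellSize, double base, double step, int maxLevel) {
    assert(cellSize > 0 && (cellSize & (cellSize - 1)) == 0);
    assert(img.width % cellSize == 0 && img.height % cellSize == 0);
    std::vector<Cell> cells;
    for (int y = 0; y < img.height; y += cellSize)
        for (int x = 0; x < img.width; x += cellSize)
            drawOrDivide(img, x, y, cellSize, 0, base, step, maxLevel, cells);
    return cells;
}
```

With a uniformly bright 4x4 image, a 4-pixel starting cell, base 0.5, step 0.2 and maxLevel 2, every region keeps dividing until the cap, giving 16 one-pixel cells; a black image gives a single cell.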

Using other shapes to the same effect was inspired by two well-documented areas of geometry: uniform tilings (repeating tiles on a plane) and rep-tiles (shapes that can be divided into smaller copies of themselves), since both describe identical shapes repeating indefinitely in scale and direction. Changing shapes while the application was running required polymorphic 'cells' and a new cell object structure. I reimplemented the draw-or-divide logic as a three-pass algorithm starting with cells on the deepest level. Later on I added 'divide modes' that enabled shapes to divide into different sets of offspring. Arrays of function pointers (with syntax as messy as the name suggests) made it possible to change divide modes while the app was running.
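Runtime-switchable divide modes can be illustrated with a table of plain function pointers. In this minimal sketch a triangle cell has two hypothetical divide modes based on standard rep-tile dissections: any triangle splits into four similar copies through its edge midpoints, and a right isosceles triangle splits into two similar halves. It uses free functions rather than the polymorphic cell classes described above, and all names are illustrative.

```cpp
#include <array>
#include <cassert>
#include <cstddef>
#include <vector>

struct Pt { double x, y; };
struct Tri { Pt a, b, c; };  // for bisectDivide, `a` is assumed to be the right angle

Pt mid(Pt p, Pt q) { return {(p.x + q.x) / 2.0, (p.y + q.y) / 2.0}; }

// Divide mode 0: any triangle splits into four similar copies via edge midpoints.
std::vector<Tri> midpointDivide(const Tri& t) {
    Pt ab = mid(t.a, t.b), bc = mid(t.b, t.c), ca = mid(t.c, t.a);
    return {{t.a, ab, ca}, {ab, t.b, bc}, {ca, bc, t.c}, {bc, ca, ab}};
}

// Divide mode 1: a right isosceles triangle splits into two similar halves along
// the altitude from its right angle; each child's right angle is listed first.
std::vector<Tri> bisectDivide(const Tri& t) {
    Pt m = mid(t.b, t.c);
    return {{m, t.a, t.b}, {m, t.c, t.a}};
}

// A table of function pointers lets the divide mode be chosen at runtime.
using DivideFn = std::vector<Tri> (*)(const Tri&);
const std::array<DivideFn, 2> kDivideModes = {&midpointDivide, &bisectDivide};

// Recursively divide `t` `depth` times using the selected mode.
std::vector<Tri> subdivide(const Tri& t, std::size_t mode, int depth) {
    assert(mode < kDivideModes.size() && depth >= 0);
    if (depth == 0) return {t};
    std::vector<Tri> out;
    for (const Tri& child : kDivideModes[mode](t)) {
        std::vector<Tri> grand = subdivide(child, mode, depth - 1);
        out.insert(out.end(), grand.begin(), grand.end());
    }
    return out;
}
```

Changing the mode index swaps the dissection without touching the recursion: two midpoint divisions give 16 cells, three bisections give 8.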

The next big development was a separate one-channel (greyscale) image to threshold the draw-or-divide logic, which I called the iteration depth map or 'imap'. It gave fine user control over cell resolution, as seen in the umbrella demo below, which used a simple radial gradient imap. I later built it into the 'iteration editor' with features for layer editing, including paint brush, fill and gradient (linear and radial) tools, blend modes (normal, addition and subtraction) and more.
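The imap itself is just a one-channel layer, so its editing tools reduce to simple per-pixel arithmetic. Here is a minimal sketch of a radial gradient generator and the three blend modes mentioned (normal, addition, subtraction), with results clamped to [0, 1]. The opacity parameter and the linear mapping from imap value to maximum division depth (maxDepthAt) are assumptions for illustration, not the iteration editor's actual code.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

// A one-channel layer with values in [0, 1], stored row-major.
struct Layer {
    int width, height;
    std::vector<float> v;
};

// Radial gradient: 1 at (cx, cy), falling linearly to 0 at `radius`.
Layer radialGradient(int w, int h, float cx, float cy, float radius) {
    assert(w > 0 && h > 0 && radius > 0.0f);
    Layer out{w, h, std::vector<float>(static_cast<std::size_t>(w) * h)};
    for (int y = 0; y < h; ++y)
        for (int x = 0; x < w; ++x) {
            float d = std::hypot(x - cx, y - cy);
            out.v[static_cast<std::size_t>(y) * w + x] = std::max(0.0f, 1.0f - d / radius);
        }
    return out;
}

enum class Blend { Normal, Add, Subtract };

// Composite `top` onto `base` with the given opacity, clamping results to [0, 1].
void blendInto(Layer& base, const Layer& top, Blend mode, float opacity = 1.0f) {
    assert(base.width == top.width && base.height == top.height);
    for (std::size_t i = 0; i < base.v.size(); ++i) {
        float b = base.v[i], t = top.v[i], r = b;
        switch (mode) {
            case Blend::Normal:   r = t; break;
            case Blend::Add:      r = b + t; break;
            case Blend::Subtract: r = b - t; break;
        }
        base.v[i] = std::clamp(b + (r - b) * opacity, 0.0f, 1.0f);
    }
}

// Hypothetical mapping: brighter imap pixels allow deeper division.
int maxDepthAt(const Layer& imap, int x, int y, int maxLevel) {
    float value = imap.v[static_cast<std::size_t>(y) * imap.width + x];
    return static_cast<int>(std::lround(value * maxLevel));
}
```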

Video experiments showed visual discontinuity in the form of cell flickering. In the umbrella demo below, this is a result of how colour is set: from a single pixel at the cell's centroid rather than from an average of the pixels in the cell's area. Visual discontinuity didn't end there, however; animating the imap caused cells to switch level instantaneously because there is nothing in between divisions (seen as the cells jumping in the 'h' demo below). To solve this I tested interpolating a rep-tile into its children with a 'floating point iteration' value. After implementing floating point iteration in the main application, changing the cell objects and the draw-or-divide algorithm, I was frustrated to see visual continuity break again when mapping over three cell layers to the imap (I didn't quite get the right relationship between the cell object's floating point values, the draw method and the cell container algorithm). Lacking knowledge of and experience with algorithms, I cut my losses and continued with my other ideas.

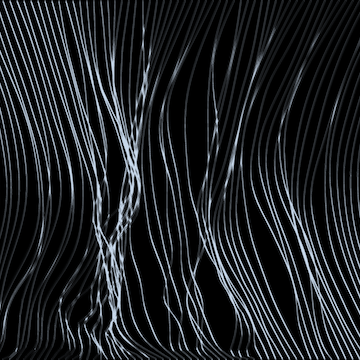

Dynamically controlling tile resolution in an 'imap' separate to the original media (left) and custom cell design with imap animation (right)
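One way to picture 'floating point iteration' is with a square cell instead of the project's rep-tiles: the integer part of the value gives the number of complete divisions, and the fractional part slides each child from its parent's bounds towards its own quadrant. This is a hypothetical sketch of the idea rather than the implementation described above; it addresses only geometry, not the colour flicker caused by centroid sampling.

```cpp
#include <cassert>
#include <cmath>
#include <vector>

struct Rect { double x, y, w, h; };

// Linear interpolation between two rectangles (t = 0 gives a, t = 1 gives b).
Rect lerp(const Rect& a, const Rect& b, double t) {
    return {a.x + (b.x - a.x) * t, a.y + (b.y - a.y) * t,
            a.w + (b.w - a.w) * t, a.h + (b.h - a.h) * t};
}

// The four quadrants a square cell divides into.
std::vector<Rect> quadrants(const Rect& r) {
    double hw = r.w / 2.0, hh = r.h / 2.0;
    return {{r.x, r.y, hw, hh}, {r.x + hw, r.y, hw, hh},
            {r.x, r.y + hh, hw, hh}, {r.x + hw, r.y + hh, hw, hh}};
}

// Cells for a fractional iteration value `f`: floor(f) whole divisions, then each
// child at the final level slides from its parent's bounds (t = 0) to its own
// quadrant (t = 1), so a small change in f gives a small change in geometry.
std::vector<Rect> cellsAt(const Rect& root, double f) {
    assert(f >= 0.0);
    int whole = static_cast<int>(std::floor(f));
    double t = f - whole;
    std::vector<Rect> cells{root};
    for (int i = 0; i < whole; ++i) {
        std::vector<Rect> next;
        for (const Rect& c : cells)
            for (const Rect& q : quadrants(c)) next.push_back(q);
        cells = next;
    }
    if (t == 0.0) return cells;
    std::vector<Rect> blended;
    for (const Rect& c : cells)
        for (const Rect& q : quadrants(c)) blended.push_back(lerp(c, q, t));
    return blended;
}
```

As f approaches 1 from below, the four blended children converge on the exact quadrants returned at f = 1, so animating the value no longer makes cells jump between levels.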

Visual continuity in the yin-yang demo below comes from cell state (instead of iteration layer) and the beautiful properties of the Taoist symbol of balance. Further experiments with draw modes (beyond solid polygons) took a lot of time, so I set about making a GUI custom cell designer. I prototyped a separate Bezier curve editor program which printed values to the console, to be copied into a special cell draw mode in the main app. The challenge required polar mapping of points to the rep-tile, which wasn't enough on its own because the order of coordinates reversed every iteration. Eventually, after changing the cell objects and divide functions to use polar coordinates too, it worked (demonstrated in the 'h' demo above). Another cell drawing idea used the principles of colour theory and the halftoning effect; I wanted to represent images using components of their dominant colours. To generate a dominant colour palette, I made a function interfacing a k-means example found online [11] with openFrameworks, taking an ofImage and the number of colours to return. Development moved on before much experimentation with the palette draw mode; however, a small sample based on the idea can be found further down. Like the divide modes I had previously implemented, cell draw modes can be changed at runtime using an array of function pointers.

Using brightness to geometrically interpolate the state of the yin-yang, the Taoist symbol of balance

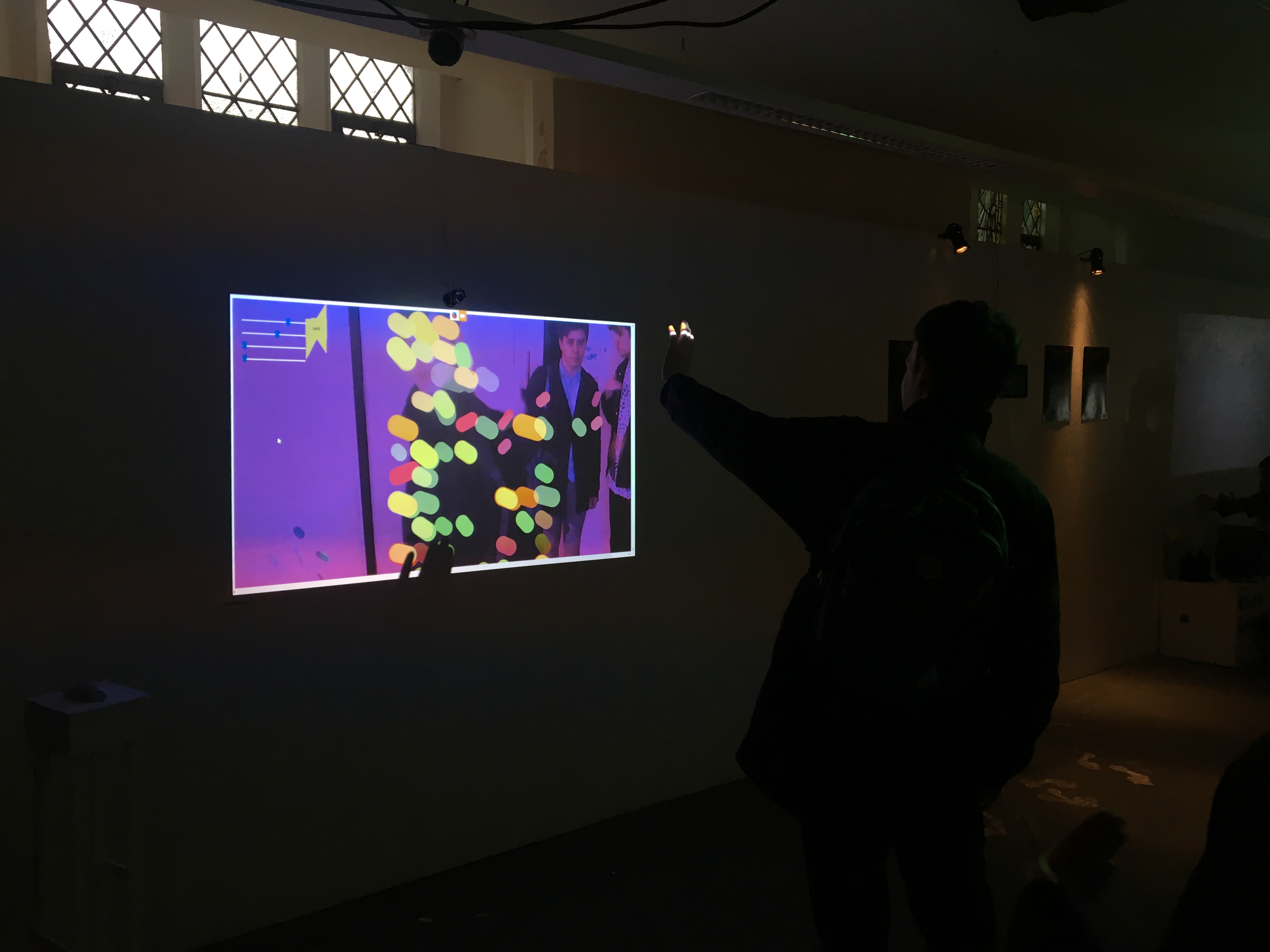

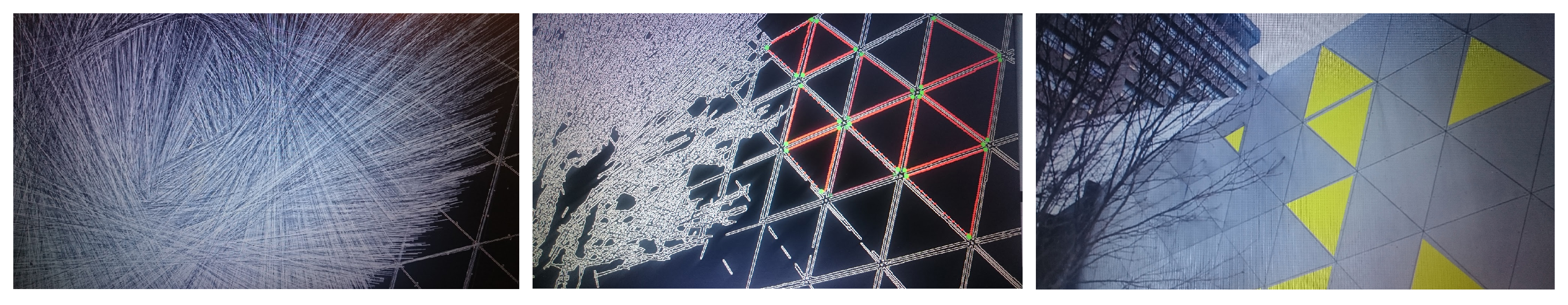
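The dominant colour palette step described above can be illustrated with Lloyd's k-means on RGB values. This is a minimal sketch, not the k-means example [11] the project actually interfaced with: pixels are assumed to be already extracted from the image (for instance from an ofImage) into a plain list, and the evenly spaced initialisation is an assumption chosen for reproducibility.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

struct Rgb { double r, g, b; };

double dist2(const Rgb& p, const Rgb& q) {
    double dr = p.r - q.r, dg = p.g - q.g, db = p.b - q.b;
    return dr * dr + dg * dg + db * db;
}

// Lloyd's k-means on pixel colours. Centres start at evenly spaced pixels (a simple
// deterministic choice; k-means++ seeding is a common alternative).
std::vector<Rgb> dominantColours(const std::vector<Rgb>& pixels, std::size_t k, int maxIter = 20) {
    assert(k > 0 && pixels.size() >= k);
    std::vector<Rgb> centres;
    for (std::size_t i = 0; i < k; ++i) centres.push_back(pixels[i * pixels.size() / k]);
    std::vector<std::size_t> label(pixels.size(), 0);
    for (int iter = 0; iter < maxIter; ++iter) {
        bool changed = false;
        // Assignment step: attach each pixel to its nearest centre.
        for (std::size_t p = 0; p < pixels.size(); ++p) {
            std::size_t best = 0;
            for (std::size_t c = 1; c < k; ++c)
                if (dist2(pixels[p], centres[c]) < dist2(pixels[p], centres[best])) best = c;
            if (iter == 0 || best != label[p]) changed = true;
            label[p] = best;
        }
        if (!changed) break;  // converged: assignments are stable
        // Update step: move each centre to the mean of its pixels (empty clusters stay put).
        std::vector<Rgb> sum(k, Rgb{0.0, 0.0, 0.0});
        std::vector<std::size_t> count(k, 0);
        for (std::size_t p = 0; p < pixels.size(); ++p) {
            Rgb& s = sum[label[p]];
            s.r += pixels[p].r; s.g += pixels[p].g; s.b += pixels[p].b;
            ++count[label[p]];
        }
        for (std::size_t c = 0; c < k; ++c)
            if (count[c] > 0)
                centres[c] = {sum[c].r / count[c], sum[c].g / count[c], sum[c].b / count[c]};
    }
    return centres;
}
```

For an image of mostly red and blue pixels with k = 2, the two returned centres land near pure red and pure blue.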

Augmented reality (AR) was the last challenge in integrating the cells into images. I started by specifying two tasks within the general scope of AR: image registration (matching the cells to the scene) and homography (the actual perspective transformation). A test transforming one image onto another succeeded after I realised the importance of consistent ordering (correspondence) between each image's corners when using OpenCV's findHomography function.
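Why corner order matters is easiest to see from the direct linear transform (DLT) behind four-point homography estimation. The sketch below fixes the bottom-right entry of H to 1 and solves the resulting 8x8 linear system by Gauss-Jordan elimination; it is an illustrative stand-in for OpenCV's findHomography, and the names homographyFrom4 and applyH are hypothetical. Each src[i] is paired with dst[i], so reordering one set of corners produces a different (often twisted) transform.

```cpp
#include <array>
#include <cassert>
#include <cmath>
#include <utility>

using Point = std::array<double, 2>;
using Mat3 = std::array<double, 9>;  // row-major, h[8] fixed to 1

// Solve for H mapping src[i] -> dst[i] for i = 0..3 (corner order must correspond).
Mat3 homographyFrom4(const std::array<Point, 4>& src, const std::array<Point, 4>& dst) {
    double A[8][9] = {};  // augmented system [M | rhs]
    for (int i = 0; i < 4; ++i) {
        double x = src[i][0], y = src[i][1], u = dst[i][0], v = dst[i][1];
        double r1[9] = {x, y, 1, 0, 0, 0, -u * x, -u * y, u};
        double r2[9] = {0, 0, 0, x, y, 1, -v * x, -v * y, v};
        for (int j = 0; j < 9; ++j) { A[2 * i][j] = r1[j]; A[2 * i + 1][j] = r2[j]; }
    }
    // Gauss-Jordan elimination with partial pivoting.
    for (int col = 0; col < 8; ++col) {
        int pivot = col;
        for (int r = col + 1; r < 8; ++r)
            if (std::fabs(A[r][col]) > std::fabs(A[pivot][col])) pivot = r;
        assert(std::fabs(A[pivot][col]) > 1e-12 && "degenerate corners (e.g. three collinear)");
        for (int j = 0; j < 9; ++j) std::swap(A[col][j], A[pivot][j]);
        for (int r = 0; r < 8; ++r) {
            if (r == col) continue;
            double f = A[r][col] / A[col][col];
            for (int j = col; j < 9; ++j) A[r][j] -= f * A[col][j];
        }
    }
    Mat3 h{};
    for (int i = 0; i < 8; ++i) h[i] = A[i][8] / A[i][i];
    h[8] = 1.0;
    return h;
}

// Apply H to a point, including the perspective divide by w.
Point applyH(const Mat3& h, const Point& p) {
    double w = h[6] * p[0] + h[7] * p[1] + h[8];
    return {(h[0] * p[0] + h[1] * p[1] + h[2]) / w, (h[3] * p[0] + h[4] * p[1] + h[5]) / w};
}
```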

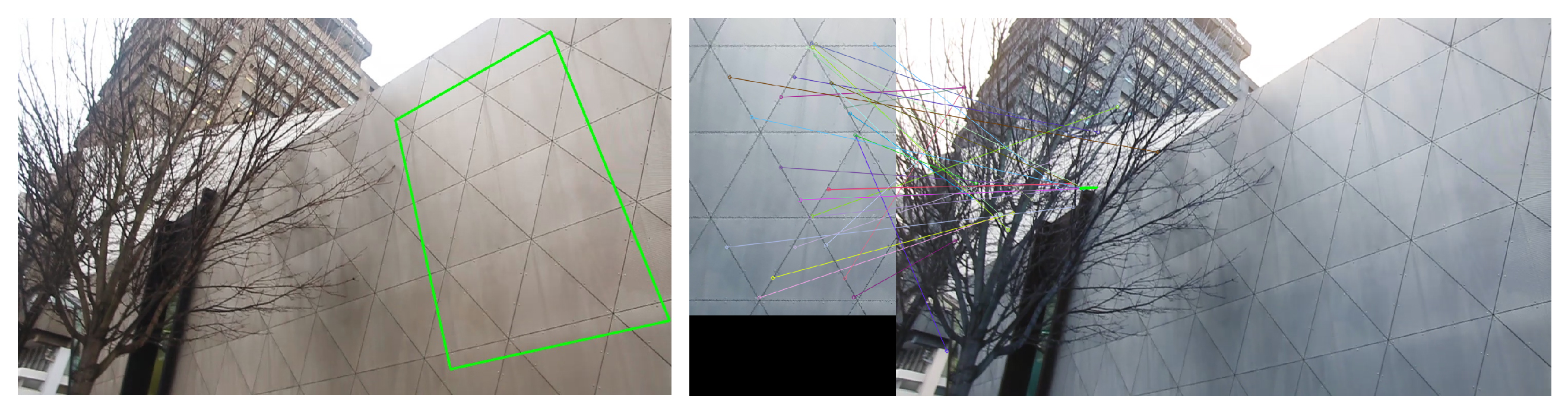

Manual surface selection and homography (left) and the first automatic matching attempt (right)

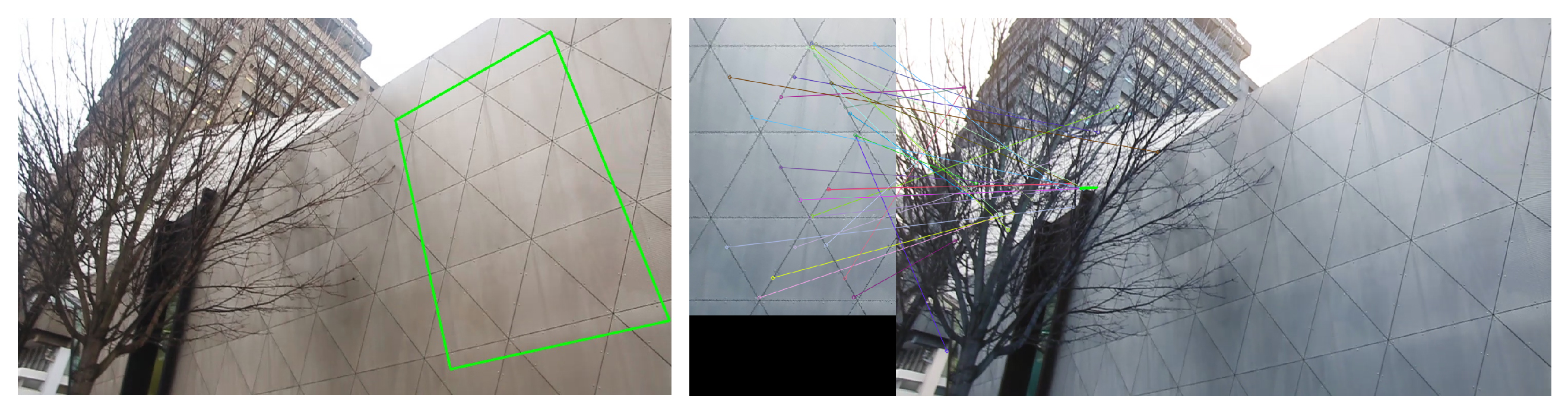

That manual experiment would produce AR if repeated for every frame of a video, so I found a tutorial [12] that automated it. After replacing SURF with ORB (SURF was removed from OpenCV for intellectual property reasons), I got the unsuccessful result with lots of lines shown below. The ORB feature detector picked up so many outliers that the two images couldn't be matched in the next step. My hope that OpenCV's brute-force matcher would be less sensitive to noise than FLANN was dashed (see the result with even more lines), so I then tried reducing the number of features by only detecting them from shapes and lines. Canny edge detection and HoughLines successfully found the triangular panels (below), but closer inspection showed inaccuracy and instability that would be detrimental to effective AR.

Snapshots of progress towards the augmented reality feature
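ORB produces 256-bit binary descriptors that are compared by Hamming distance, and a brute-force matcher simply compares every query descriptor with every train descriptor. The sketch below illustrates that idea with std::bitset and adds a cross-check (keep a pair only when each descriptor is the other's nearest neighbour) as one common way of discarding some outliers; OpenCV's BFMatcher offers a similar crossCheck option. This is not OpenCV's implementation, and the function names are hypothetical.

```cpp
#include <bitset>
#include <cassert>
#include <cstddef>
#include <limits>
#include <vector>

using Descriptor = std::bitset<256>;  // ORB descriptors are 256 bits

struct Match { std::size_t query, train, distance; };

// Index of the descriptor in `set` closest to `d` by Hamming distance.
std::size_t nearest(const Descriptor& d, const std::vector<Descriptor>& set, std::size_t& dist) {
    assert(!set.empty());
    std::size_t best = 0;
    dist = std::numeric_limits<std::size_t>::max();
    for (std::size_t i = 0; i < set.size(); ++i) {
        std::size_t h = (d ^ set[i]).count();  // number of differing bits
        if (h < dist) { dist = h; best = i; }
    }
    return best;
}

// Brute-force matching with a cross-check and a maximum allowed distance.
std::vector<Match> crossCheckMatch(const std::vector<Descriptor>& query,
                                   const std::vector<Descriptor>& train,
                                   std::size_t maxDistance) {
    std::vector<Match> matches;
    for (std::size_t q = 0; q < query.size(); ++q) {
        std::size_t dist = 0, backDist = 0;
        std::size_t t = nearest(query[q], train, dist);
        if (nearest(train[t], query, backDist) == q && dist <= maxDistance)
            matches.push_back({q, t, dist});
    }
    return matches;
}
```

Filtering of this kind reduces obvious mismatches but cannot remove geometrically inconsistent ones, which is why a robust model-fitting step usually follows.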

On further investigation I saw suggestions that parts of OpenCV were outdated and poorly implemented. I also wanted to avoid parameter dependence so as to solve the task more elegantly (successful triangle detection required multiple arguments in ORB, Canny and Hough), so I looked into CV implementations elsewhere. Dozens of 'simultaneous localisation and mapping' (SLAM) and 'structure from motion' (SfM) libraries looked suitable and generally did not require parameters (some use camera properties like the 'principal point', apparently found by pointing a laser down the sensor [13]). I looked for monocular (single camera) solutions for RGB (not RGB-D) data, finding ORB-SLAM. After failing to link the external library, I realised that the 'simultaneous' aspect of SLAM was a drawback in my case anyway: robots and self-driving vehicles need realtime safety, whereas my priority is precision.

The first apparent success in linking external libraries was with coherent point drift (CPD), a parameter-free point set registration algorithm. With help on the openFrameworks forums [14] and from the library's author on its Gitter chatroom, I ran my test. The result was empty, however, leaving both myself and the library's author confused (I'm sure it was my implementation).

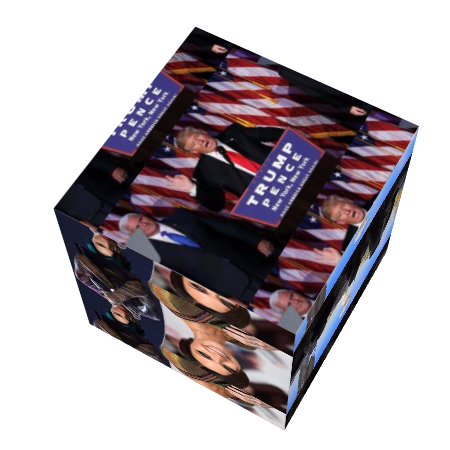

In this video the moving traffic has been picked out automatically by the motion tracking feature and transformed

With a stroke of luck I came across openMVG, which implements ACRANSAC: a parameter-free (a contrario) version of RANSAC, the robust model-fitting method I had previously come across in OpenCV, which can, for example, fit lines in a role similar to Hough. Kindly helped by the library's author [15], I was able to convert types from openFrameworks to OpenCV to Eigen to openMVG. I then made a function interfacing their 'robust homography' example, which uses ACRANSAC, and quickly tested it, producing the video above.
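For reference, plain RANSAC line fitting can be sketched as follows: repeatedly fit a line through two randomly chosen points and keep the candidate with the most inliers. The fixed threshold and iteration count here are exactly the parameters ACRANSAC avoids by selecting the threshold with an a contrario criterion; this sketch does not implement that part, and ransacLine is a hypothetical name.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <random>
#include <vector>

struct P2 { double x, y; };
// Line in normal form: a*x + b*y + c = 0 with a^2 + b^2 = 1.
struct Line { double a, b, c; };

// Plain RANSAC with a fixed inlier `threshold` and a fixed number of iterations.
Line ransacLine(const std::vector<P2>& pts, double threshold, int iterations,
                std::size_t& bestInliers, unsigned seed = 42) {
    assert(pts.size() >= 2 && threshold > 0.0 && iterations > 0);
    std::mt19937 rng(seed);
    std::uniform_int_distribution<std::size_t> pick(0, pts.size() - 1);
    Line best{0.0, 1.0, 0.0};
    bestInliers = 0;
    for (int it = 0; it < iterations; ++it) {
        const P2& p = pts[pick(rng)];
        const P2& q = pts[pick(rng)];
        double dx = q.x - p.x, dy = q.y - p.y, len = std::hypot(dx, dy);
        if (len < 1e-12) continue;  // same or coincident points define no line
        Line l{-dy / len, dx / len, 0.0};
        l.c = -(l.a * p.x + l.b * p.y);
        std::size_t inliers = 0;
        for (const P2& r : pts)
            if (std::fabs(l.a * r.x + l.b * r.y + l.c) <= threshold) ++inliers;
        if (inliers > bestInliers) { bestInliers = inliers; best = l; }
    }
    return best;
}
```

In practice the winning line is usually refined with a least-squares fit to its inliers; the same sample-and-score loop generalises to homographies by sampling four correspondences instead of two points.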

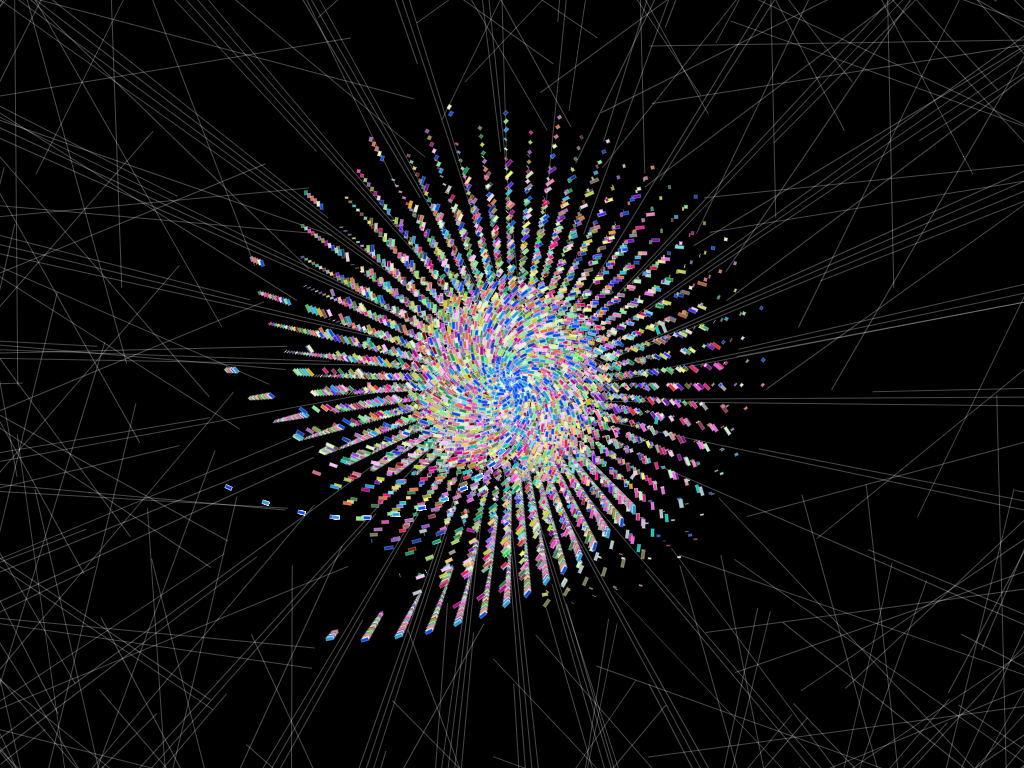

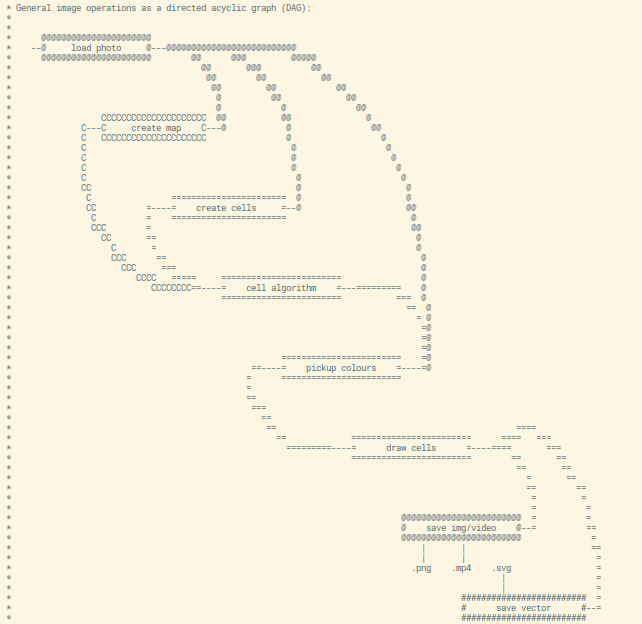

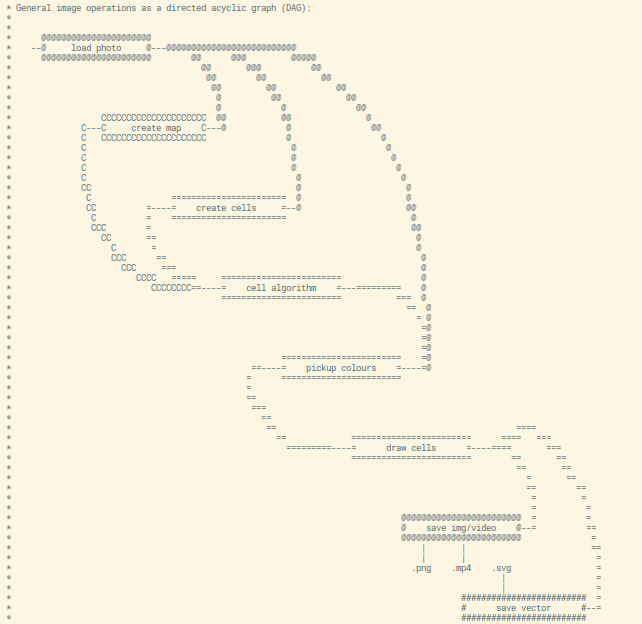

Schematic of my 'directed acyclic graph' design (later features maintained the topology and acyclicity)

The last stage involved implementing all the best developments into the main app. The cell and imap editors were already collated because of earlier problems trying to develop, implement and maintain everything simultaneously. The design was based on non-linear editing systems (NLEs), which follow directed acyclic graphs (DAGs). Shown above, each node represents a function and each edge represents data being passed on. The encapsulation made adding features much more manageable, but in the last weeks I was faced with a personal issue not planned for in the contingencies, which set me back two weeks. Some bugs started occurring and were very difficult to identify due to my use of raw pointers for the cell structure and elsewhere, further complicated by the complexity of the application. As a last resort I branched off old commits, consequently losing progress because the bugs hadn't been noticed right away. I also tried using smart pointers, const-correctness and unordered_set (with custom hash and equality functions) in new cell structures, but ran out of time before finishing.
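A node graph like this can be evaluated in dependency order with a topological sort. The sketch below uses Kahn's algorithm, one standard choice; it assumes nodes are numbered and that edges[u] lists the nodes consuming u's output. The function name and the node numbering in the usage note are hypothetical, not the project's actual graph.

```cpp
#include <cassert>
#include <cstddef>
#include <queue>
#include <vector>

// Kahn's algorithm: return an evaluation order in which every node runs after
// all of its inputs, or an empty vector if the graph contains a cycle.
std::vector<std::size_t> evaluationOrder(const std::vector<std::vector<std::size_t>>& edges) {
    std::vector<std::size_t> indegree(edges.size(), 0);
    for (const auto& outs : edges)
        for (std::size_t v : outs) {
            assert(v < edges.size());
            ++indegree[v];
        }
    std::queue<std::size_t> ready;  // nodes whose inputs are all available
    for (std::size_t u = 0; u < edges.size(); ++u)
        if (indegree[u] == 0) ready.push(u);
    std::vector<std::size_t> order;
    while (!ready.empty()) {
        std::size_t u = ready.front();
        ready.pop();
        order.push_back(u);
        for (std::size_t v : edges[u])
            if (--indegree[v] == 0) ready.push(v);
    }
    if (order.size() != edges.size()) return {};  // cycle detected
    return order;
}
```

For example, with nodes 0 (load media), 1 (imap editor), 2 (cell editor), 3 (compose) and 4 (AR), the edges {{1, 2}, {3}, {3}, {4}, {}} yield the order 0, 1, 2, 3, 4, while a two-node cycle yields an empty result. Keeping the graph acyclic is what guarantees such an order exists.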

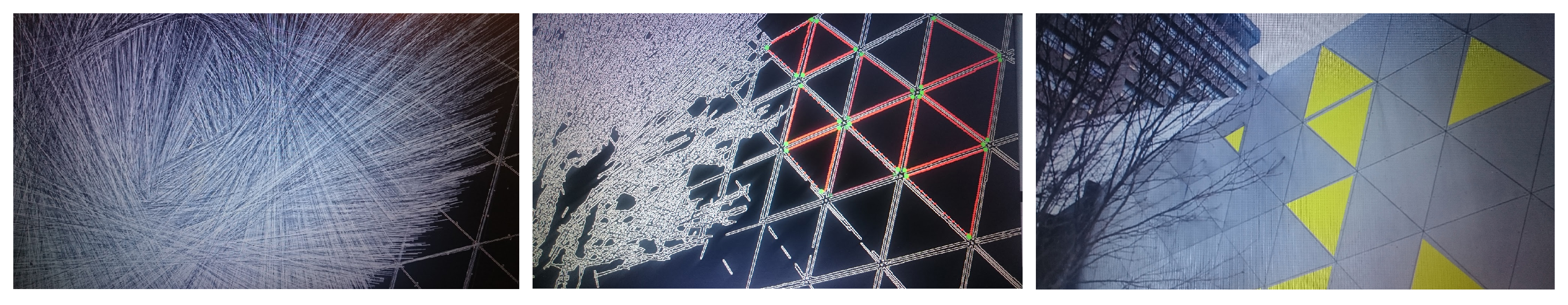

Other images and video produced during the project

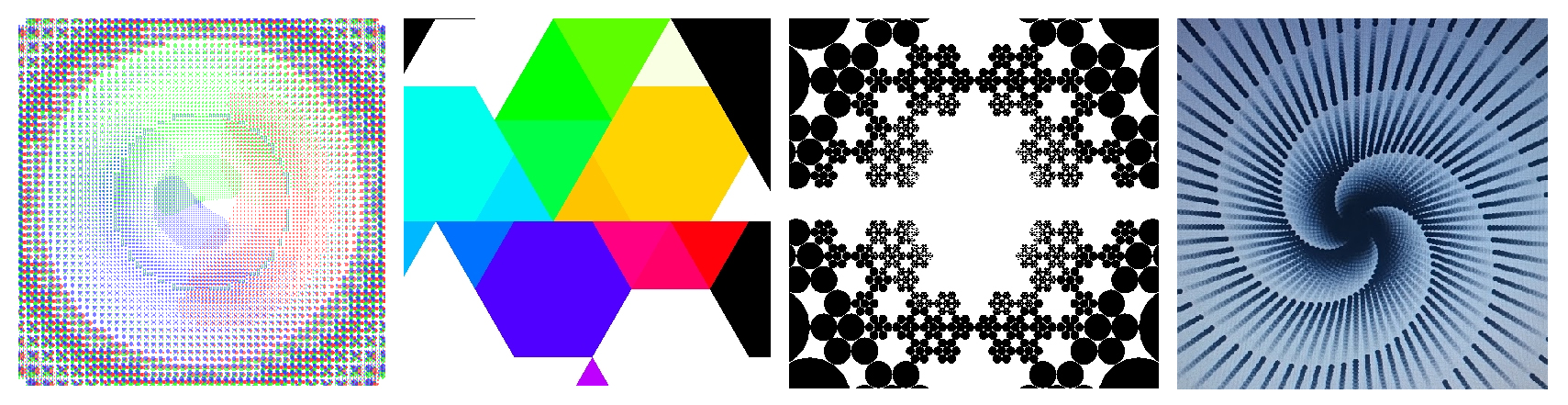

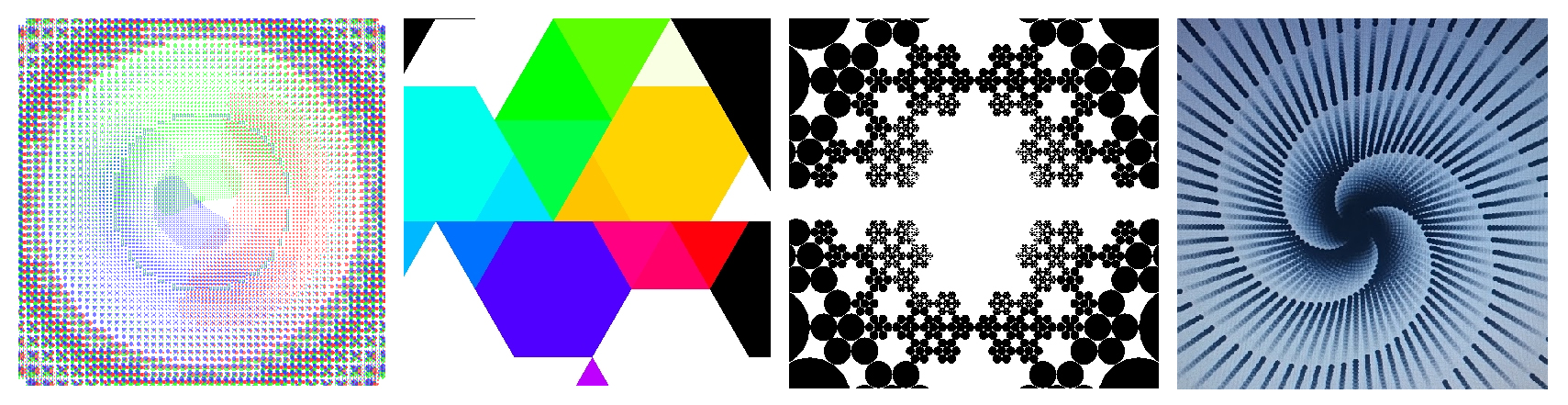

From left to right: dominant colour composition draw mode, random divide mode, optimum circle packing, logarithmic spiral

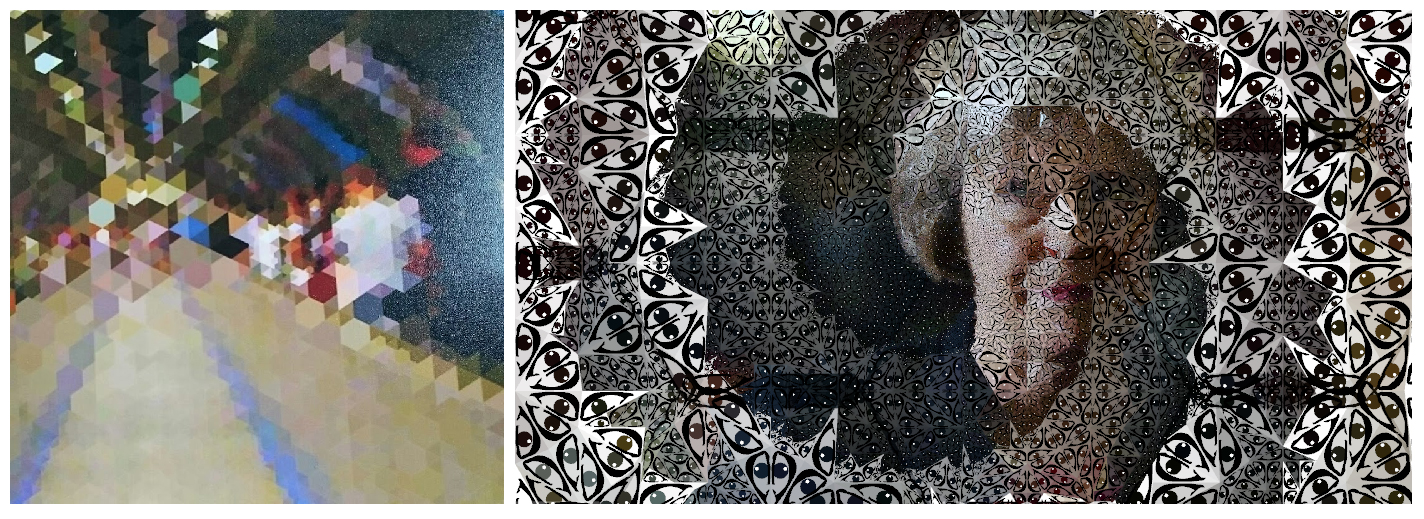

London Underground logo inspired draw mode (left) and random draw mode inspired London Underground (right)

Close-up of the above image in print (left) and custom Bezier draw mode with descending iteration spiral (right)

Testing AR robustness with panning, arc and yaw, and handheld movement clips

Evaluation

My major learning point is that I was too ambitious, which led to me running out of time. Consequently, the current version of Double Composure does not yet incorporate some of the promising ideas that I experimented with. However, I do believe I generated some interesting effects that are valuable both artistically and technically.

In total I completed 14 tests: basic stippling/image analysis, image-based iterated function system (IFS), vector saving, rep-tile geometry, video saving, iteration depth mapping, shader video playback, dominant colour palette generation, composite cell design (yin-yang, TfL), custom cell design (Bezier curve editor), polymorphic rep-tile division, floating point cell iteration/interpolation, shape detection and video tracking (augmented reality). Many thousands of lines of code were split between 17 repositories and over 250 commits, with over half of the commits in the main application.

Disappointingly, several proof-of-concept features were not implemented in the main program in time, notably custom cell design and floating point iteration. Custom cell design would have given much more creative control at runtime; currently only simple shapes are produced by the available drawing modes. Floating point iteration solved visual discontinuity in videos and animation; without it, the illusion of the cells being real is broken by instantaneous changes in cell colour and size.

Lack of time meant that I was unable to take forward some promising concepts aimed at diversifying the results. Generative line drawing would have created a totally different aesthetic from the cells. Dynamic slit-scanning would have used the iteration map for temporal video warping. Third-party software integration would have combined automatic beat detection and video timeline markers to edit a sort of generative film.

The most crucial test was the image-thresholding IFS, which inspired many further developments. That concept evolved into the first of the three main features (the cell editor, the imap editor and augmented reality). The iteration map editor extended my original intention of combining data by implementing a full (if not quite Photoshop-level) graphical editor to control cell resolution. Augmented reality was the most extensive area of development, taking hundreds of hours of research and experimentation to realise. It paid off with flawless tracking in the results and a personal element of nostalgia [16].

A final comment is that this project combines elements of experimentation (as I learnt on my art foundation course) and goal-directed software development. I feel that I have not yet fully worked out how to combine these two methodologies to achieve a result that is simultaneously creative and goal-directed. This is an area I hope to improve in, particularly through my summer placement with Random International.

References

[2] Boucsein, W., 2012. Electrodermal Activity. 2nd ed. Springer.

[10] Vimeo, 2017. Contort on Vimeo. Available at: https://vimeo.com/87452642 [Accessed 23 May 2017].

External code base

[2] OpenCV 2.4.1

[3] openMVG