Project: Herald

by: Joshua Hodge & Patricio Ordonez

Git Repository: http://gitlab.doc.gold.ac.uk/jhodg011/ourAwesomeProject

WHAT IS PROJECT: HERALD?

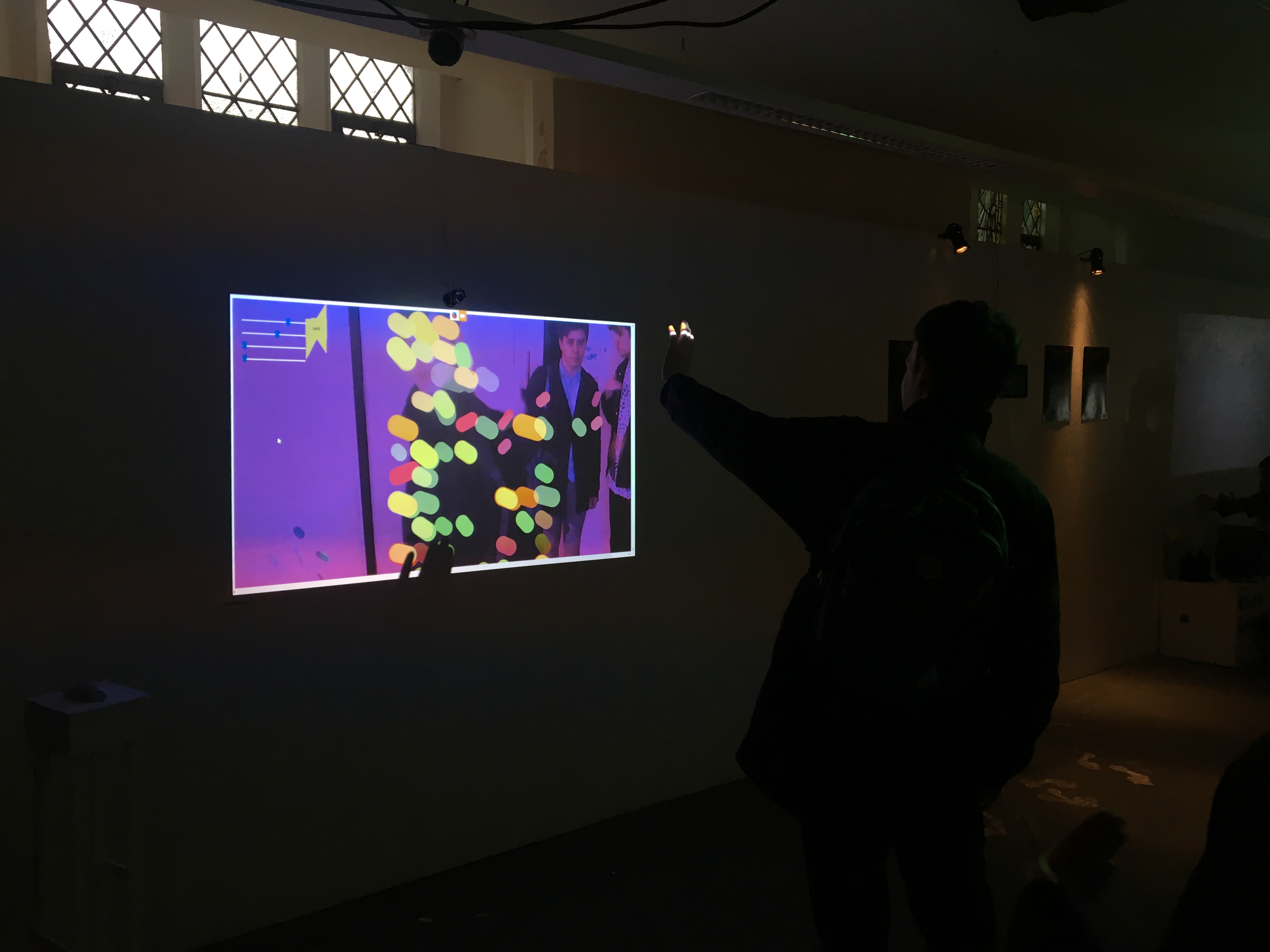

Project Herald is a musical instrument designed specifically to be played with one hand. It is aimed at, but not limited to, people whose physical limitations prevent them from playing a two-handed instrument. Herald uses machine learning to let users express themselves musically without any prior musical training, and it is designed to be played with little or no instruction.

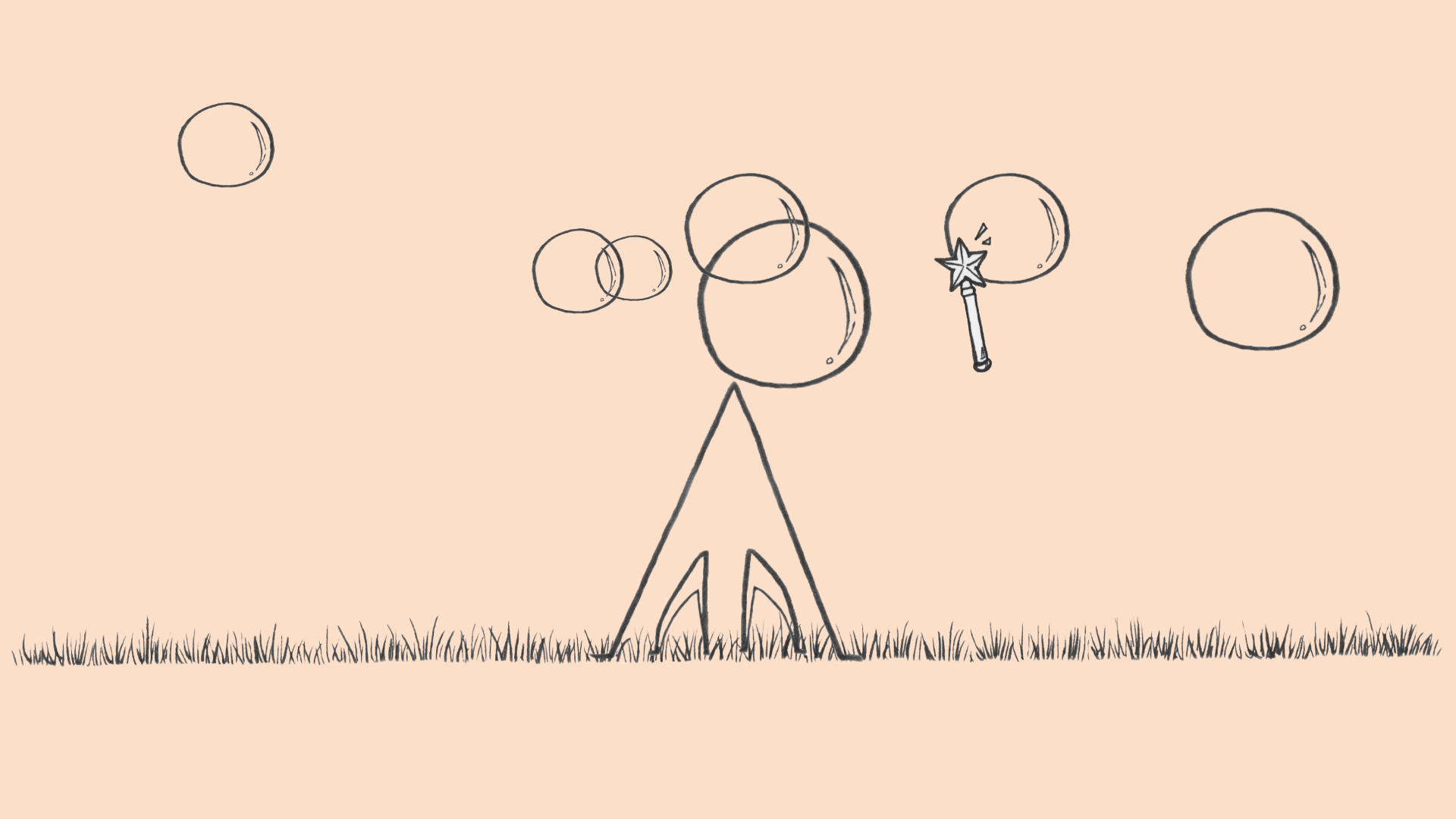

Once a trainer has prepared the instrument, the user plays by simply hovering one hand (or another limb) above one of the four player nodes and moving freely between them, which can change the speed or sound of the instrument. Removing the limb from the nodes entirely stops the notes. Because the user never has to press down on keys or strings, the focus is on expressivity rather than technique.

One may ask, “What notes does the instrument play, and how does it know which note to play next?” The notes are determined by analyzing a MIDI file, which is like sheet music for a computer: it tells the computer which notes of a song to play, in what order, and for how long. The instrument uses the MIDI file to generate random notes that follow the tendencies of the analyzed song, producing melodies that are similar to the original but not exactly the same.

WHO IS PROJECT: HERALD FOR?

When considering the design and functionality of Project: Herald, we knew that we needed to consult experts to learn how the instrument could be useful to people with disabilities. In retrospect, building around one user or a specific group might have provided different, and possibly better, insights. However, we chose to focus on creating a very basic framework that we could present to experts and then tailor, in both design and functionality, to a target group or even a single user.

SPEAKING WITH THE EXPERTS

In our research we consulted several experts to learn which design would work best for Project: Herald and which functional considerations we should take into account.

First, we consulted Pedro Kirk, a PhD researcher at Goldsmiths University studying digital and musical therapies for stroke rehabilitation. Pedro was very encouraged by our ideas and suggested letting a stroke rehabilitation group test the instrument, using a questionnaire to record the results. He also raised the possibility of combining his PhD work with our third-year project if we felt it would suit.

Another expert we consulted was Rebeka Bodak, PhD Mobility Fellow at Aarhus University in Denmark, who specializes in applications of audio-motor coupling and music therapy for stroke recovery. In a Skype call, Rebeka saw potential in the project but said that we were at a critical crossroads: we needed to decide whether the instrument is designed as an outlet for expressivity or whether its main function is rehabilitative, which would require a different approach from our current trajectory.

Finally, we consulted Marc Barnacle, head of TIME (Therapy in Musical Expression), a music therapy group based in Essex. Marc and his colleagues were very excited about the instrument and also saw its potential: having already looked at alternatives on the market, they could see ways in which Project: Herald was different.

They made several suggestions that we found useful to consider for later development of the instrument. One interesting suggestion was that the system could have preset chords, as on a guitar, letting the user strum without the technical labor of learning to play traditionally. Another good suggestion was to add amplitude control to allow dynamic expression, since the instrument currently plays at a single fixed volume. A further suggestion concerned the experience itself. They see the best results when users feel they are producing melodies harmonically related to the songs played by the hosts, and they recommended keeping the instrument as minimal as possible, directing attention only towards the nodes and moving the circuit board off the top of the instrument, where it could distract the user.

POPULAR INTERFACES FOR DISABLED USERS

In our research we considered the advantages and drawbacks of two of the best-known instruments designed for disabled people: Soundbeam and Skoog.

Soundbeam is a comprehensive solution that offers a variety of ways to interact musically, using ultrasonic sensors to detect motion through interruptions in the sensor beam and translating these inputs into sound. This “sensor interruption” served as an inspiration for Project: Herald; however, our conversations with experts revealed several drawbacks to Soundbeam. The first was price. According to the Soundbeam 2016 price list, an entry-level package costs over £2,000.00, which is out of the price range of most music therapy groups (Soundbeam, 2016). One of the experts also told us that the beam sensors sit on mic stands, which led to confusion: users tried to sing into the sensor, mistaking it for a microphone.

Skoog is another popular simplified musical interface designed for disabled people. It is a soft foam cube with a large sensor on each side except the bottom, and it responds to touch and squeeze. It is fully programmable through an accompanying app. However, some of the experts we consulted felt that the Skoog was too difficult to customize and amounted to a cube that could create only five sounds, which did not allow for a long-lasting experience.

Bearing this feedback in mind, we approached the project with two objectives. First, we wanted to emphasize simplicity by making the instrument playable with little or no musical training. We envisioned placing the instrument in front of a user without instruction and letting them explore its possibilities.

Our second objective was to create an environment designed for a deep user experience. We researched several methods for tracking user interaction, including tongue, finger, and brain tracking. We concluded that, given our time and financial constraints, our best option was to build the interface around a low-cost circuit board such as an Arduino or Raspberry Pi. We decided to design the instrument to be played with one limb, without needing individual fingers to play individual notes.

HARDWARE DESIGN

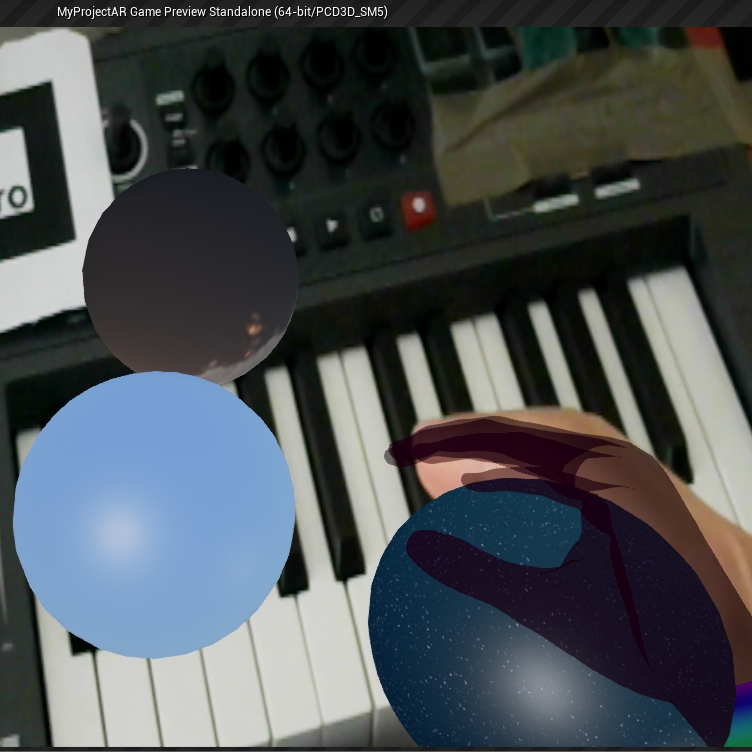

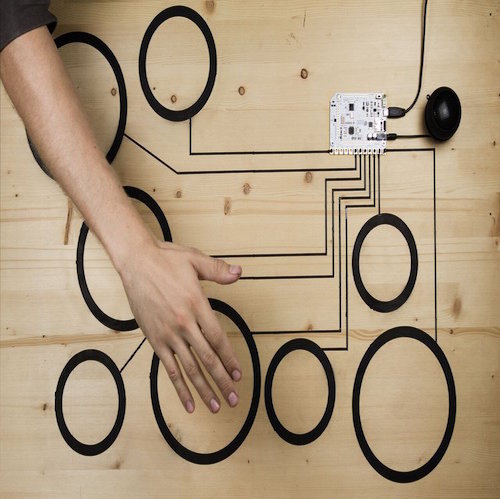

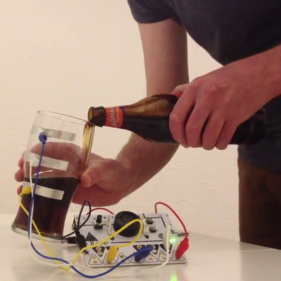

When considering a starting point for Project: Herald, we found the Touch Board, an Arduino-compatible board created by Bare Conductive. The board supports capacitive sensing, which allowed us to go beyond simple on/off MIDI control messages by measuring how close the user’s hand is to each of the instrument’s nodes.

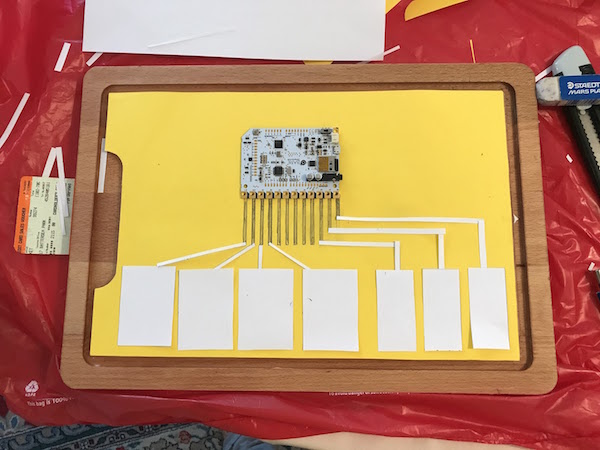

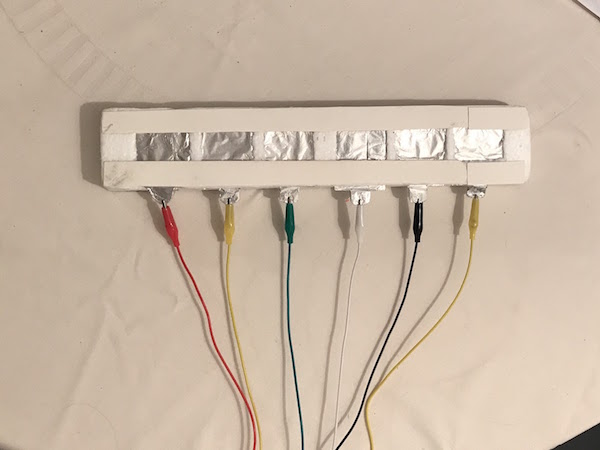

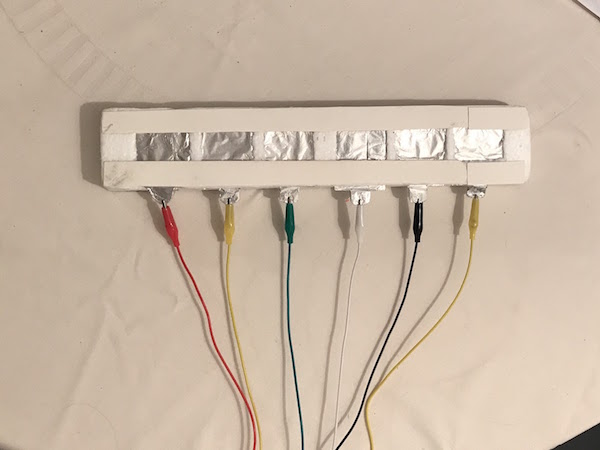

After developing the board’s initial functionality and testing extensively with pieces of foil as conductive sensors, we built the first prototype from a piece of polystyrene with inserted cardboard pieces coated in kitchen foil. Each piece was connected to the Touch Board with alligator clips. Although it worked as expected, the design was light and impractical to transport.

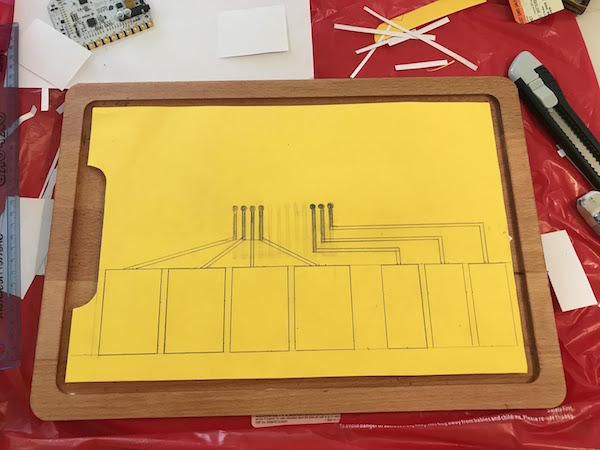

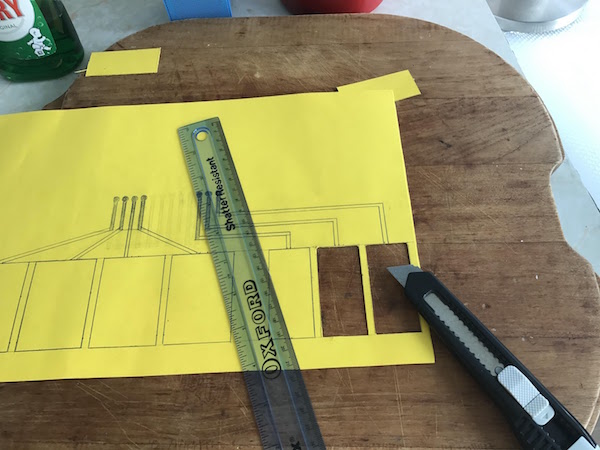

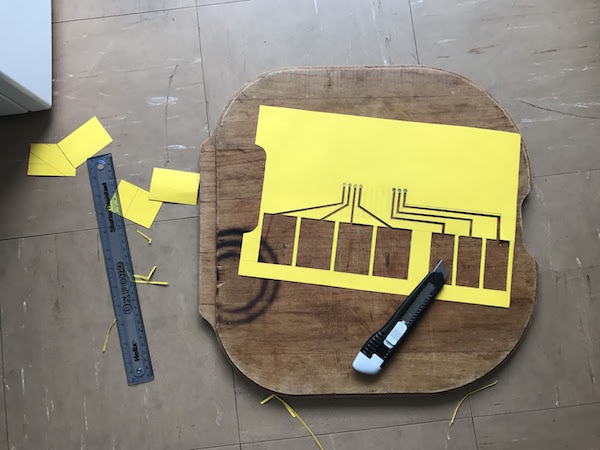

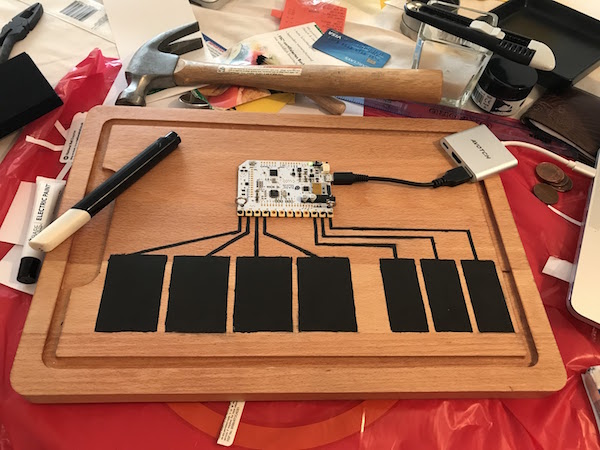

Drawing inspiration from other instrument builders such as Human Instruments (Humaninstruments.co.uk, 2017), and combining this with the expert feedback described above, we decided that a flat design would work best for now, as the user needs only a minimal range of motion to play. We decided that Bare Conductive’s conductive paint, which can be applied to any surface and serves as a sensor once connected to the board, would work well on a wooden board, giving a solid, clean, and robust result. To build it, we first drew a template of the design and then cut the template out to create a stencil.

Once the stencil was ready, we painted the board, making sure that the circuit connections were isolated from one another. After painting, we screwed the Touch Board to the wooden panel, connected each node to the corresponding electrode, and covered the board with a protective silicone case.

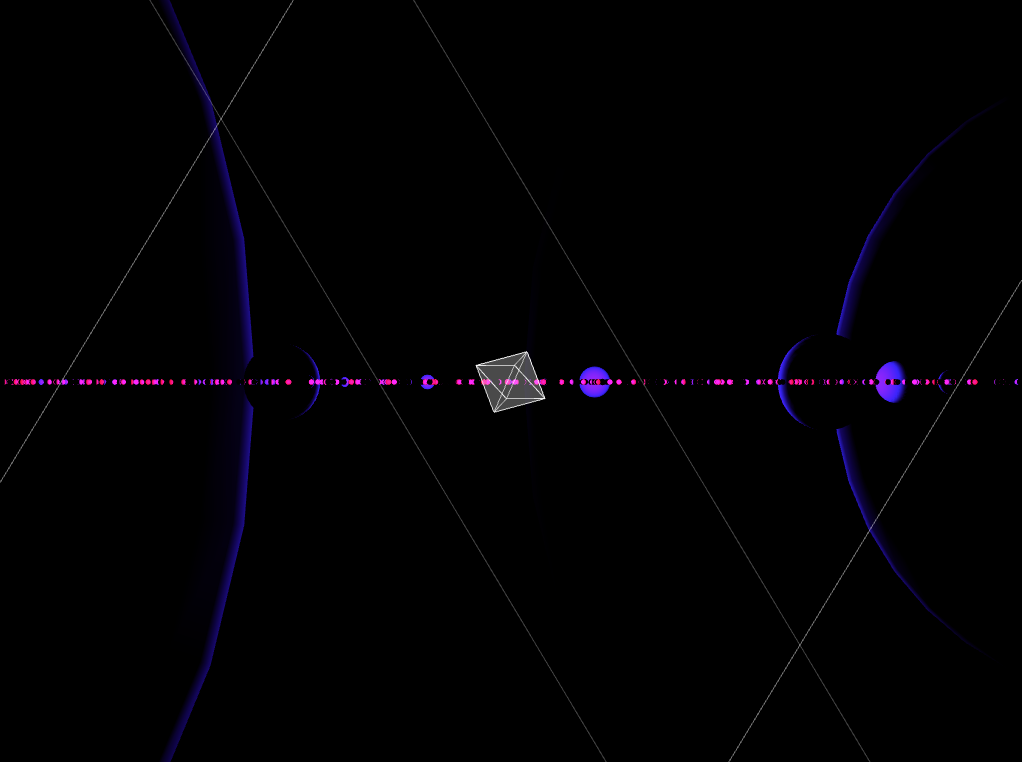

SOFTWARE DESIGN

The first software challenge was getting the Touch Board’s data from the Arduino code into openFrameworks. Fortunately, Elaye has created an openFrameworks add-on for exactly this purpose (ofxTouchBoard, 2016). After getting the board’s basic functionality working, we calibrated its thresholds to find the most useful sensitivity.
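As an illustration of what calibration involves, the sketch below turns one node’s raw reading into a proximity value between 0 and 1. It assumes that each electrode reports a slowly adapting baseline and a filtered reading, as the MPR121 capacitive sensing chip on the Touch Board does, and that their difference grows as a hand approaches. `NodeCalibration`, `proximityFromReading`, `noiseFloor` and `fullScale` are hypothetical names and are not part of ofxTouchBoard.

```cpp
#include <algorithm>
#include <cassert>

// Hypothetical per-node calibration, found by experiment:
// noiseFloor is the largest difference seen with no hand present,
// fullScale is the difference seen with the hand at its closest.
struct NodeCalibration {
    int noiseFloor;
    int fullScale;
};

// Converts one electrode's readings into a proximity in [0, 1],
// where 0 means "no hand detected" and 1 means "hand at its closest".
// Assumes (baseline - filtered) grows as the hand approaches the node.
float proximityFromReading(int baseline, int filtered, const NodeCalibration& cal) {
    assert(cal.fullScale > cal.noiseFloor);
    const int diff = baseline - filtered;
    if (diff <= cal.noiseFloor) {
        return 0.0f;  // within sensor noise: treat as no hand
    }
    const float scaled = static_cast<float>(diff - cal.noiseFloor) /
                         static_cast<float>(cal.fullScale - cal.noiseFloor);
    return std::min(std::max(scaled, 0.0f), 1.0f);  // clamp to [0, 1]
}
```

Scaling every node to the same 0 to 1 range makes the four nodes comparable even if their painted areas, and therefore their raw readings, differ.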

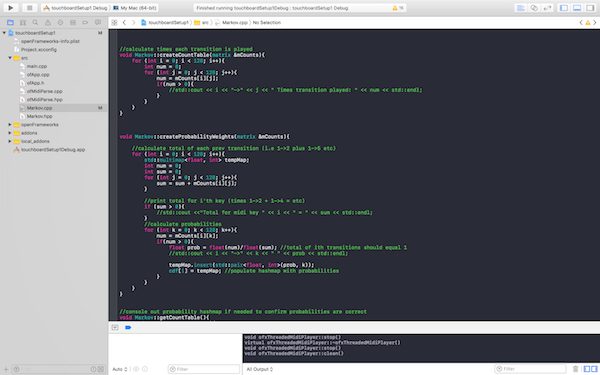

Next, we implemented a basic audio engine using the ofxMaxim library, with a simple synthesizer adapted from one of the example projects that come with the library. We then hard-coded a first-order Markov Chain built from “Happy Birthday” to generate randomly weighted notes, which became the initial model for the instrument’s functionality.

We knew it would be important to let users base their musical expression on other songs, so we set out to create a function allowing the user to import a MIDI file of their choosing. The goal was for this MIDI file to be parsed automatically into the Markov Chain, which could then be played.

To achieve this, we first implemented the ofxMidi add-on for openFrameworks (ofxMidi, 2017), which provides MIDI I/O capabilities. We then integrated it with ofxThreadedMidiPlayer (ofxThreadedMidiPlayer, 2017) to parse preloaded MIDI files, enabling us to read note-on/note-off events, pitch, velocity, and delta time.
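The sketch below shows one way to turn parsed MIDI events into a single melodic line for the Markov Chain. `SimpleMidiEvent`, `MelodyNote` and `extractMelody` are hypothetical names, not the event types of ofxMidi or ofxThreadedMidiPlayer; the fields simply mirror the values listed above. It treats a note-on with velocity 0 as a note-off, which the MIDI specification permits.

```cpp
#include <cassert>
#include <cstdint>
#include <vector>

// Hypothetical, simplified event record (not an ofxMidi type).
struct SimpleMidiEvent {
    bool noteOn;          // true for note-on, false for note-off
    int pitch;            // MIDI note number, 0-127 (60 = middle C)
    int velocity;         // 0-127
    uint32_t deltaTicks;  // ticks since the previous event
};

struct MelodyNote {
    int pitch;
    uint32_t durationTicks;
};

// Builds a monophonic melody: each note lasts from its note-on until its
// note-off. If a new note starts while another is sounding, the old note is
// cut off at that point, so the result is always a single line of notes.
std::vector<MelodyNote> extractMelody(const std::vector<SimpleMidiEvent>& events) {
    std::vector<MelodyNote> melody;
    uint32_t now = 0;
    int activePitch = -1;  // -1 means no note is sounding
    uint32_t activeStart = 0;

    for (const SimpleMidiEvent& e : events) {
        assert(e.pitch >= 0 && e.pitch <= 127);
        now += e.deltaTicks;
        const bool isOff = !e.noteOn || e.velocity == 0;
        if (!isOff) {
            if (activePitch != -1) {
                // Overlap: end the sounding note where the new one begins.
                melody.push_back({activePitch, now - activeStart});
            }
            activePitch = e.pitch;
            activeStart = now;
        } else if (e.pitch == activePitch) {
            melody.push_back({activePitch, now - activeStart});
            activePitch = -1;
        }
        // A note-off for a note that was already cut off is ignored.
    }
    return melody;
}
```

Cutting notes off in this way discards chords and overlapping voices, which fits the observation that monophonic files give the best results.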

We then ported to C++ a JavaScript Markov Chain algorithm given to us by Dr. Simon Katan, with some help from Stack Overflow (referenced in the code). Once the MIDI import, MIDI player, and Markov Chain processes worked individually, we combined them into one fluid process: when the program is built and launched, the selected MIDI file is automatically read and parsed into the Markov Chain.

PUTTING IT ALL TOGETHER...HOW DOES PROJECT: HERALD WORK?

To use Project: Herald, first import a MIDI file of your choosing into the program (monophonic files give the best results). When the program is compiled and run, the instrument analyzes the MIDI file to build a table of the note transitions within the song (for example, how many times note ‘C’ transitions to note ‘G’ versus how many times ‘C’ transitions to note ‘F’).
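The transition table described above can be sketched as a map from each note to the counts of the notes that follow it. This is a minimal illustration, not the project’s ported code; notes are assumed to be MIDI note numbers (60 is middle C), and `TransitionTable` and `countTransitions` are hypothetical names. For the sequence C D C G C F C G, for instance, C is followed by G twice and by F and D once each.

```cpp
#include <cassert>
#include <cstddef>
#include <map>
#include <vector>

// First-order transition table: table[a][b] is how many times
// note b directly follows note a in the song.
using TransitionTable = std::map<int, std::map<int, int>>;

TransitionTable countTransitions(const std::vector<int>& notes) {
    TransitionTable table;
    // Each adjacent pair (notes[i], notes[i + 1]) is one observed transition.
    for (std::size_t i = 0; i + 1 < notes.size(); ++i) {
        ++table[notes[i]][notes[i + 1]];
    }
    return table;
}
```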

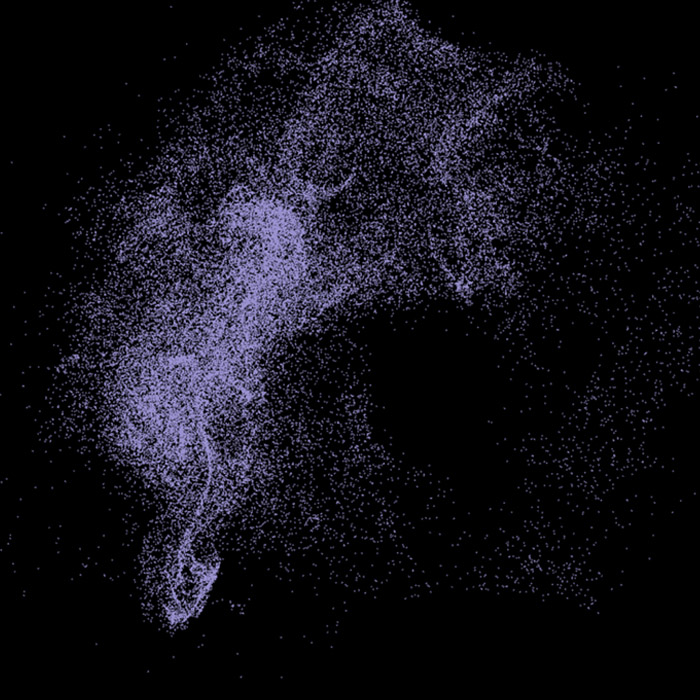

Once Project: Herald finishes analyzing the file, it is time to train the instrument by choosing several sounds you like and saving them. To select a sound, press “rand” while hovering your hand over one of the nodes. The notes that play are chosen according to weighted probabilities derived from the MIDI file loaded earlier. Pressing “rand” also randomizes several other factors, giving the resulting sound a distinct speed and timbre.
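Choosing a note according to weighted probabilities can be sketched with `std::discrete_distribution` from the C++ standard library, which returns an index with probability proportional to its weight. In this hypothetical `nextNote` helper, the weights are the transition counts for the current note, so a note that followed the current one twice in the song is twice as likely to be chosen as one that followed it once.

```cpp
#include <cassert>
#include <cstddef>
#include <map>
#include <random>
#include <vector>

// Hypothetical helper: picks the note that follows the current one.
// successorCounts maps each possible next note (a MIDI note number) to the
// number of times it followed the current note in the analyzed song.
int nextNote(const std::map<int, int>& successorCounts, std::mt19937& rng) {
    assert(!successorCounts.empty());
    std::vector<int> notes;
    std::vector<int> weights;
    for (const auto& entry : successorCounts) {
        notes.push_back(entry.first);     // candidate next note
        weights.push_back(entry.second);  // how often it occurred
    }
    // Returns index i with probability weights[i] / sum(weights).
    std::discrete_distribution<std::size_t> pick(weights.begin(), weights.end());
    return notes[pick(rng)];
}
```

A complete version also needs a fallback for a note with no recorded successor, such as the final note of a song; this sketch simply asserts that the map is not empty.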

When you find a sound that you like, hold your hand still over one of the nodes and, while doing so, press and hold “save” for 2 to 3 seconds. This lets Project: Herald associate the position of your hand with the sound that is currently playing. When you have finished saving your first sound, press “rand” to find another sound you like (preferably with a different speed and timbre from the first), and save it using the same process. Repeat this once more; after saving at least three sounds with varying speeds and timbres, press “train.” The instrument is then ready to play.
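The instrument performs this step with machine learning, and the Rapidmix API is the library this document names; the sketch below does not use that API. To illustrate the idea only, it substitutes a much simpler technique, inverse-distance-weighted interpolation: each saved example pairs the four node readings at the moment “save” was held with that sound’s settings, and a new hand position receives a blend of the saved settings, weighted towards the closest examples. `HandPosition`, `SoundSettings`, `SavedExample` and `predictSound` are hypothetical, and speed and timbre stand in for whatever parameters the synthesizer actually uses.

```cpp
#include <array>
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical: proximity (0..1) of the hand to each of the four nodes.
using HandPosition = std::array<float, 4>;

struct SoundSettings {
    float speed;   // placeholder synth parameter, e.g. notes per second
    float timbre;  // placeholder synth parameter, e.g. a brightness amount
};

// One example recorded while "save" was held.
struct SavedExample {
    HandPosition position;
    SoundSettings sound;
};

// Inverse-distance-weighted interpolation (power 2): a stand-in for a trained
// model, not the Rapidmix API. Closer saved examples get larger weights.
SoundSettings predictSound(const std::vector<SavedExample>& examples,
                           const HandPosition& hand) {
    assert(!examples.empty());
    float weightSum = 0.0f;
    float speed = 0.0f;
    float timbre = 0.0f;
    for (const SavedExample& ex : examples) {
        float dist2 = 0.0f;  // squared Euclidean distance in node space
        for (std::size_t i = 0; i < hand.size(); ++i) {
            const float d = hand[i] - ex.position[i];
            dist2 += d * d;
        }
        if (dist2 < 1e-12f) {
            return ex.sound;  // the hand is exactly on a saved position
        }
        const float w = 1.0f / dist2;
        weightSum += w;
        speed += w * ex.sound.speed;
        timbre += w * ex.sound.timbre;
    }
    return {speed / weightSum, timbre / weightSum};
}
```

With three or more saved sounds, hand positions between the saved ones blend their speeds and timbres rather than jumping between them.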

Place your hand over the nodes and the instrument starts playing through a set of note sequences. Moving your hand completely away from the nodes stops the music, while moving from one node to the next seamlessly changes the speed and timbre of the sequences.
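How the software decides that the hand has left the nodes is not described here; one plausible approach is sketched below. It assumes each node’s reading has already been scaled to a proximity between 0 and 1, and it uses two thresholds (hysteresis) so that a hand hovering at the edge of the detection range does not make the music flicker on and off. `PlayGate` and its threshold values are hypothetical.

```cpp
#include <algorithm>
#include <array>
#include <cassert>

// Hypothetical on/off gate for "move your hand away to stop the music".
class PlayGate {
public:
    PlayGate(float startLevel, float stopLevel)
        : startLevel_(startLevel), stopLevel_(stopLevel) {
        assert(stopLevel_ < startLevel_);  // the gap between them is the hysteresis
    }

    // proximities: one value per node in [0, 1].
    // Returns true while notes should be playing.
    bool update(const std::array<float, 4>& proximities) {
        const float strongest = *std::max_element(proximities.begin(), proximities.end());
        if (!playing_ && strongest >= startLevel_) {
            playing_ = true;   // hand clearly over a node: start
        } else if (playing_ && strongest < stopLevel_) {
            playing_ = false;  // hand clearly gone: stop
        }
        return playing_;       // between thresholds: keep the current state
    }

private:
    float startLevel_;
    float stopLevel_;
    bool playing_ = false;
};
```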

PROBLEMS AND DESIGN OBSTACLES

As this was our first foray into C++, as well as our first venture into software/hardware integration, we knew there would be challenges. Luckily, we were able to overcome many of them. One of the toughest (yet most enjoyable) hurdles was creating the MIDI parsing and Markov Chain functionality. Although we had been given a JavaScript model to follow, the C++ implementation was very different, and we had to consult Stack Overflow several times; still, we really enjoyed the challenge and the payoff once it all worked together.

Implementing the Rapidmix API in the instrument was another fun challenge. The API is fairly new and there is little documentation online to walk through it, so we had to read through the API itself and make sense of its code and the examples it provides. Integrating it into the instrument was a great accomplishment for us.

One feature we tried several times to implement was the ability to use a sampled sound instead of a synthesizer. However, we encountered errors in ofxMaxim’s sample player and unfortunately had to make do with the synthesizer.

Beyond these, we encountered challenges at every turn, but nothing that we couldn’t figure out with a little (sometimes a lot of) brainstorming, determination, and time.

EVALUATING PROJECT: HERALD

We feel that we have built a strong first prototype of Project: Herald and have received feedback that it would be worth developing further, which we aim to do. We see a lot of potential in the instrument and are excited for its future; so far, it has served well as a “first look” at the potential of this approach.

That said, there is a lot of work that can and will be done to make this a truly playable instrument with the depth we strive for. First, we would like to integrate timing into the Markov Chain. This is a complex question, as some of the timing is determined by the user’s movements combined with the varying speeds of the trained nodes. We would also like to improve the Markov Chain by extending it to second order.
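A second-order chain conditions each note on the previous two notes instead of one, so the state becomes a pair of notes. The sketch below is one possible implementation under that assumption, using MIDI note numbers; `SecondOrderChain` and `kNoNote` are hypothetical names. Because a pair of notes carries more context, the output would likely follow the source song more closely, at the cost of some variety, and pairs that never occurred need a fallback, for example to the first-order table.

```cpp
#include <cassert>
#include <cstddef>
#include <map>
#include <random>
#include <utility>
#include <vector>

// Hypothetical second-order Markov Chain over MIDI note numbers. The state is
// the pair (note before last, last note) rather than a single note.
class SecondOrderChain {
public:
    static constexpr int kNoNote = -1;  // returned when a pair was never seen

    explicit SecondOrderChain(const std::vector<int>& notes) {
        // Each run of three consecutive notes is one observed transition.
        for (std::size_t i = 0; i + 2 < notes.size(); ++i) {
            ++counts_[{notes[i], notes[i + 1]}][notes[i + 2]];
        }
    }

    // Draws the next note in proportion to how often it followed (a, b)
    // in the song, or returns kNoNote if (a, b) never occurred.
    int next(int a, int b, std::mt19937& rng) const {
        const auto it = counts_.find({a, b});
        if (it == counts_.end()) {
            return kNoNote;
        }
        std::vector<int> notes;
        std::vector<int> weights;
        for (const auto& entry : it->second) {
            notes.push_back(entry.first);
            weights.push_back(entry.second);
        }
        assert(!notes.empty());
        std::discrete_distribution<std::size_t> pick(weights.begin(), weights.end());
        return notes[pick(rng)];
    }

private:
    std::map<std::pair<int, int>, std::map<int, int>> counts_;
};
```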

Another aim is polyphony, which would greatly enhance the expressivity of the instrument. Again, this is a complex question we have discussed: some instruments (piano, strings, etc.) work well with polyphony, while others (some synth patches) can sound discordant.

We also have ideas for developing the physical design further. We envision the instrument remaining on a flat surface, but we want to place the Touch Board inside or underneath it, away from the user, to keep the playing surface as minimal as possible.

We would also like to introduce other features, such as dragging and dropping a new MIDI file onto the GUI and having it parsed automatically without recompiling, and a choice of several instruments (each of which would need its own randomization features).

With all these improvements in mind, we know these additions and more are possible with more time, and we look forward to seeing how far we can develop Project: Herald in the future.

Bibliography

Soundbeam. (2016). Price list 2016. [online] Available at: http://www.soundbeam.co.uk/new-page-3/ [Accessed May 2017].

Humaninstruments.co.uk. (2017). Digital musical instruments for the disabled. [online] Available at: http://www.humaninstruments.co.uk [Accessed Feb. 2017].

GitHub. (2016). elaye/ofxTouchBoard. [online] Available at: https://github.com/elaye/ofxTouchBoard [Accessed Oct. 2016].

GitHub. (2017). danomatika/ofxMidi. [online] Available at: https://github.com/danomatika/ofxMidi [Accessed Feb. 2017].

GitHub. (2017). jvcleave/ofxThreadedMidiPlayer. [online] Available at: https://github.com/jvcleave/ofxThreadedMidiPlayer [Accessed Feb. 2017].