Music Leap

by: Francesco Perticarari

Project Description

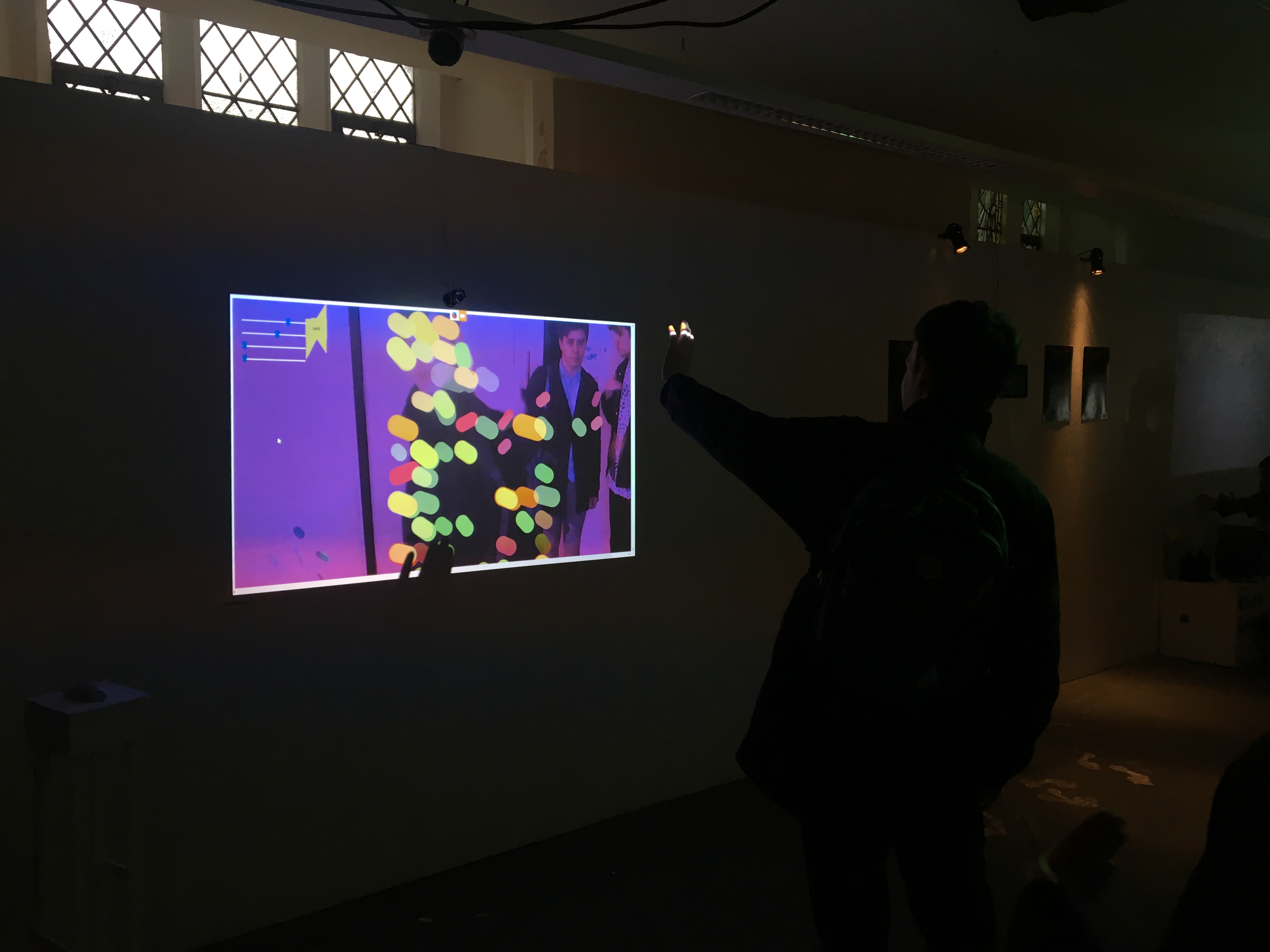

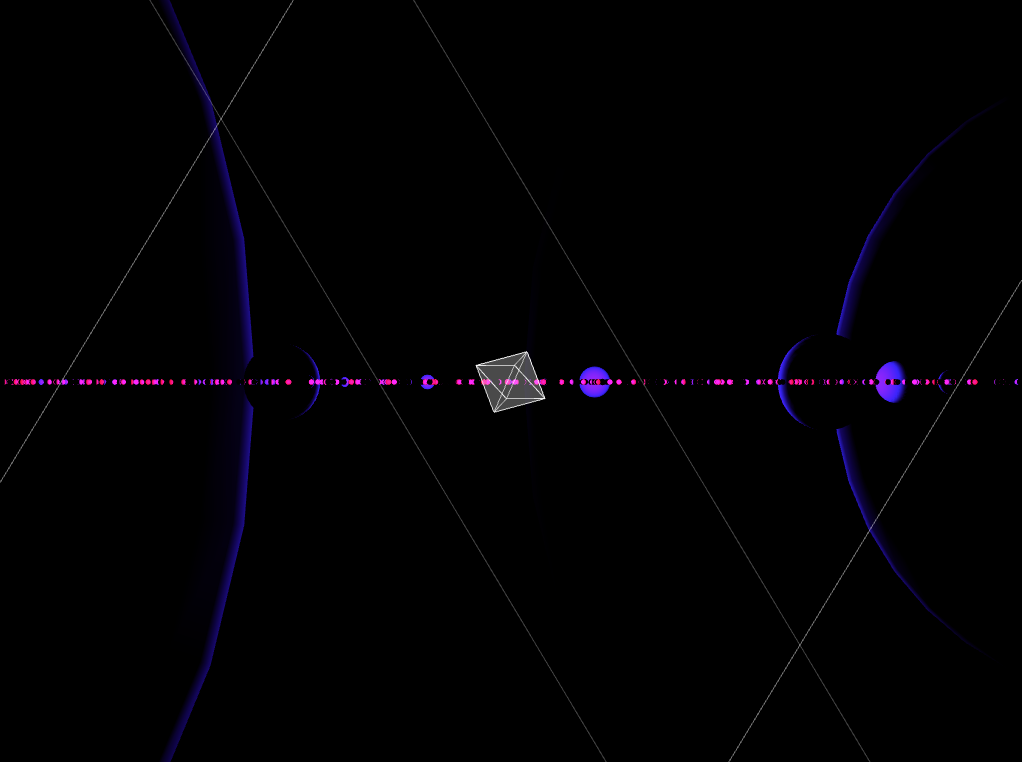

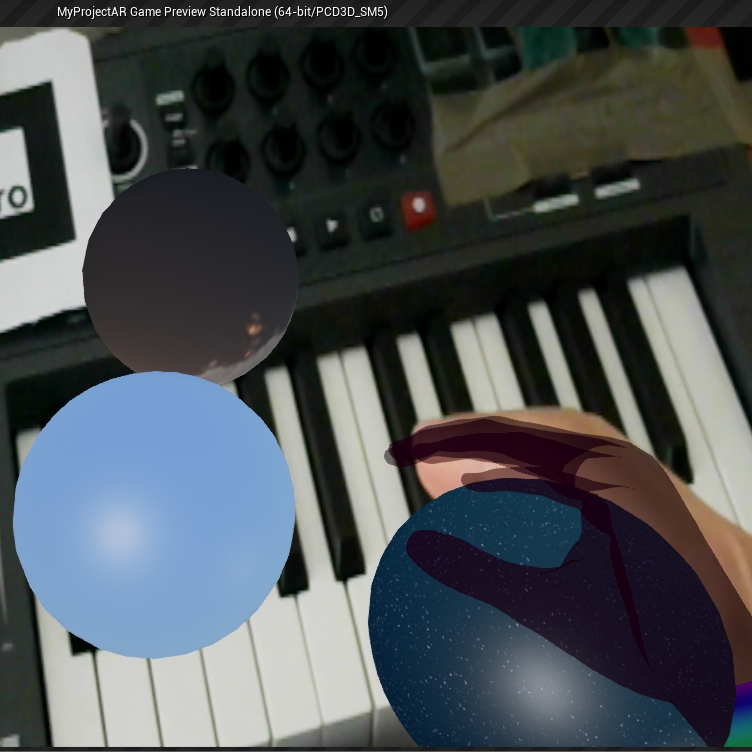

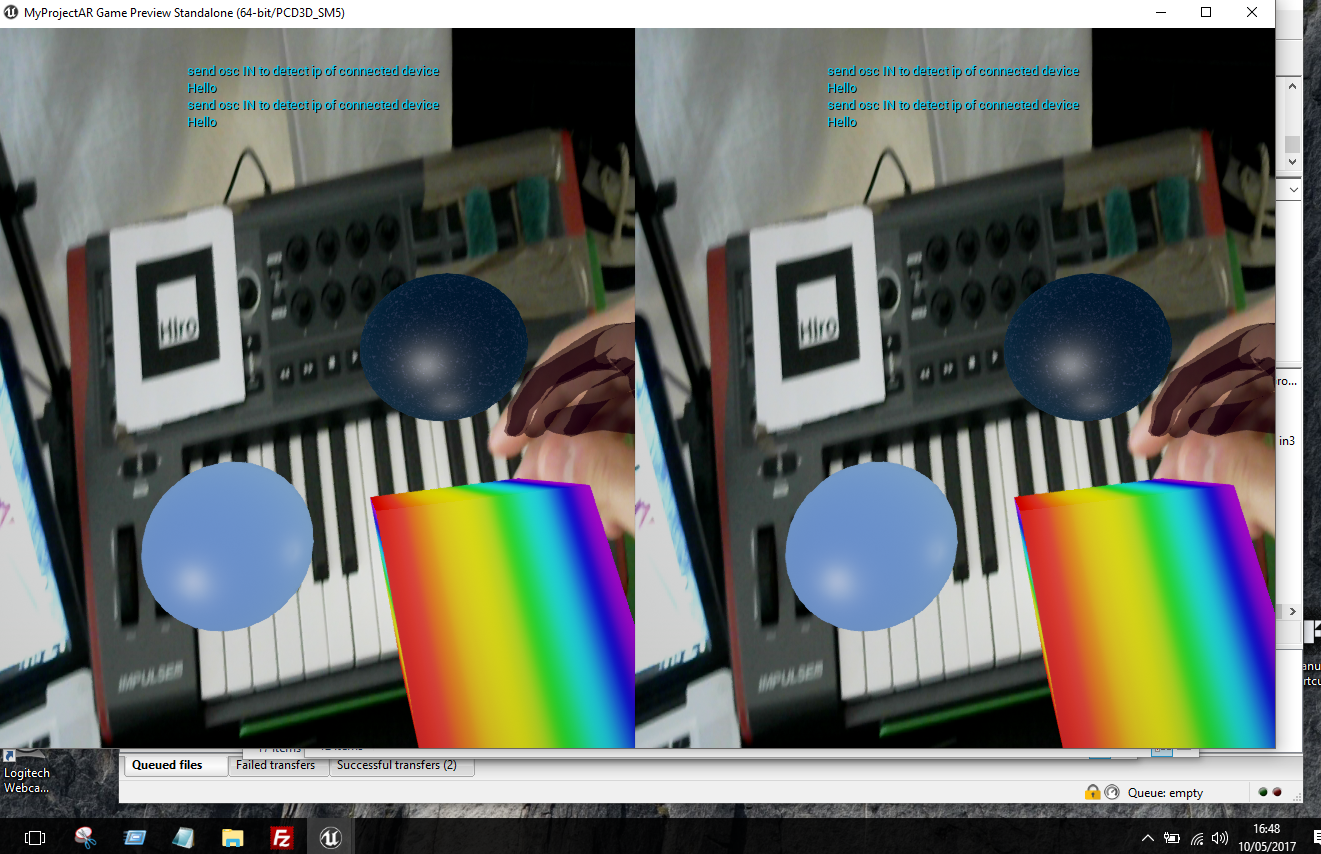

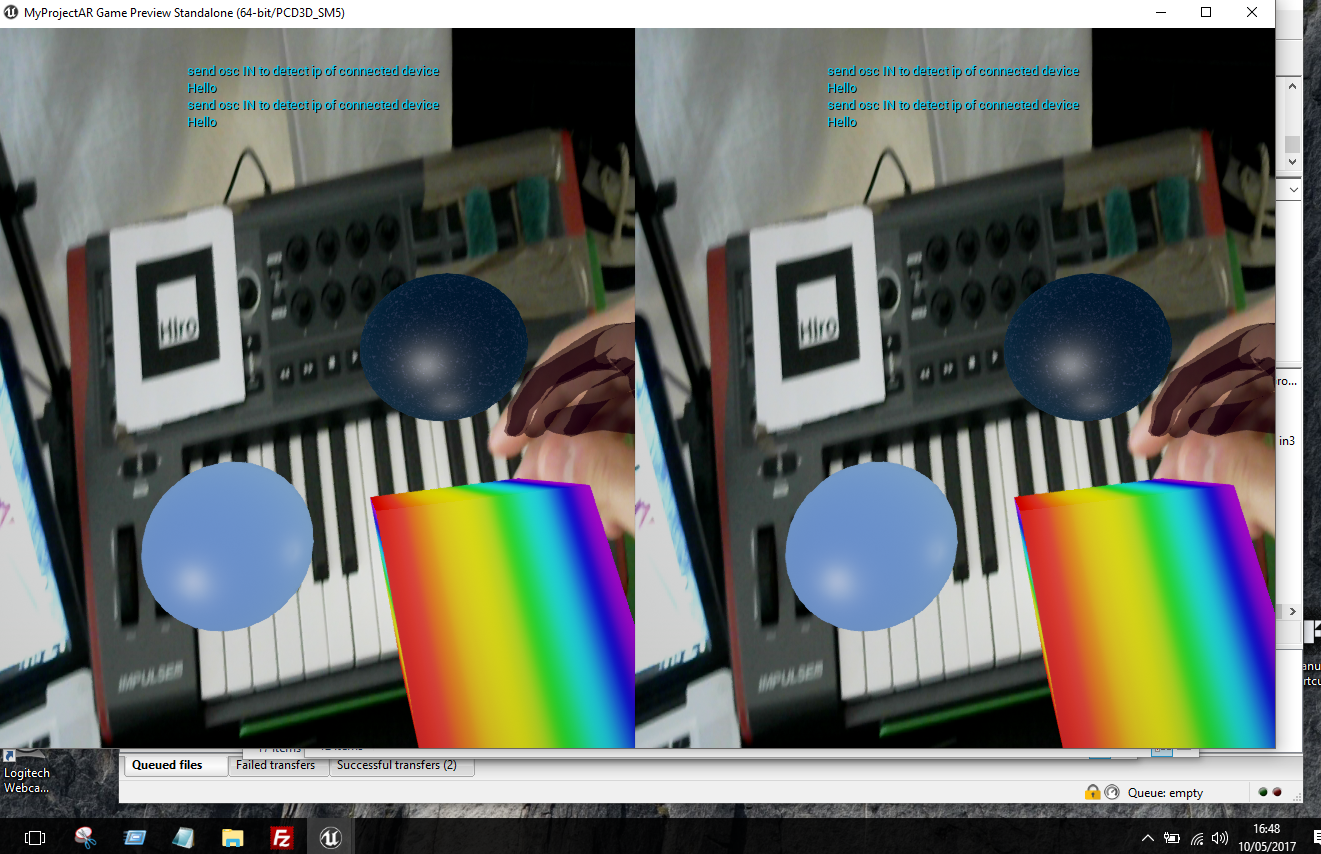

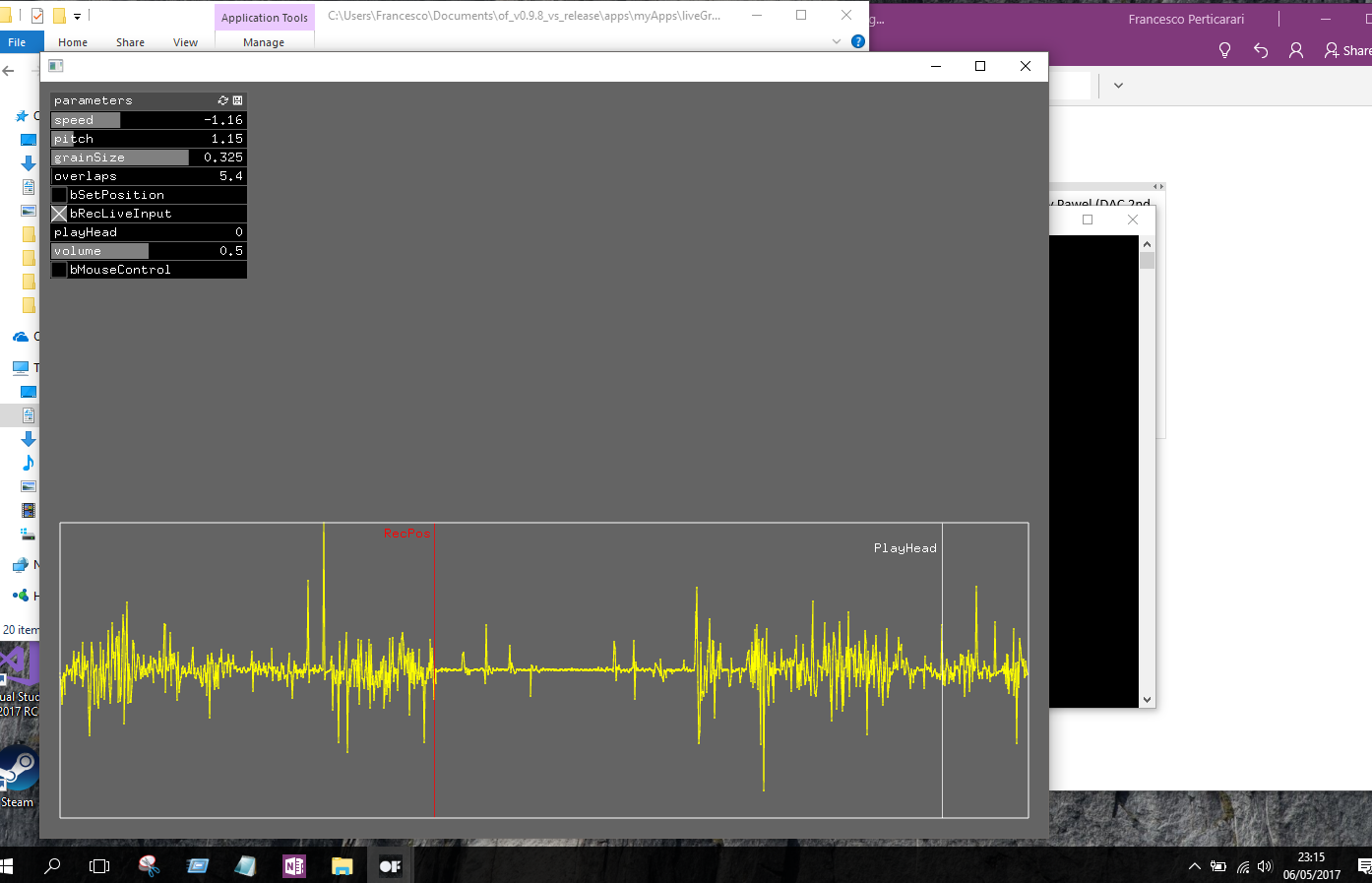

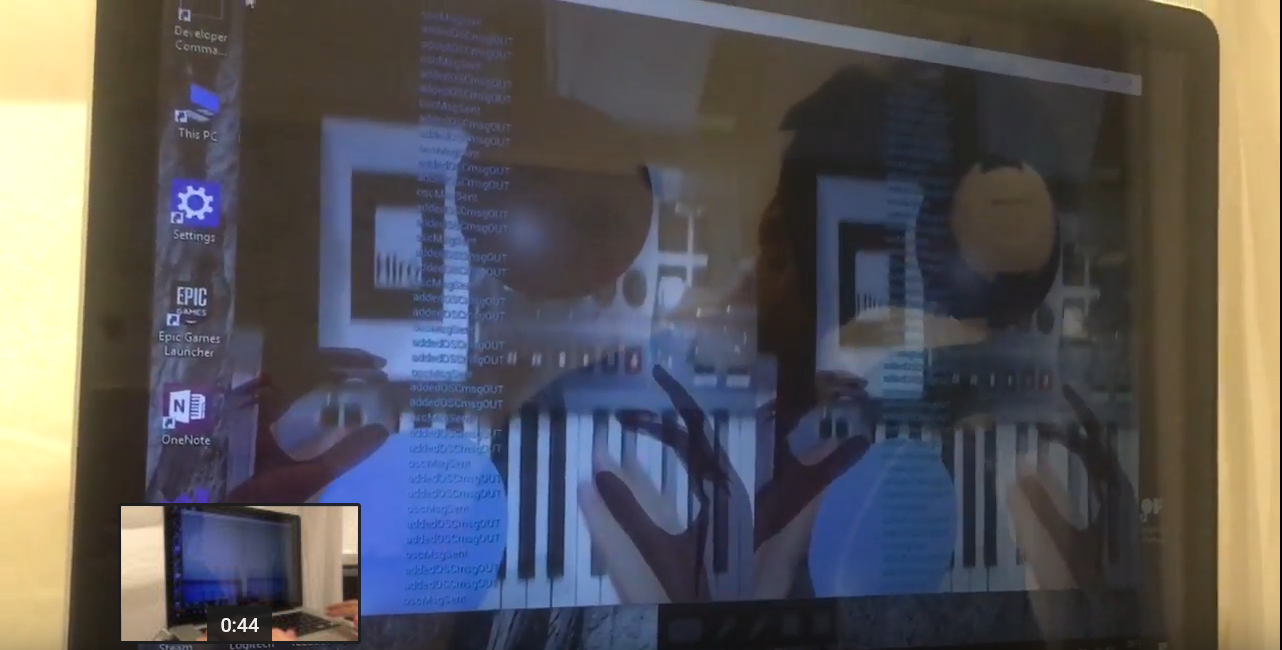

Music Leap is an Augmented Reality controller for music software. It was developed to add flexible virtual control devices to a real-world music setup; in its current form, these devices control a simple granulation-based audio engine.

The project features the use of several bits of software and a couple of hardware tools to achieve an effect similar to Microsoft’s HoloLens®. However, rather than just a limited and “wired-up” imitation of the AR goggles, Music Leap should be considered a stepping stone towards achieving affordable and immersive AR through mobile phones. It is my view, in fact, that once depth-cameras start to spread across smartphones (two are already out), this work could be easily converted into an integrated system that could do away completely with a host computer and extra hardware.

In its current release, Music Leap is a fun prototype that has allowed me to explore a different way of performing music. What’s more, it pushed me to explore creative ways of integrating a virtual interactive environment with the world around us. Its main downside is its complexity, breadth, and bulk, which force anyone wishing to try it to get hold of several bits of equipment. This also meant that I had to spend a lot of time orchestrating the different components and adapting several programs across platforms rather than developing one functionality in great depth.

Music Leap is available for download on my GitHub page: https://github.com/fpert041/musicDream

Background, Research, Testing & Evaluation

The initial idea for the project was simple but ambitious: creating a Virtual Reality application that could take the power and flexibility of digital music making into an embodied 3D space. The intended audience was tech-oriented musicians who wanted to play with their digital instruments in real space.

Originally the plan was to buy an Oculus Rift® SDK and develop a ‘mappable’ VR interface for music control. The aim was to craft a product that could position itself between the highly complex VR DAW Sound Stage® and the VR music games such as PlayThings®: something akin to touchOSC® in a VR space rather than on the flat surface of a phone or a tablet.

The main problem with the above idea was that getting hold of the Oculus SDK was costly in terms of both shipping time and money. The other major issue was the need for a powerful Windows PC with a top-end graphics card to support it. Furthermore, the project itself changed from a pair effort to a solo work due to other problems. Ultimately, I found myself in December with a solid understanding of OSC communication, low-level programming and hierarchical referencing within a C++ project, but without any practical work for the actual project.

Up until then, the partner I was initially meant to work with had done some experimentation with the Unreal Engine 4® editor to create virtual 3D game scenes. Graphically speaking, those early attempts had really inspired me to find a new virtual solution for music control devices. Besides, I had carried out some brief research showing how, from the early 20th century until today, a growing number of people had reshaped or multiplied the ways music could be made, performed and listened to, thanks to ingenious new uses of the latest technologies available to them. Since one of the most promising avenues of technological development these days is Virtual Reality, I wanted to pursue its possible application as an instrument to bring about the next breakthrough in music making.

My main goal remained the creation of a program that could allow a techy musician to enhance his or her studio or performance set with virtual controllers, without being overwhelmed by a full-fledged analogue-like studio full of patch cords and complex modules. Therefore, I researched alternative VR environments, and specifically Google Cardboard, as a means to achieve my objective. Eventually, I discovered that one could stream any Unreal VR project to a mobile phone through Steam VR on Windows. I then looked for a possible way of interacting with virtual reality and decided to try out Leap Motion and its hand-tracking capacity.

By the beginning of last January, when I was due to submit a proposal for my project, I had already covered the following on the technical side:

- Understand and install the oscpack library for a C++ project

- Create or modify .cpp and header files to connect to UDP ports and deal with OSC messages

- Interpret OSC messages (port / main address / message addresses / arguments)

- How, when and why to launch and handle separate threads

- Access separate threads and visualise data through pointers

- Create a demo using the visual interface provided by openFrameworks

- Understand how to create a GUI independently of openFrameworks (fltk library and the instructions in Programming: Principles and Practice Using C++)

- Understand better how to code in Unreal Engine 4 with both C++ and Blueprint scripts

- Install and run Windows 10 on a Mac through Boot Camp

- Set up and run a UE4 project

- Create a first-person environment in a UE4 project

- Test VR streaming through suitable apps: VRidge by RiftCat was ultimately chosen for Android, and KinoVR® replaced it once I had to revert to my old iPhone 5. Both were picked for their reliability and lack of noticeable latency.
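To make the OSC items in this list more concrete, here is a minimal sketch of how a single OSC message is laid out in bytes. It is not taken from the Music Leap code, which relied on the oscpack library. It uses only the C++ standard library, and the function names and the `/grain/size` address are hypothetical, chosen purely for illustration.

```cpp
#include <cassert>
#include <cstdint>
#include <cstring>
#include <string>
#include <vector>

// Append a string in OSC form: its bytes, a terminating NUL, then extra
// NULs so that the total length is a multiple of 4.
void appendOscString(std::vector<std::uint8_t>& out, const std::string& s) {
    out.insert(out.end(), s.begin(), s.end());
    std::size_t padding = 4 - (s.size() % 4);  // always 1..4, so one NUL is guaranteed
    out.insert(out.end(), padding, 0);
}

// Append a 32-bit float in big-endian byte order, as OSC requires.
void appendOscFloat(std::vector<std::uint8_t>& out, float value) {
    std::uint32_t bits = 0;
    static_assert(sizeof(bits) == sizeof(value), "float must be 32 bits");
    std::memcpy(&bits, &value, sizeof(bits));
    out.push_back(static_cast<std::uint8_t>((bits >> 24) & 0xFF));
    out.push_back(static_cast<std::uint8_t>((bits >> 16) & 0xFF));
    out.push_back(static_cast<std::uint8_t>((bits >> 8) & 0xFF));
    out.push_back(static_cast<std::uint8_t>(bits & 0xFF));
}

// Build a message carrying one float argument (hypothetical helper):
// address pattern, then the type tag string ",f", then the argument.
std::vector<std::uint8_t> makeFloatMessage(const std::string& address, float value) {
    assert(!address.empty() && address[0] == '/');  // OSC addresses start with '/'
    std::vector<std::uint8_t> packet;
    appendOscString(packet, address);
    appendOscString(packet, ",f");
    appendOscFloat(packet, value);
    return packet;
}
```

Calling `makeFloatMessage("/grain/size", 0.5f)` yields a 20-byte packet: 12 bytes for the padded address, 4 for the padded type tag `,f`, and 4 for the float. A receiver interprets a message by reading those same three parts back in order.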

On the creative side, I worked hard to figure out the best route for my project to follow. I considered that experimental musicians had already been experimenting with DIY controllers for a while, often using MaxMSP, Arduino sets, contact microphones and Leap Motion devices to create custom instruments and controllers. In particular, I drew direct inspiration from the YouTube videos of the German producer Perplex On[1], as well as from Laetitia Sonami’s Lady’s Glove and Spring Spyre custom controllers.

I tested the feasibility of using only the visual feedback of a VR environment to manipulate controllers and objects in a virtual space using the Leap Motion sample game and Blocks VR, which are included in the Leap Motion SDK package. I then went on to implement a floating-hands character powered by the Leap in a basic Unreal Engine 4 project.[2]

During the above testing I found out that, provided the virtual objects are big enough and the physical interaction with the projection of the user’s hands is well implemented, the brain is tricked into “feeling” a tactile response.

For this test, I initially tried the Leap Motion myself, using the two programs mentioned above. I then repeated the same tests with several users: first my course peers, then people who were either musicians or computing students, and finally people, such as members of my family, who were neither.

As the project progressed, I was hoping to test the interface I was crafting just as rigorously. Unfortunately, the scope of the whole project ended up being too vast for a solo developer with multiple commitments and a restricted timescale to achieve this. The only other test I eventually carried out was the final testing of my prototype during the last week before the deadline.

In my project proposal, I had also expressed a strong will to let the user customise the OSC messages output from the app and use it with any DAW. Again, this was something else I would be forced to drop as it would have required the development of a whole new sub-program within the main app.

Objectively, I didn’t expect my prototype to be the holy-grail universal replacement for physical controllers such as the AKAI ones, the Native Instruments ones or the latest ROLI ones. My main concern back in January was that we don’t yet have holograms that feel solid, just like in Star Trek. As it turned out, there was also another issue I had been repeatedly warned about: I was no software development company. The whole project as I had envisioned it had a really large scope, and I would finally learn the hard way that every extra functionality I wanted required developing both the specific code for it and a way to integrate its functionality with the rest of the program. Having chosen to work with multiple pieces of hardware and software together also meant a disproportionate use of CPU power, which limited the number of features I could sustain on my MacBook Pro 2011.

[5] & [6]

I did, however, produce something that can attract the more tech-oriented music makers and computing-savvy experimenters by providing a tool that can enhance their performance or compositional flow in a new way. As a matter of fact, I had surveyed 15 musicians [3] from Goldsmiths, University of London during the winter period and found that 14 of them believed the idea of adding virtual controllers to their sets was something they would very much look forward to. Most [4] also indicated that a mixed reality tool, which would allow them to still interact with real controllers and instruments, was the app design that would best work for their needs.

Music Leap turned out to be precisely that: an Augmented Reality playground, where hand interaction with virtual 3D objects triggers control messages for music events. In my project proposal, I stated that I would have liked to create “objects whose orientation is detected, or more experimental items whose location (probably relative to the user) could send out other control-messages”. Eventually, I managed to achieve functionalities along those lines, but the usability of the app in practice ended up being limited by four main shortfalls:

- Requirement of many different pieces of software and hardware

- Fixed audio engine: the controllers only work by interfacing with the tailor-made instrument I coded in openFrameworks

- Occasional lags and glitches due to extreme CPU usage

- Virtual controllers only visible if the AR marker is completely visible

After completing my final prototype version, I managed to have five Music Computing students test it. They really liked the concept and appreciated four specific aspects of the product:

- It empowers you to step away from the computer like a physical controller but is almost as portable as a digital device (only a paper print is needed as a marker)

- You don’t need to look at the computer screen and the position can be easily adjusted

- It has a cool “sci-fi” appeal to it

- The granular audio engine is a nice-sounding and versatile device

They also noted three major areas of improvement:

- It should really be untethered, as this could give it a selling point over Oculus Rift and HTC Vive apps

- It takes a little while to get used to the perspective and the distance of the virtual objects

- It should be more stable and ideally most of its separate parts should be integrated into one device

Three of them left the following comments:

“The idea is really useful because it allows musicians to create a bridge between digital software and analogue controllers in real space. I would definitely buy it if it was a more stable release” - Dimitrios

“Seems cool. I’d like to see further developments on the graphical aspect”- Zac

“I really enjoyed the immersive experience but think more flexibility on assigning the parameters would greatly improve its usability” - Tal

In the end, Music Leap ended up being more of an experimental “hacky” instrument than the multi-purpose controller it was meant to be. It does feature most of the core functionalities I wanted to explore, but it has serious limitations in its practical use. This is mostly because it is a jigsaw of components, which I had to hack together in place of a comprehensive environment such as the HTC Vive SDK, Microsoft’s HoloLens or (possibly) the futuristic Google Tango SDK for upcoming phones boasting integrated depth cameras.

Music Leap in its current release may not be a comprehensive AR app ready for release, nor a stunning portfolio work for a creative computing freelancer. However, it provides an interesting experimental tool to play with if you want to add a sci-fi twist to your current live set. It also explores several solutions that could become very useful when smartphones are able to capture both a video stream and a depth point cloud. Among these solutions are: texturing objects with a camera stream, animating virtual hands to match the position of the real hands, and non-conventional control devices.

Project development: issues, solutions and learning

A quick video run-through of the project can be found on my YouTube channel:

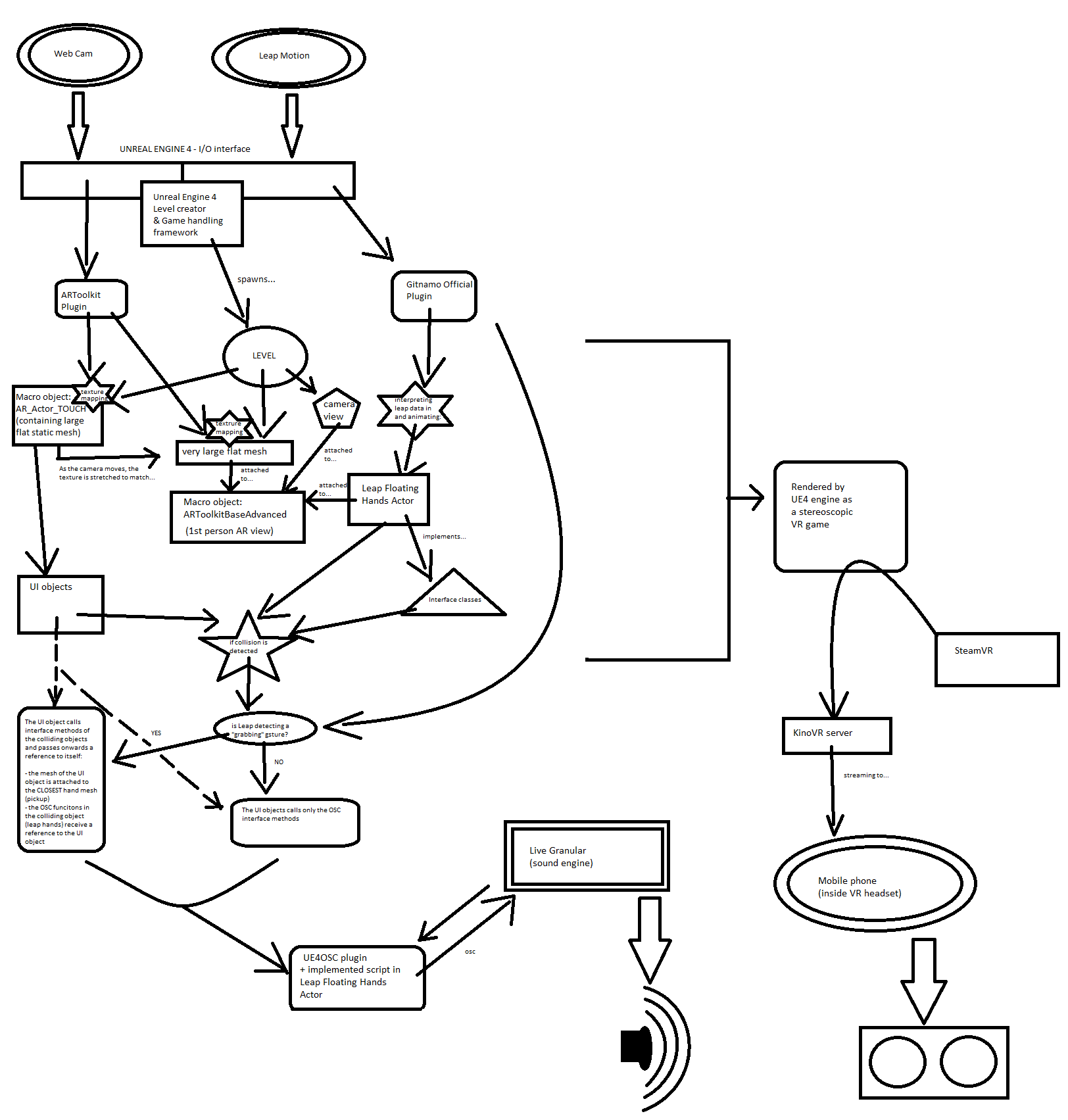

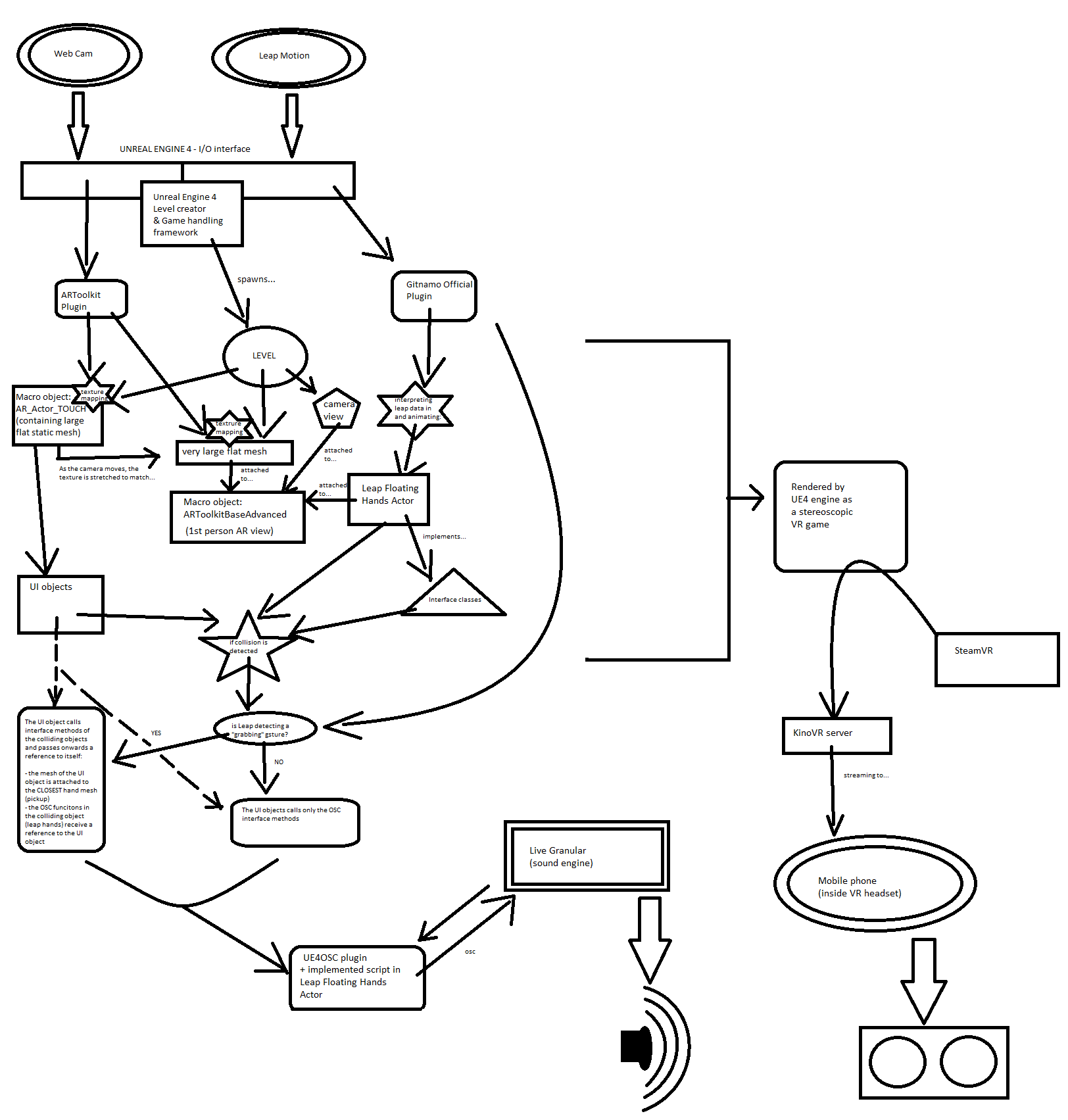

Whereas here you can find a flow chart of the project elements and their interaction:

Since submitting my proposal for this project, I have stuck to the timetable I had set for myself. As time went by, I annotated where I actually was against where I had planned to be. Besides the timetable, I also kept a diary of the major problems I found, the solutions I worked out for them, and the progress or changes of plan I made throughout this experience.

Both of these documents are available online at the following pages:

- Timetable:

- Diary with chronological pictures/snapshots:

Concluding thoughts

Developing Music Leap has been an incredible experience. I learned a great deal from it and it was definitely worth the ride: from learning low-level C++ at the beginning to mastering source control, and from understanding the dynamics of skeleton tracking to learning how to dynamically texture-map a mesh in a game engine.

This project also turned out to be tremendously stressful because of all the different things it tried to be. I often got stuck debugging issues caused by porting code and spent most of my time making things interact properly rather than making the end product robust, user-friendly, and artistically “beautiful”. I also have to recognise that spending weeks “reinventing the wheel” just to hack together technologies that already exist (but that I could not use) was a powerful learning experience, but probably also the worst use of my time in terms of product results.

In the end, I feel proud of what I have achieved and particularly value the grit and resolve shown when everything seemed to be going wrong. However, I will definitely need to be more specific the next time I embark on a solo project of this scope. A more specific and narrow objective will, in fact, allow me to explore the chosen topic in much more depth.

Notes:

[1] see here: https://www.youtube.com/watch?v=TCRW0oU1RO0 and here: https://www.youtube.com/watch?v=mdOcS40nkAE

[2] following this video tutorial: https://www.youtube.com/watch?v=fmtBQzD7ZFE

[3] 10 from Music Computing year 2, 3 from Popular Music year 2, and 2 from Music Computing year 1 were asked the questions: “Have you ever thought about the possibility of controlling your studio or live set by means of virtual controllers?” / “Would you look forward to using it if a software for this existed?” / “How would you design it to best suit your needs?”

[4] 8 out of 15

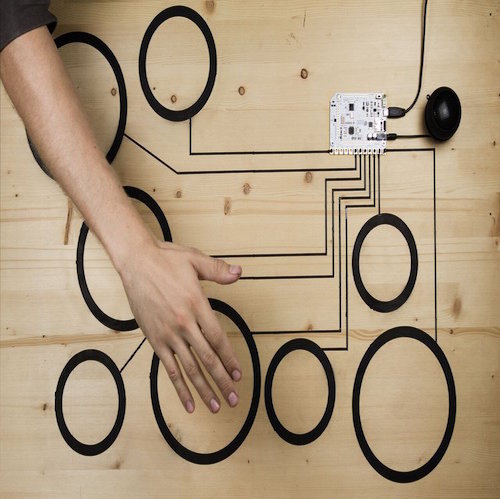

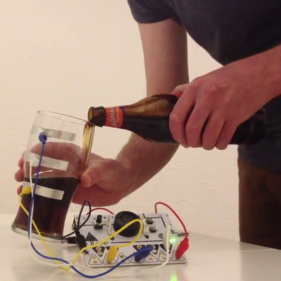

[5] image: frame taken from https://www.youtube.com/watch?v=mdOcS40nkAE

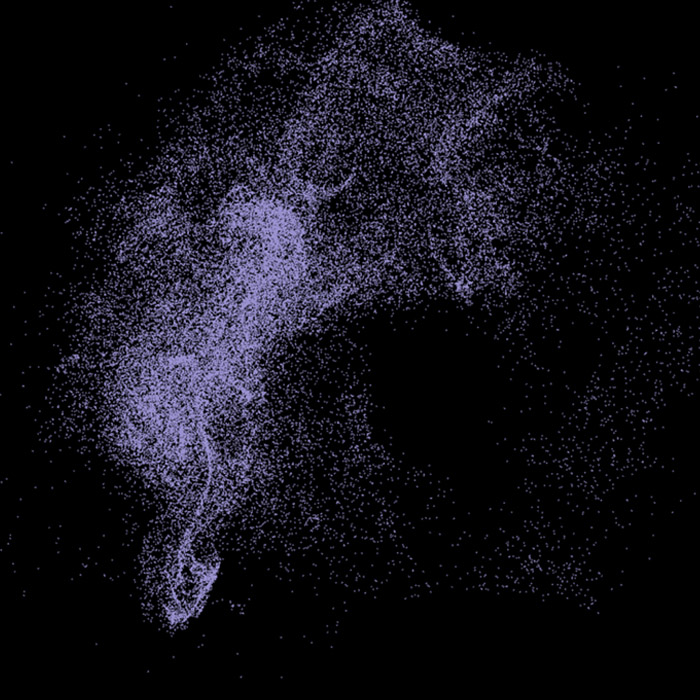

[6] image: uncredited image found on https://ibb.co/jAbvkk

References

Batty, Joshua - GrainPlayer

Gitnamo - UE4 Leap Motion VR Jenga - (including following 2nd and 3rd part videos)

Killer Bunnies From Mars - Unreal Engine VR to VRidge to Steam HTC Vive to Cardboard

Mallet, Byron - Controlling Live in Virtual Reality

Mauviel, Paul - YouTube Channel

MBryonic - 10 Best VR Music Experiences

Parabolic Labs - YouTube Channel

Perticarari, F. and Masroor, H. - DIGITAL MUSIC MAKING & THE RISE OF VR

Shirley, Dan - UE4OSC Tutorial

Stack Overflow - https://stackoverflow.com/

Unreal Engine Forum - https://forums.unrealengine.com/

Unreal Engine Answers - https://answers.unrealengine.com/

Ward, Reuben - YouTube Channel

Wikipedia - Virtual Reality (last retrieved November 2016)

Special Thanks

A big thank-you to Pawel Dziadur for his help in coding the audio engine based on Joshua Batty's repo, and also for lending me his Leap Motion controller twice 🙂

If you want to see more of my works, visit my portfolio website: https://francescoperticarari.com/