Atelier Konstanz

The e-David Painting Robot

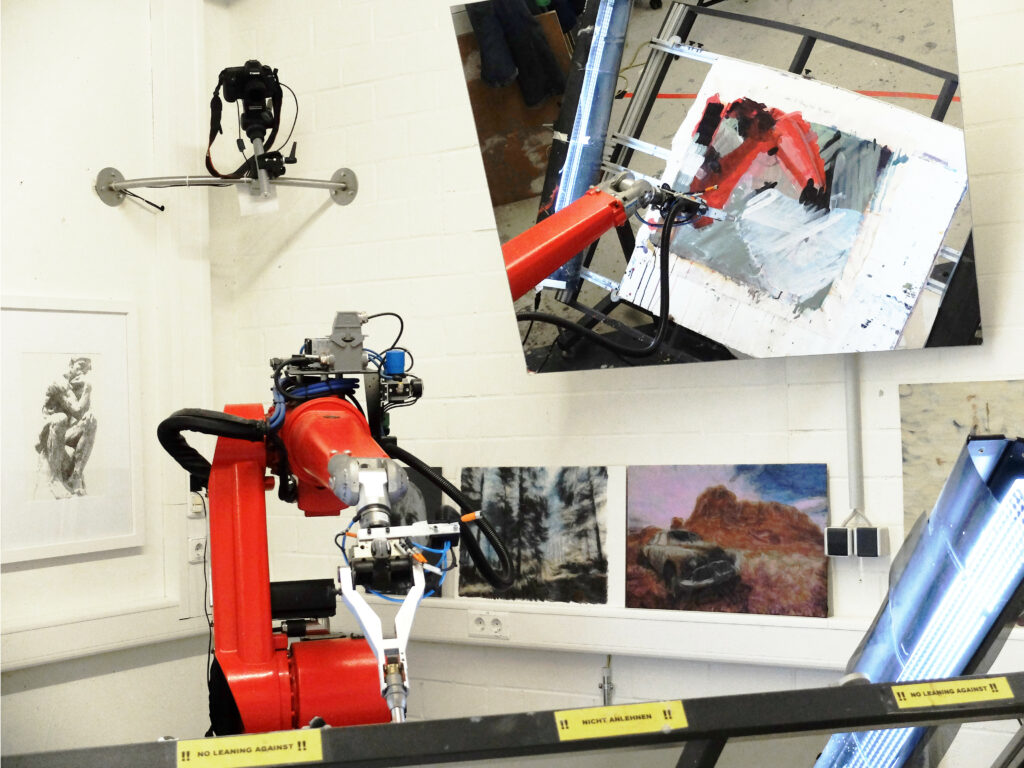

Building a painting machine that uses a brush to apply paint to a canvas, mimicking the human painting process, is the vision of the e-David project. The e-David painting robots are standard industrial robots that are typically used for applications such as welding or machine placement. We extend these machines, which normally only repeat fixed programmed movements, with a camera and a control computer so that they can react dynamically to environmental changes.

The system works on a template basis, i.e., a digital template image is presented to the control computer and reproduced as accurately as possible by the painting robot. To do this, the painting software calculates brush strokes that follow certain features of the original image. These strokes are then broken down into points, which are sent to the robot. The robot traces the canvas point by point and stroke by stroke, gradually creating a complete painting. Although the robots move with an accuracy of ±0.01mm, inaccuracies are introduced into the process by brushes, paint, and canvas: brush bristles get bent, paint drips, and the canvas curls.
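The step of breaking a stroke down into points can be illustrated with a minimal sketch. It assumes a stroke is a 2D polyline in millimeters and that points are placed at a fixed spacing along it; the function name `resample_stroke` and the spacing parameter are hypothetical and not taken from the e-David software.

```python
import math

def resample_stroke(polyline, spacing_mm):
    """Place points every `spacing_mm` along a polyline of (x, y) tuples in mm.

    Hypothetical helper for illustration; the actual e-David point
    generation may work differently.
    """
    if spacing_mm <= 0:
        raise ValueError("spacing_mm must be positive")
    if not polyline:
        return []
    points = [polyline[0]]
    carried = 0.0  # path length covered since the last emitted point
    for (x0, y0), (x1, y1) in zip(polyline, polyline[1:]):
        seg_len = math.hypot(x1 - x0, y1 - y0)
        if seg_len == 0:
            continue  # skip duplicate vertices
        d = spacing_mm - carried  # distance into this segment of the next point
        while d <= seg_len + 1e-9:
            t = d / seg_len
            points.append((x0 + t * (x1 - x0), y0 + t * (y1 - y0)))
            d += spacing_mm
        carried = seg_len - (d - spacing_mm)
    # Always keep the stroke's end point so the stroke is not shortened.
    end = polyline[-1]
    if math.hypot(points[-1][0] - end[0], points[-1][1] - end[1]) > 1e-9:
        points.append(end)
    return points

# Example: an L-shaped stroke sampled every 2 mm.
for point in resample_stroke([(0, 0), (3, 0), (3, 4)], 2):
    print(point)
```

A fixed spacing keeps the example simple; a real system might instead place more points in regions of high curvature.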

E-David Facilities

Available Painting Machines

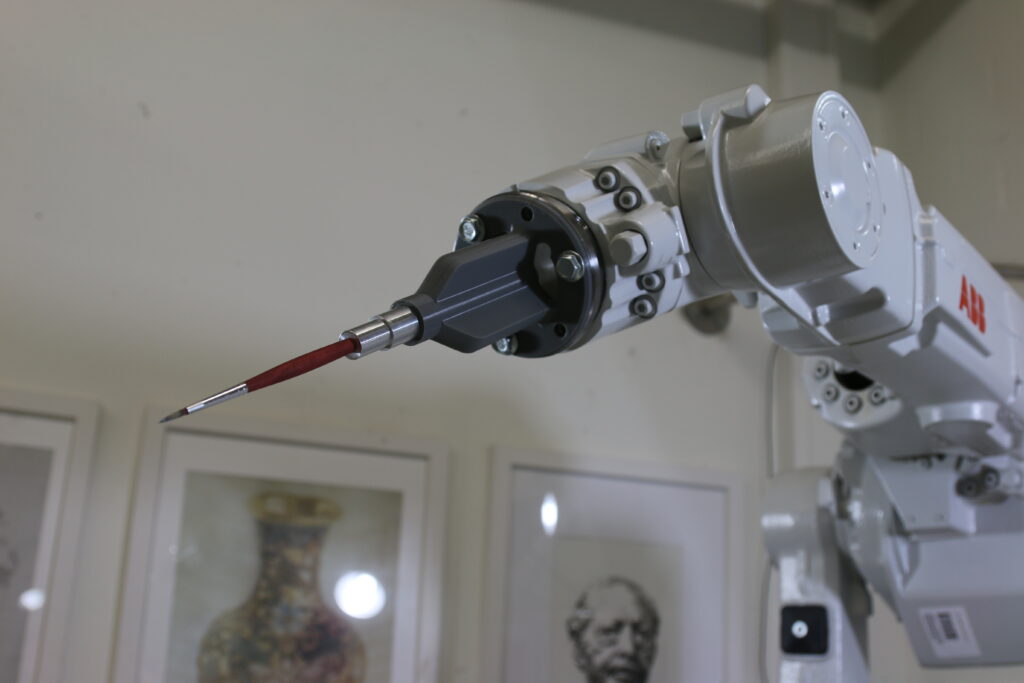

The ABB IRB 1660ID is a six-axis industrial welding robot that is permanently mounted in a dedicated room at the university. It has a large painting area of approximately 1m x 1.2m. A light barrier provides safety while the machine is running in automatic mode.

Left: e-David Robot arm with the mounted brush holder system. Right: The whole setup of the main robot (ABB IRB 1660ID). Images taken by Michael Stroh 2023

The ABB IRB 1200 is a six-axis general-purpose robot that is part of a mobile demonstration setup. It uses the same robot controller and software as the IRB 1660ID, so the two can be used interchangeably with the same e-David software. Its painting area is 80cm x 60cm. “Mobile” means that 2-3 people can transport it to an exhibition venue within one day using a large van. The setup weighs approximately 400kg but can be broken down into segments of at most 50kg. The painting surface also has an automatic paper feed, which can be used to create “infinite” paintings or to acquire data automatically. The robot has the same feedback system as the large arm. It is enclosed on four sides by transparent acrylic panes, forming a cube. The safety system is a lockable door that stops the robot if it is opened in automatic mode.

All images show the mobile painting robot (ABB IRB 1200) from different viewpoints with its painting canvas on the left side. Images on the left and middle were taken by Michael Stroh in 2023. The right one was by Patrick Paetzold in 2023.

We also have an XY-plotter with a Z-axis for pen movement, which can be used as an e-David device. The plotter has a painting area of 2m x 1.2m and can be used in a lying or standing configuration. Currently, no feedback system is set up for this machine, but one can be retrofitted by adding markers and a camera. Pens need to be modified with 3D-printed adapters. The machine can be controlled using Grbl or via the e-David software system.

All images show the plotter from different viewpoints. Images taken by Michael Stroh 2023

Brush holding system

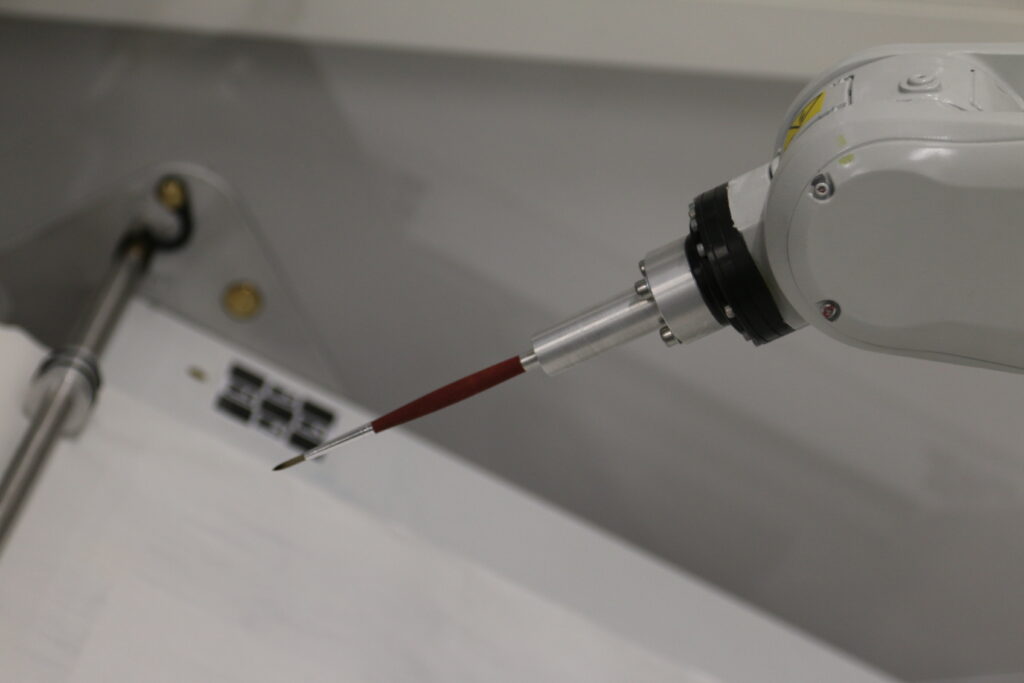

The robot arms are equipped with a tool-holding system for round brushes. These are held coaxially and must be pre-cut to a fixed length and then center drilled. Currently, the holder does not prevent tool rotation. The main benefit of this system is a very rigid mounting of the brush, with zero play. This only leaves brush hair dynamics as a variable when painting. Currently, we are using round brushes of sizes 2, 4, 6, 8, and 12. Further work is planned to extend this system to different brushes, requiring some hardware experimentation and robot programming. However, there are physical limits to the extendability of the brush holder system, and thus, it can only facilitate normal brush sizes. Anything above brush size 24 might not work as intended or fit into the current holders. An automatic brush changer is currently not enabled but is planned to be implemented in the near future.

Image showing the brush holding system of the smaller robot unit (ABB IRB 1200). The photo was taken by Patrick Paetzold in 2023.

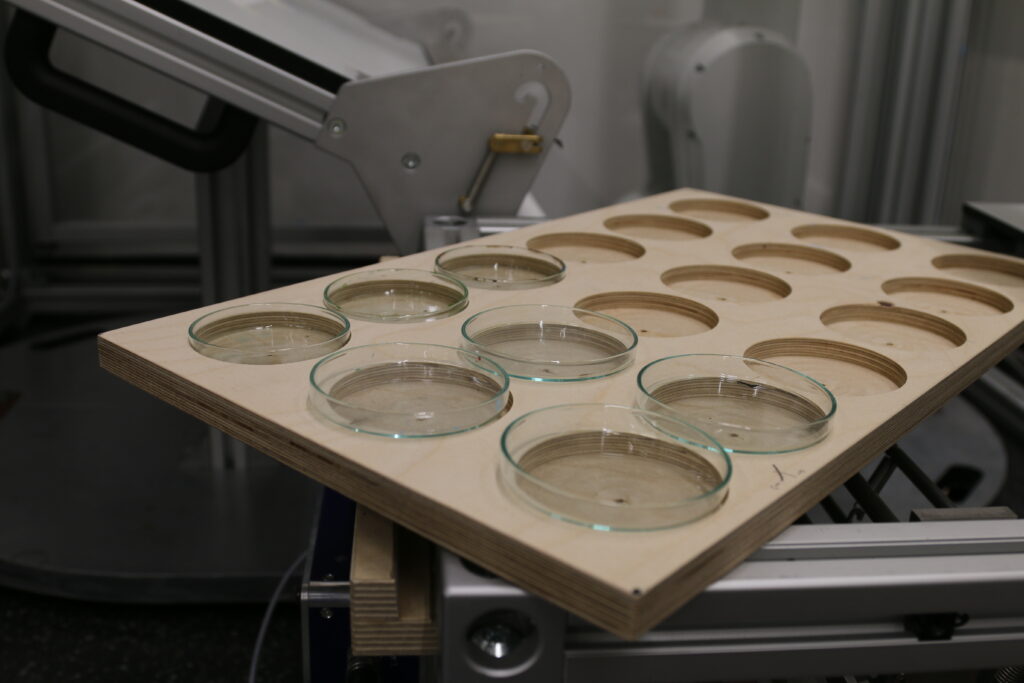

Palettes and Brush Cleaning

Both robots use a system of interchangeable palettes and cleaning devices. The palettes are wooden boards with a grid arrangement of cutouts for glass containers. Depending on the palette, these containers can be:

- Nine large glass beakers for inks

- 18 large Petri dishes for general colors

- 80 small Petri dishes

The picture shows the palette that can fit 18 large Petri dishes beside the mobile painting robot setup. Taken by Michael Stroh 2023.

Other palettes can be manufactured and included. In this case, a new movement for paint pickup must be created using ABB RobotStudio, and the geometry of the palette must be included in the robot station data. This process takes approximately 30 minutes. Glassware is usually obtained from the chemistry department’s storage of standardized lab products.

The cleaning device is a round container that holds water and a 3D-printed grid located just below the surface. The robot cleans the brush by moving it through the water and hitting the grid along the way. This effectively cleans the brush if the tool is not too contaminated. The robot can then be commanded to pick up a color from a specific slot or clean the brush.

Canvas System

The robots use a flat painting surface mounted in front of them. The painting surface can be defined by moving the robot to three corner points, defining the spanned rectangle as a workpiece. The machines can then move relative to this known plane with high precision. This calibration step is usually done only after the robot has been reassembled, and the painting surface should be understood as a static object with respect to the robot. Canvases, paper, or other painting targets can be mounted on the surface using tape or our clamping system. Painting targets must be rectangular and aligned with the axes of the painting surface and should be mounted as flat as possible. The targets are defined in a config file, which is described in the software section.
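The idea of defining the painting surface from three corner points can be sketched as follows. This is an illustration of the underlying geometry only, not the ABB controller's implementation: it assumes the three taught points are the origin corner, a corner along the surface's x-axis, and a corner along its y-axis, all in robot base coordinates. The function names `canvas_frame` and `to_robot` are hypothetical.

```python
import math

def _sub(a, b):
    return tuple(ai - bi for ai, bi in zip(a, b))

def _cross(a, b):
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])

def _normalize(v):
    length = math.sqrt(sum(c * c for c in v))
    if length == 0:
        raise ValueError("corner points must not coincide or be collinear")
    return tuple(c / length for c in v)

def canvas_frame(origin, x_corner, y_corner):
    """Build an orthonormal frame (origin, x, y, normal) from three taught corners."""
    x_axis = _normalize(_sub(x_corner, origin))
    # The normal is perpendicular to both edges of the rectangle.
    normal = _normalize(_cross(x_axis, _sub(y_corner, origin)))
    # Recompute y so the frame stays orthogonal even if the taught
    # corners are slightly off a perfect right angle.
    y_axis = _cross(normal, x_axis)
    return origin, x_axis, y_axis, normal

def to_robot(frame, u, v, height=0.0):
    """Map surface coordinates (u, v) plus a height above the plane to robot coordinates."""
    origin, x_axis, y_axis, normal = frame
    return tuple(o + u * x + v * y + height * n
                 for o, x, y, n in zip(origin, x_axis, y_axis, normal))

# Example with made-up corner coordinates in mm.
frame = canvas_frame((100.0, 0.0, 50.0), (100.0, 600.0, 50.0), (500.0, 0.0, 50.0))
print(to_robot(frame, 10.0, 20.0))
```

Once such a frame is known, every stroke point can be expressed in surface coordinates, which is why the calibration only needs to be repeated when the setup is reassembled.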

Feedback Camera and Markers

The robot uses a ceiling-mounted DSLR (Canon 6D or 5D) to acquire images of the painting surface. The resolution is usually 2.5 to 3 pixels per millimeter. After finishing a command file, the robot moves to a “hide” pose and automatically takes a feedback picture. Image transmission and processing take a few seconds, after which the picture is available as a color-corrected and rectified PNG image in a given folder.

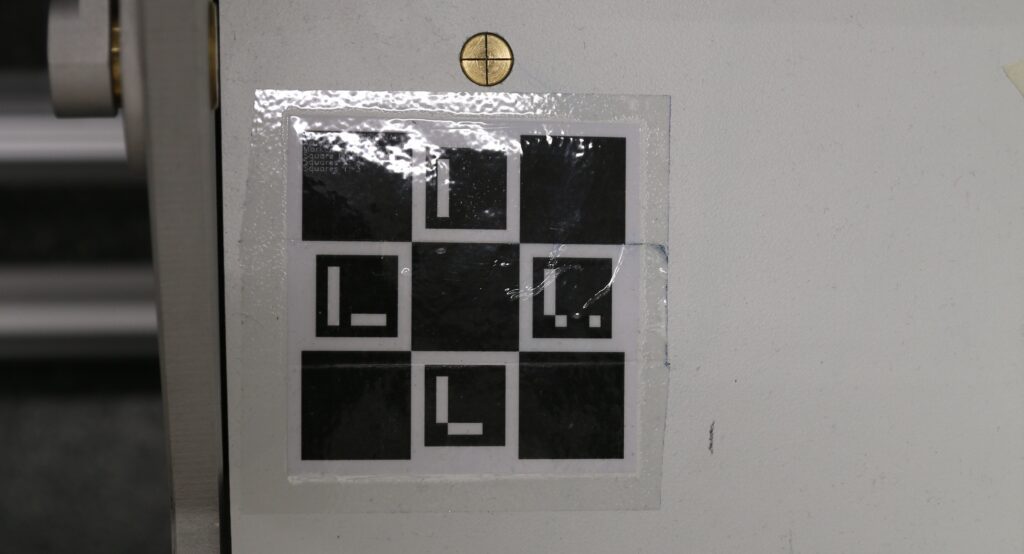

To acquire stable feedback images, the system requires ChArUco markers on the painting surface. These are currently attached in the corners to minimize the loss of workspace. During painting with feedback, all markers must be kept free of paint and must not be occluded. Color calibration is performed whenever the camera can detect a color calibration target on the canvas. The target can be left there permanently or be placed as needed.

Left: One of the ChArUco markers used to track the canvas by the visual feedback system. Right: A mounted digital camera used for the visual feedback of the mobile robot (ABB IRB 1200). Images taken by Michael Stroh 2023

Software

Currently, there are three software components in e-David:

- Robot controller – Each robot arm is controlled by an IRC5 programmed in RAPID, a proprietary ABB language. RobotStudio is used to edit RAPID code, visualize the robot setup, and show programmed paths. Users can directly edit RAPID code to introduce new movements. The existing software on the IRC5 controller listens for command strings coming in via a TCP connection, which specify points for a stroke, a command to execute the previously sent points, to pick up a color, or to clean the brush.

- e-David driver – The main control software coordinates user input, feedback, and robot state. Several config files define the current palette, canvas objects, and default actions for underspecified commands. Users send commands to the driver by writing a command file and placing it in a folder. The command language is specified in a separate document. Commands are executed in sequence, and afterward, the camera system is used to acquire a feedback image. The driver software can provide unprocessed images, images cropped to a selected canvas object, or cropped and rescaled images to match a user-selected pixel size. Feedback images are stored sequentially in another folder. For user software, this file-system-based command/feedback structure is the primary interface to use e-David.

- ShapePainter – The highest level for e-David control accepts modified SVG images containing paintable regions with some extra information. SVG data is preprocessed, and shapes are classified as regions or individual strokes. These are then mapped to palette colors and painted in order of descending area. Each shape is realized by one or more painting agents, which paint a shape in a specific style. For example, an unconstrained agent will fill it with a given stroke pattern, while a DST agent will paint aligned with the shape’s exterior. Agents can be layered, e.g., to apply a smoothing agent after an initial infill pass. Users can create their individualized painting agents in Python within the provided framework.
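The ShapePainter ordering described above (map each shape to a palette color, then paint in order of descending area) can be sketched in a few lines. The `Shape` class, the polygon representation, and the nearest-color rule based on squared RGB distance are assumptions made for this illustration; the source does not state how ShapePainter performs the color mapping.

```python
from dataclasses import dataclass

@dataclass
class Shape:
    name: str
    outline: list  # polygon vertices [(x, y), ...] in mm (assumed representation)
    rgb: tuple     # desired color as (r, g, b)

def polygon_area(outline):
    """Area of a simple polygon via the shoelace formula."""
    total = 0.0
    for (x0, y0), (x1, y1) in zip(outline, outline[1:] + outline[:1]):
        total += x0 * y1 - x1 * y0
    return abs(total) / 2.0

def nearest_palette_slot(rgb, palette):
    """Pick the palette slot whose color is closest in RGB space (assumed rule)."""
    return min(palette,
               key=lambda slot: sum((a - b) ** 2 for a, b in zip(rgb, palette[slot])))

def painting_order(shapes, palette):
    """Return (shape name, palette slot) pairs, largest shapes first."""
    ordered = sorted(shapes, key=lambda s: polygon_area(s.outline), reverse=True)
    return [(s.name, nearest_palette_slot(s.rgb, palette)) for s in ordered]

# Hypothetical palette: slot number -> color in that slot.
palette = {0: (255, 255, 255), 1: (0, 0, 0), 2: (200, 30, 30)}
shapes = [
    Shape("roof", [(0, 0), (10, 0), (0, 10)], (180, 40, 40)),
    Shape("sky", [(0, 0), (100, 0), (100, 100), (0, 100)], (240, 240, 250)),
    Shape("tree", [(0, 0), (20, 0), (20, 30), (0, 30)], (10, 20, 10)),
]
print(painting_order(shapes, palette))
# -> [('sky', 0), ('tree', 1), ('roof', 2)]
```

Painting large shapes first means that small details are applied last and are not covered by later background regions.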

Several calibration procedures are available at this level, like a pressure-width function that maps a brush pressure to a resulting stroke width with an accuracy of ±0.1mm.

- A new interface system using a typical tablet or graphics tablet as an input device is currently being developed to enable active painting onto a digital canvas that the robot can replicate.
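A pressure-width function of the kind mentioned in this section could be represented as a table of calibration measurements with interpolation between them. The sketch below assumes piecewise-linear interpolation, a width that increases monotonically with pressure, and made-up sample values; none of this is taken from the e-David calibration code.

```python
from bisect import bisect_left

def interpolate(x, xs, ys):
    """Piecewise-linear interpolation over strictly increasing xs, clamped at the ends."""
    if x <= xs[0]:
        return ys[0]
    if x >= xs[-1]:
        return ys[-1]
    i = bisect_left(xs, x)  # xs[i - 1] < x <= xs[i]
    x0, x1 = xs[i - 1], xs[i]
    y0, y1 = ys[i - 1], ys[i]
    return y0 + (y1 - y0) * (x - x0) / (x1 - x0)

# Made-up calibration samples: pressure values and measured stroke widths in mm.
PRESSURES = [0.0, 1.0, 2.0, 3.0]
WIDTHS_MM = [0.5, 1.5, 3.0, 4.0]

def width_for_pressure(pressure):
    """Forward mapping: predicted stroke width for a given brush pressure."""
    return interpolate(pressure, PRESSURES, WIDTHS_MM)

def pressure_for_width(width_mm):
    """Inverse mapping: pressure needed for a desired width (requires monotonic widths)."""
    return interpolate(width_mm, WIDTHS_MM, PRESSURES)

print(width_for_pressure(1.5))   # -> 2.25
print(pressure_for_width(2.25))  # -> 1.5
```

The inverse mapping is the one a painting agent would typically need: it starts from the stroke width it wants and asks which pressure to command.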

Materials

The e-David system is generally agnostic to the materials used, but we mostly work with the following:

- Acrylic paint by Schmincke and Liquitex

- Round nylon brushes (daVinci College and Junior series)

- White, untextured cardboard canvasses or paper

- Gouache has been used successfully in the past.

Oil paints are discouraged due to their slow drying time and difficulty cleaning up.

Limitations

- Painting with the brush held at an angle requires the user to avoid robot singularities, as these will be caught by the ABB controllers, which then stop the robots.

- Painting speed is currently limited to 100mm/s but could be increased.

- The feedback cycle takes a few seconds to complete and is a blocking action, i.e., the robot cannot perform any action in parallel while a feedback image is being taken.

- For safety reasons, humans cannot approach the robots while they are working in automatic mode. Safety mechanisms shut the machines down if a human enters a dangerous zone.

In this regard, we first analyzed the work by Faraj et al. on layered shape trees, decomposing an image into a set of geometric regions sorted based on their level of detail, and developed a digital abstraction method to serve as a pre-processing step for the robotic painting framework. This method creates a complete pipeline that starts from a given input image, abstracts it based on the depicted scene objects and supplied user parameters, and finally produces a layered shape tree. We then iteratively paint all shapes in this tree using the e-David robot to create a semi-automatic method for image painting.

To paint different regions with varying styles, we started to research and develop different methods for realizing geometric regions on the canvas that imitate human painter strategies (e.g., painting locally and expanding, sharp corners, etc.).

Like human painters, who detect and correct such problems during the painting process, e-David has a camera that regularly takes a photo of the canvas. This allows the actual state of the canvas to be compared with the template image. Differences between the two images are detected, and new brush strokes are generated from them. If an area is too light or too dark, it is touched up with the appropriate color. This cycle is repeated until all parts of the image have reached the desired accuracy. The result is a feedback loop that corrects errors as they occur. To complete a painting fully automatically, the robot needs 1 to 3 days of working time.
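The comparison step of this feedback loop can be illustrated with a small sketch. It assumes grayscale images stored as nested lists with values from 0 (black) to 255 (white) and a fixed brightness tolerance; the function name `find_corrections` and the tolerance value are hypothetical, and the real system works on color-corrected camera images rather than toy grids.

```python
def find_corrections(target, canvas, tolerance=10):
    """Compare the canvas photo with the target image cell by cell.

    Returns a list of (row, col, action) tuples, where action is "darken"
    if the canvas is too light and "lighten" if it is too dark.
    """
    corrections = []
    for row, (target_row, canvas_row) in enumerate(zip(target, canvas)):
        for col, (wanted, actual) in enumerate(zip(target_row, canvas_row)):
            diff = actual - wanted
            if diff > tolerance:
                corrections.append((row, col, "darken"))
            elif diff < -tolerance:
                corrections.append((row, col, "lighten"))
    return corrections

# Toy 2x2 example: one cell is too light, one is too dark.
target = [[0, 128], [255, 128]]
canvas = [[40, 130], [250, 60]]
print(find_corrections(target, canvas))
# -> [(0, 0, 'darken'), (1, 1, 'lighten')]
```

In a loop, new strokes would be generated for the reported cells, the canvas photographed again, and the comparison repeated until the list of corrections is empty.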

In addition to automatic painting, e-David can also be used by artists to create a fusion of human and robotic art. Instead of using automatic painting software, the artist can use the robot to apply a specific stroke pattern, for example. The high repeatability of the machine enables the artist to create structures on the canvas whose precision cannot be matched by humans. The complete painting process can be saved and painted in different variations, such as with different colors, brushes, or sizes. This creates a “distributed” work, which extends over several canvases. By combining human creativity with machine precision, new possibilities are created in painting.

Team

Development Lead – Michael Stroh

Michael Stroh (1999, Friedrichshafen, Germany) is a Ph.D. candidate at the University of Konstanz after completing his master’s in computer and information science in 2023. His position is about interactive robotic painting with AI support. He has been working at the Chair of Visual Computing in Konstanz since 2021 and focuses on applying image processing methods and machine learning to computer graphics and robotic painting frameworks. He developed systems for automatic image abstraction, both for digital art and physical paintings, creating a complete painting pipeline from an arbitrarily selected input image to a final robotic painting. He aims to re-introduce human creativity and interaction into the automated abstraction process to create an interactive framework for robotic painting.

Student Projects:

Robotic Writing – David Silvan Zingrebe

David Silvan Zingrebe is a master’s student at the University of Konstanz after completing his bachelor’s in 2022. His current research focuses on developing a modular pipeline with the goal of accurately modeling the process of human handwriting and calligraphy. The pipeline consists of a stage for computing centerlines of a given text, extracting strokes from the centerlines, and finally applying a brush model to account for the behavior of the brush.

The brush model is obtained by drawing various calibration strokes and extracting relevant information from a picture of the resulting canvas. As part of his bachelor’s thesis, he developed a pipeline consisting of purely classical algorithms but is now applying machine learning-based approaches to parts of the pipeline.

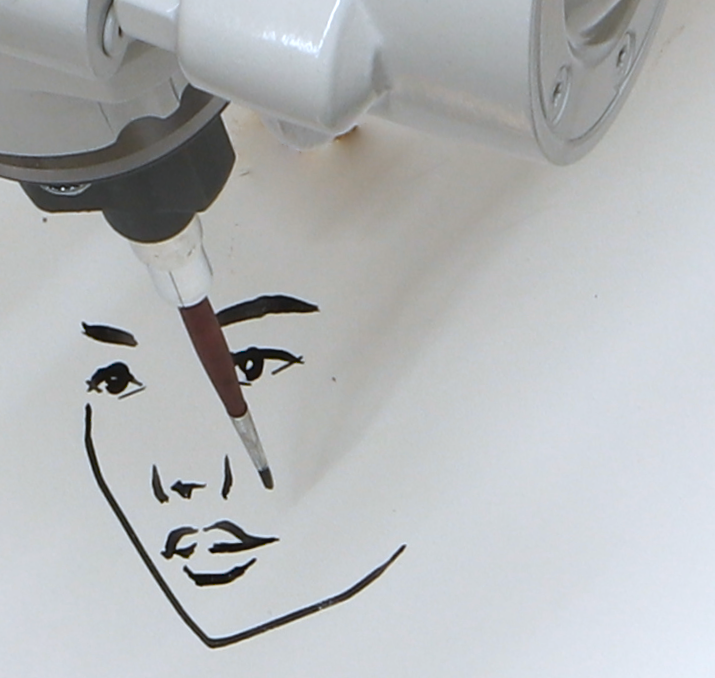

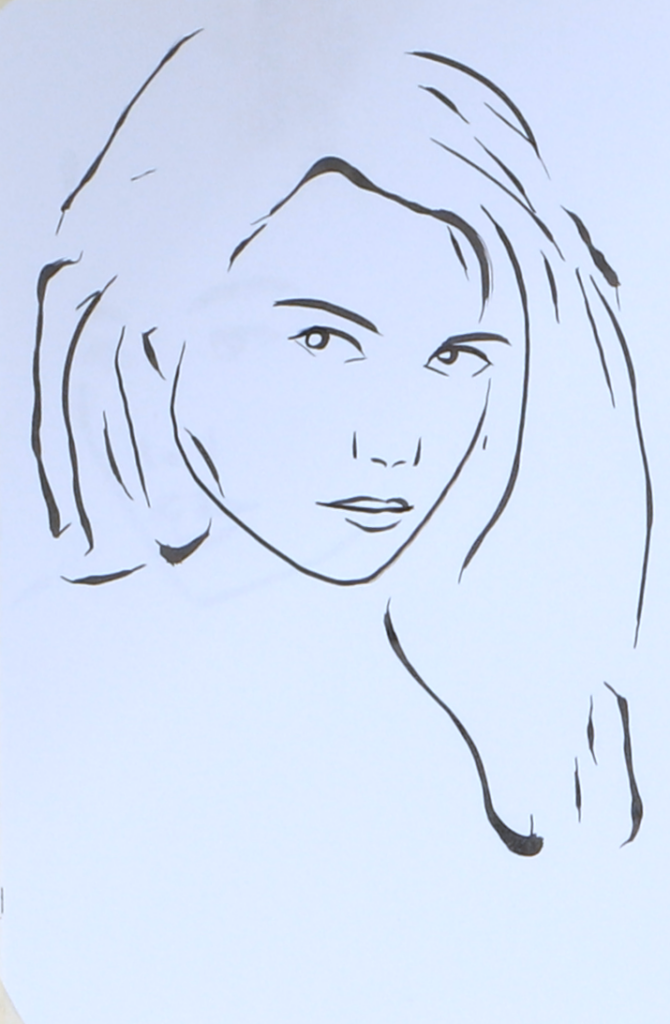

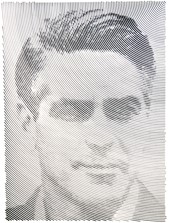

High-Speed Portraiture – Emily Bihler

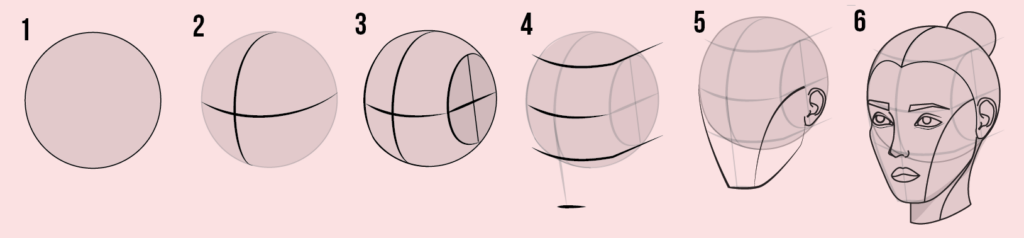

Throughout history, humans have maintained a keen interest in portraying themselves and others through the art of portraiture. Certain common principles guide artists to ensure facial recognition, whether opting for a realistic depiction or exploring more abstract styles.

This involves adhering to conventions such as correctly placing the eyes and mouth and their spatial relation to one another.

Emily’s project aimed to emulate the artistic process of creating a portrait based on the principles of the Loomis method. The results of the method were drawn by the e-David robot to create a full pipeline from image to physical drawing.

History

Project Outlook

One of our main focuses now is to shift away from the initial fixation on painting a specific image and instead start from an empty canvas that can be filled with different forms of generated media, such as strokes drawn with a digital pen, image crops, or AI-generated elements and backgrounds. Using different AI guidance systems, we want to let users give the system additional hints on how to realize a specific element on the canvas. We also want to apply the current abstraction framework so that artists can quickly get an initial abstraction of an object or a scene, which they can then enhance or modify to their heart’s content.

We want to facilitate artistic expressivity by allowing the robot to paint selected sub-parts of the canvas, making it less disconnected from digital art and scene composition design. This would also enable the artist to adjust their scene based on the actual results the e-David produces. We aim to create a new high-level input framework that can be used on any device and work with pressure-measuring display pens and touch screens to create an immediate input mode where an artist can draw on a tablet, and the robot arm realizes these strokes whenever the artist desires.

2022-Today: Region-Based Abstraction and Painting

The shape tree region abstraction method has been enhanced with machine learning-based techniques such as panoptic image segmentation for object recognition and was developed further in a project by Michael Stroh. Using an automatic threshold detection procedure, the newly developed image abstraction framework can decompose an almost arbitrary input image into a set of non-overlapping regions, keeping their number small enough to be realized by the e-David painting framework in a reasonable time. The method determines suitable abstraction parameters for each detected object in the input image and can thus automatically create different levels of abstraction and detail within a scene. A user can guide this object-based level of abstraction to create different result impressions.

Some digital abstraction results (rendered in 2023 by Michael Stroh) using the developed framework. The number of shapes per image is around 1500, which can be realized by the e-David system in about a day of automated painting.

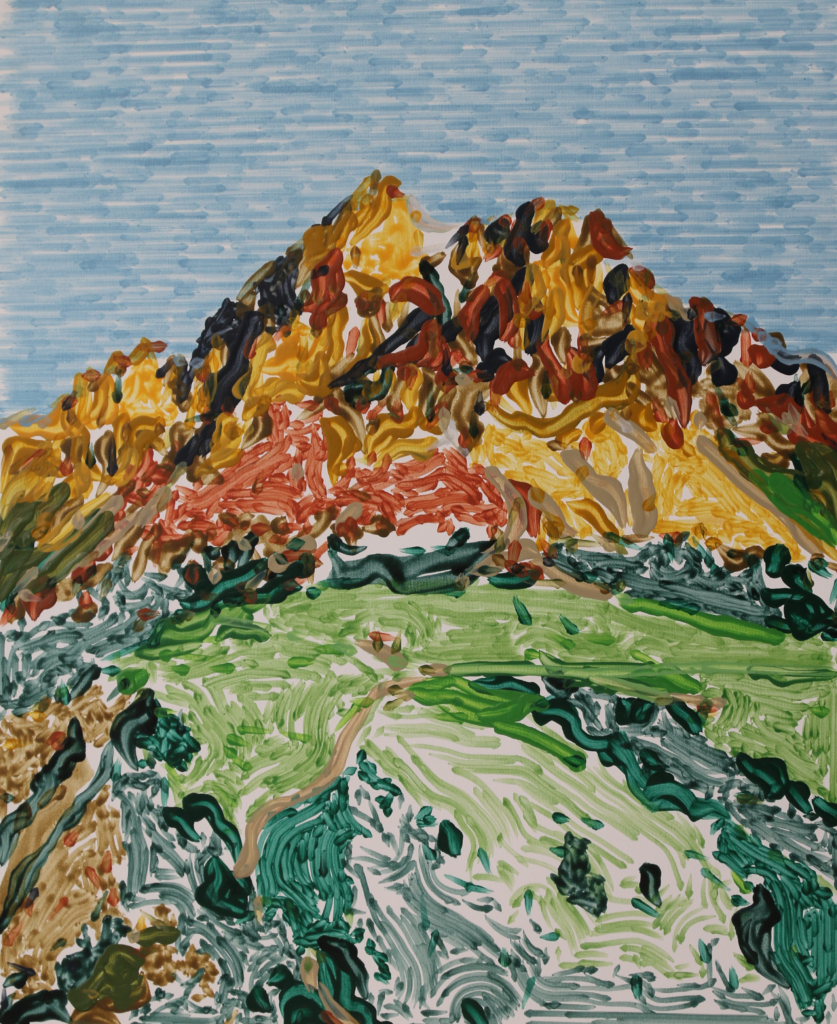

We further continue the research on increasing the realization fidelity of the individual shapes and how to implement different human artist-like painting styles using stroke placement and local color choice. The following shows an abstract acrylic painting of a mountain scene that was painted by e-David during the testing phase of the abstraction framework. Further tuning of the painting process, different region-filling strategies, specific stroke placement strategies, and region-blending are also being explored.

Left: digital abstraction result (rendered in 2023 by Michael Stroh) using the developed framework. The image has approximately 1000 regions of varying sizes. Right: the same image painted by the e-David system, “Flowing Mountain Meadow”, acrylic on cardboard, 60 * 40 cm, 2023 by Michael Stroh & Jörg-Marvin Gülzow.

2018-2022: Research on Region-Based Painting

The painting system software was overhauled to create a layered component design that connects the robotic hardware, the realization of the intended brush technique, and the creation of the image composition on different levels of technicality. This enables users to jump in and make changes to specific components of the painting setup, interacting with the robot directly or creating new brushstroke styles without needing to learn or be aware of the other components that run on the system. This design choice also allows different system configurations to exist in parallel for different users, painting styles, and material/tool setups.

We implemented a new brush calibration method tailored to precise line drawing by characterizing brush dynamics. This makes it possible to paint text fonts for any language and fragile strokes onto the canvas with good precision.

Based on paintings by artists like Paul Cézanne, Robert Delaunay, Wassily Kandinsky, and many others, we decided to approach robotic painting from a new perspective that focuses on realizing individual regions or shapes with specific characteristics instead of applying brushstrokes iteratively. This means that instead of trying to recreate a global painting style by approximating a template image, we identify regions of monochromatic color or a color composite within a given scene or even individual objects. We then realize these regions on the canvas using the improved ABB robot setup.

2016-2018: Modernization

One of the major changes we’ve implemented is the transition to ABB robots that allow better programming and more precise basic moves. We further comprehensively redesigned the painting hardware from the brush-/pen holder to the color palette and the brush-washing mechanism. The focus here has been to simplify the materials used in the process and their production method, making the required hardware easy and cost-effective to replace. With the available construction plans for these items, we can also make adjustments as needed to reorder and produce updated tool holders or palette designs.

A further optimization of the painting system is the creation of a tool holder that eliminates play and relies on a magnet-based mechanism. This enhances the precision of our tooling and significantly simplifies the manufacturing process. Our tool holders are now more reliable and durable, with easier production and maintenance.

Furthermore, we have upgraded our camera systems to make them more robust and resilient. This overhaul ensures that our visual feedback system is reliable and ensures better control over the visual quality of painted results.

2013-2016: Painting in Color

Soon, we had sufficiently perfected ink painting to take e-David another step forward: We further developed the painting software to handle grayscale and multicolor palettes. We also enabled the robot to switch between different brushes while painting. We decided to use acrylic paints because they dry quickly and are robust against overpainting, provided that drying times are respected for overlapping strokes.

On the other hand, oil paints are more difficult to handle, both for visual feedback and when cleaning brushes. Computer vision techniques were incorporated so that e-David could recognize objects in the input image to better map them. We assigned customized stylization methods to different objects respectively.

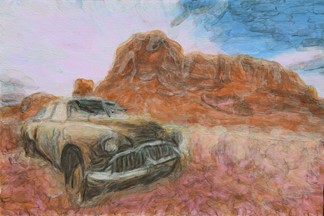

Two paintings were created with acrylic paint, brushes, and more complex painting algorithms. (Left: “Car in desert”, acrylic on cardboard, 60 * 40 cm, 2017. Right: “Constance harbor house”, acrylic on cardboard, 40 * 60 cm, 2016). Works by Dr. Thomas Lindemeier using the e-David

© VG-BildKunst, Liat Grayver

2012-2013: First Paintings Using Visual Feedback

We began to develop a painting program that used ink brushes to create black-and-white images on a paper canvas. Only black ink was used, so the canvas could only be darkened. The decision about where to add more strokes was based solely on the difference in brightness between the canvas and the original. Here, we used a camera-based setup for visual feedback that regularly captured the canvas. The length and curvature of the strokes could be varied for individual areas of the image. A meadow was represented with short, straight strokes, while a tree in the meadow was set apart visually by long strokes that follow its structure. Using this approach, we could generate complex images with their own unique style.

The first pictures painted with visual feedback. (Top left: “Tree on meadow”, ink on paper, 43 * 28 cm, 2013. Bottom left: “Hands”, ink on paper, 42 * 42 cm, 2012. Right: “Bird drawing”, 50 * 40 cm, 2013) by Dr. Thomas Lindemeier

2009-2012: Construction of the Painting Robot Assembly

In addition to installing the robot arm, we developed all system parts ourselves. Brush holders, a paint palette, and a brush cleaning system were planned and built. The first version of the painting software was programmed, which initially worked without visual feedback. This means that the first projects were painted from a fixed set of painting instructions, without dynamically adjusting the painting process to account for discrepancies between what the robot was supposed to paint and what actually ended up on the canvas.

In this illustration, some drawing results were created with felt pens and variable contact pressure.

Two examples from the first generation of images painted by e-David. Felt-tip pens were used with varying contact pressure to create a grayscale impression. (Left: “Portrait of George Clooney”, felt-tip pen on paper, 38 * 58 cm, 2010. Right: “Statue of Liberty”, felt-tip pen on paper, 38 * 58 cm, 2009) by Dr. Thomas Lindemeier