By Kevin Lewis & James Carty

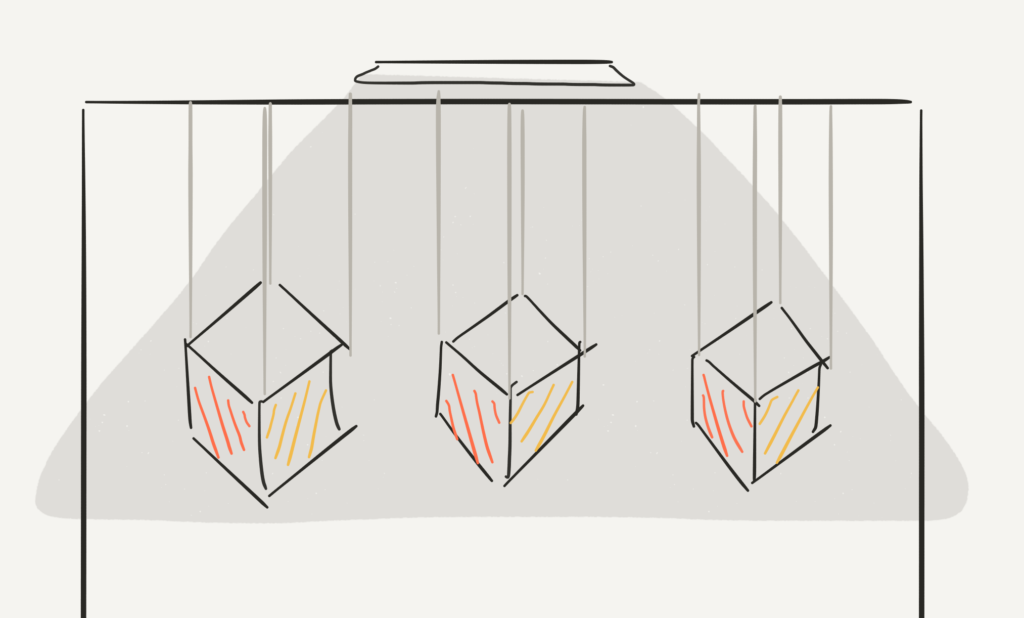

Carillon was an interactive installation in which children and playful adults would interact with a series of digital musical instruments (DMIs). The instruments were bells, projected onto suspended cardboard boxes, and users would play them by swinging the boxes.

Each box was to be hung from a railing at an angle that clearly presented two sides to the user. An image of the instrument would be projected onto one side of each box, whilst a visualisation representing the sound it created would be projected onto the other visible face.

As users swung the boxes, bell-like sounds would play alongside matching visualisations. After experimenting with various sounds, we found bells to be the instrument most naturally suited to the physical action of swinging the boxes. Played together, the bells would have formed a major chord.
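A major chord stacks a root, a major third (four semitones above the root) and a perfect fifth (seven semitones above the root). The sketch below computes those pitches in twelve-tone equal temperament. The root of C4 (about 261.63 Hz) and the idea of one bell per chord tone are assumptions for illustration; the key and the number of bells aren't specified here.

```javascript
// Semitone offsets of a major triad above its root:
// root, major third (4 semitones), perfect fifth (7 semitones).
const MAJOR_TRIAD = [0, 4, 7];

// Frequency of the note `semitones` above `rootHz` in 12-tone equal
// temperament, where each semitone multiplies the frequency by 2^(1/12).
function equalTemperedHz(rootHz, semitones) {
  return rootHz * Math.pow(2, semitones / 12);
}

// One frequency per chord tone (hypothetically, one per bell).
function majorChordHz(rootHz) {
  return MAJOR_TRIAD.map((s) => equalTemperedHz(rootHz, s));
}

// Hypothetical root: C4, about 261.63 Hz.
const bellFrequencies = majorChordHz(261.63);
console.log(bellFrequencies.map((f) => f.toFixed(2)).join(", "));
// 261.63, 329.63, 392.00
```

These are approximately C4, E4 and G4; any other root works the same way.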

Four LEDs were placed on each face of the box, one at each corner, to track the box's position. The projected instrument and visualisation would then follow the physical box without any user intervention.

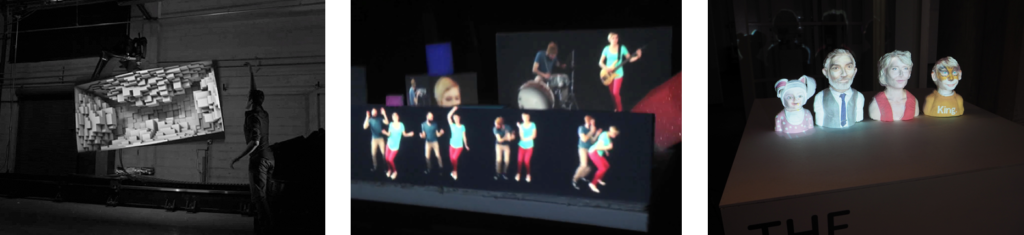

Carillon was inspired by Bot & Dolly's "Box" [1], Pomplamoose's "Happy Get Lucky" [2] and Joe McAlister's "The Watsons" [3]. We were intrigued by the idea of using projection mapping in an unusual context, bridging the gap between the 'digital world' and the 'physical world'. Installations involving projection mapping are often meticulously planned and involve little user interaction. We wanted our users to feel a sense of control over what was being shown and heard.

As you can tell from the past tense, we faced issues that ultimately stopped this project from working. Below, we explore why, along with our creative process, the challenges we faced and our attempts to overcome them.

Research

One recurring theme in our early research was the desire to build a digital musical instrument (DMI). We had just completed a piece of work involving projection mapping with colour tracking, so we decided it would be fun to build a DMI using a projection-mapped box.

The Ishikawa Watanabe Laboratory (University of Tokyo) has developed Lumipen [4], a low-latency projection tracking system. However, it relies on a custom hardware and software rig (including a 1000 fps camera and a saccade mirror array), and we didn't have the resources (time, money or skills) to produce anything similar. Our project was much less ambitious, so we decided to try building it with a technology we already knew: p5.js.

Excited by our idea, we started building before conducting further research, which, on reflection, was premature.

Build

Our first few milestones involved creating visuals and synthesised sounds. We had decided early on that suspending and swinging boxes would be an appealing way to interact with the piece, but hadn't yet settled on the audio-visual elements. Our first set of visuals can be seen here; they respond to changes in a single variable.

We had recently completed a separate piece of computer vision work, so we started from our code sample from that project, which tracked the most dominant colour bin of a given colour. However, this proved quite unreliable, so we instead based our project on Kyle McDonald's Computer Vision PointTracking example [5].
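For readers unfamiliar with colour tracking, the general idea can be sketched as follows. This is a minimal illustration with hypothetical names and parameters, not the code from that earlier project: each 8-bit channel is quantised into a small number of bins, pixels whose bins match the target colour's bins are collected, and their centroid is reported as the tracked position. Because a small change in lighting can push a pixel across a bin boundary, approaches like this can be fragile under projector light.

```javascript
// Quantise an 8-bit channel value (0-255) into one of `bins` buckets.
function binOf(value, bins) {
  return Math.min(bins - 1, Math.floor((value * bins) / 256));
}

// Centroid of all pixels whose quantised colour falls in the same bin as
// `target` ([r, g, b]). `pixels` is a flat RGBA array (4 values per pixel),
// the same layout as p5's `pixels` array at a pixel density of 1.
// Returns null when no pixel matches. `bins = 8` is an arbitrary default.
function trackColourBin(pixels, width, target, bins = 8) {
  const [tr, tg, tb] = target.map((c) => binOf(c, bins));
  let sumX = 0;
  let sumY = 0;
  let count = 0;
  for (let i = 0; i < pixels.length; i += 4) {
    if (
      binOf(pixels[i], bins) === tr &&
      binOf(pixels[i + 1], bins) === tg &&
      binOf(pixels[i + 2], bins) === tb
    ) {
      const p = i / 4; // pixel index
      sumX += p % width;
      sumY += Math.floor(p / width);
      count++;
    }
  }
  return count === 0 ? null : { x: sumX / count, y: sumY / count };
}

// Usage: a 4x4 black frame with two reddish pixels at (1, 1) and (2, 1).
const w = 4;
const frame = new Uint8ClampedArray(w * 4 * 4);
for (let i = 3; i < frame.length; i += 4) frame[i] = 255; // opaque alpha
for (const [x, y] of [[1, 1], [2, 1]]) {
  const i = (y * w + x) * 4;
  frame[i] = 250;
  frame[i + 1] = 10;
  frame[i + 2] = 10;
}
console.log(trackColourBin(frame, w, [255, 0, 0])); // { x: 1.5, y: 1 }
```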


Our first challenge was the PointTracking example's memory management. While the example worked reliably at first, after running for more than a minute it slowed down significantly. The array of coordinate values only ever grew, and the values were stored in an unusual data structure we hadn't encountered before. After exploring the structure, we spliced it to keep only the latest 4 pairs of values, which helped reduce the latency.
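The trimming step can be sketched as below, assuming the coordinates sit in a flat, interleaved array ordered oldest first. The function name `keepLatestPairs` is hypothetical and this is not the project's own code. It uses `slice` rather than `splice` because typed arrays such as `Float32Array` have no `splice` method, and because `slice` returns a new array instead of mutating the original.

```javascript
// Keep only the most recent `n` (x, y) pairs from a flat, interleaved
// array laid out as [x0, y0, x1, y1, ...], oldest first.
// Works for plain arrays and typed arrays alike, since both have `slice`.
function keepLatestPairs(flat, n) {
  if (n <= 0) return flat.slice(0, 0); // empty array of the same kind
  // A negative start counts from the end; if there are fewer than n pairs,
  // slice simply returns everything.
  return flat.slice(-2 * n);
}

const history = [0, 0, 1, 1, 2, 2, 3, 3, 4, 4, 5, 5]; // six pairs
const latest = keepLatestPairs(history, 4);
// latest is [2, 2, 3, 3, 4, 4, 5, 5]
```

Trimming on every frame keeps the array at a fixed size, so the cost of each frame no longer grows with running time.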

The example also used bit shifting, a programming concept that was new to us and took time to understand. To understand why it was being used, we contacted Kyle for support. We found out that curr_xy[j<<1] is equivalent to curr_xy[j].x and curr_xy[(j<<1)+1] is equivalent to curr_xy[j].y, because the array stores the coordinates as a flat list of x, y pairs. Learning this made the code much easier to work with.

@_phzn curr_xy is [x0 y0 x1 y1... xn yn], curr_xy[i<<1] is like saying curr_xy[i].x, curr_xy[(i<<1)+1] is like curr_xy[i].y. i<<1 is equal to i*2

— Kyle McDonald (@kcimc) April 11, 2017
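Kyle's explanation is easy to check on a small, made-up array. The helper names `xAt` and `yAt` are hypothetical, for illustration only. The last line shows why the parentheses in `(i<<1)+1` matter: in JavaScript, `+` binds more tightly than `<<`.

```javascript
// curr_xy stores points as a flat list: [x0, y0, x1, y1, ..., xn, yn].
// Point i starts at index 2 * i, which is what i << 1 computes for
// non-negative integers below 2^30.
function xAt(curr_xy, i) {
  return curr_xy[i << 1];
}

function yAt(curr_xy, i) {
  // Parentheses are required: `i << 1 + 1` parses as `i << 2` (4 * i).
  return curr_xy[(i << 1) + 1];
}

const curr_xy = [10, 20, 30, 40, 50, 60]; // three points
console.log(xAt(curr_xy, 1), yAt(curr_xy, 1)); // 30 40
console.log(curr_xy[1 << 1 + 1]); // 50 (index 4: the x of point 2, not the y of point 1)
```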

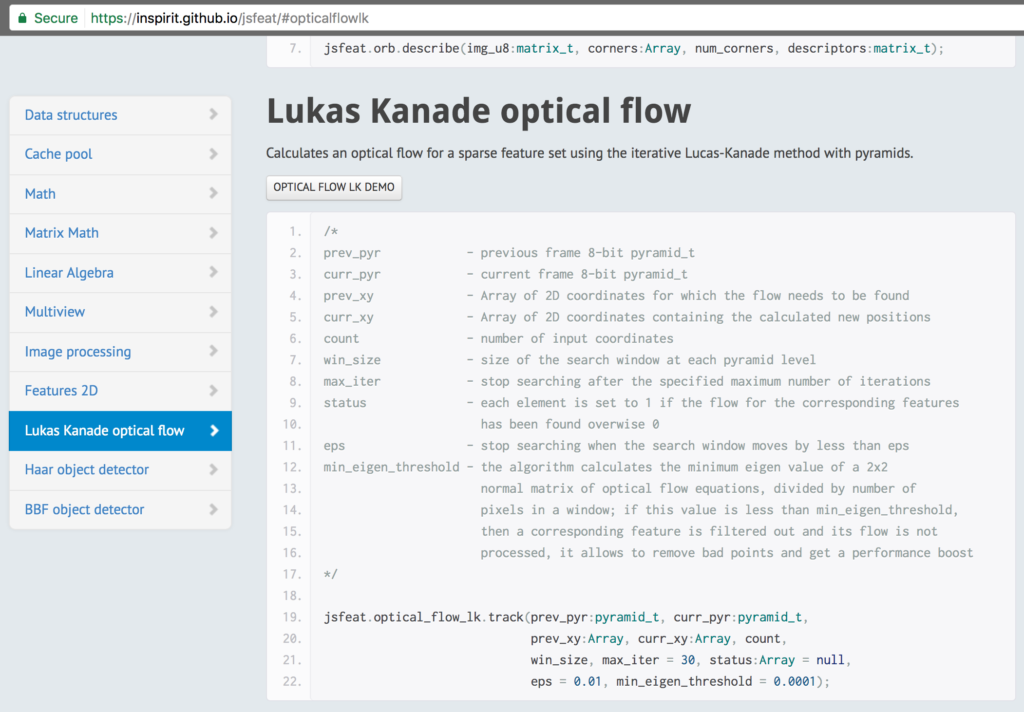

We still lacked understanding, so we had more conversations with Kyle. He was lovely, but unfortunately he let us know that the inner workings of his computer vision examples were actually powered by JSFeat, a computer vision library for JavaScript [6]. While this was a great piece of learning, the JSFeat documentation left much to be desired: the PointTracking example came straight from it, and Eugene Zatepyakin (the developer behind the library) didn't go into much depth about how the library works.

Instead of spending more time trying to understand JSFeat, we worked around the limits of our understanding by manipulating the values it provided and creating new data structures that served our purpose. We believe this lack of understanding was one of our biggest setbacks. We are both creatives who need to understand something at a low level, or we struggle to retain the knowledge. "It just works, so we'll leave it be" isn't an attitude we find easy to adopt, and we spent a lot of time trying to understand a complex library that was the backbone of our entire project.
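As a hypothetical illustration of that kind of workaround (not the structures used in the project), a flat `[x0, y0, x1, y1, ...]` array can be wrapped in an array of `{x, y}` objects and converted back when needed. The optional `count` parameter allows for a buffer that is allocated larger than the number of points currently being tracked; the function names are ours.

```javascript
// Convert a flat [x0, y0, x1, y1, ...] array into an array of {x, y}
// objects. `count` defaults to every pair in the array, but can be set
// lower when only the first `count` entries hold live points.
function toPoints(flat, count = flat.length >> 1) {
  const points = [];
  for (let i = 0; i < count; i++) {
    points.push({ x: flat[2 * i], y: flat[2 * i + 1] });
  }
  return points;
}

// Convert back to the flat layout, e.g. before handing data to code that
// expects interleaved coordinates.
function fromPoints(points) {
  const flat = [];
  for (const p of points) {
    flat.push(p.x, p.y);
  }
  return flat;
}

const corners = toPoints([0, 0, 10, 0, 10, 10, 0, 10]);
console.log(corners[2]); // { x: 10, y: 10 }
```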

Our next challenge was the limited processing power available to browser-based tools, which resulted in unacceptably high latency. For this project to work in a compelling way, the piece needed to react immediately to users' interactions, and our chosen tools didn't allow for this.
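To see why latency matters so much for a moving target, it helps to do the arithmetic: while a frame is being processed the box keeps moving, so the projection trails behind it by roughly speed × latency. The sketch below uses hypothetical numbers (a box moving at 100 cm/s and a three-frame delay at 30 fps), not measurements from Carillon; the function names are ours.

```javascript
// Latency, in milliseconds, of a pipeline that needs `frames` frames
// at `fps` frames per second.
function pipelineLatencyMs(frames, fps) {
  return (frames / fps) * 1000;
}

// How far (in cm) a box moving at `speedCmPerSec` travels during
// `latencyMs`: distance = speed × time.
function lagDistanceCm(speedCmPerSec, latencyMs) {
  return speedCmPerSec * (latencyMs / 1000);
}

// Hypothetical: 3 frames of delay at 30 fps, box moving at 100 cm/s.
const latency = pipelineLatencyMs(3, 30);
console.log(latency, lagDistanceCm(100, latency)); // 100 10
```

Under these assumptions the projection would trail the box by about 10 cm, and the gap grows with both speed and delay.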

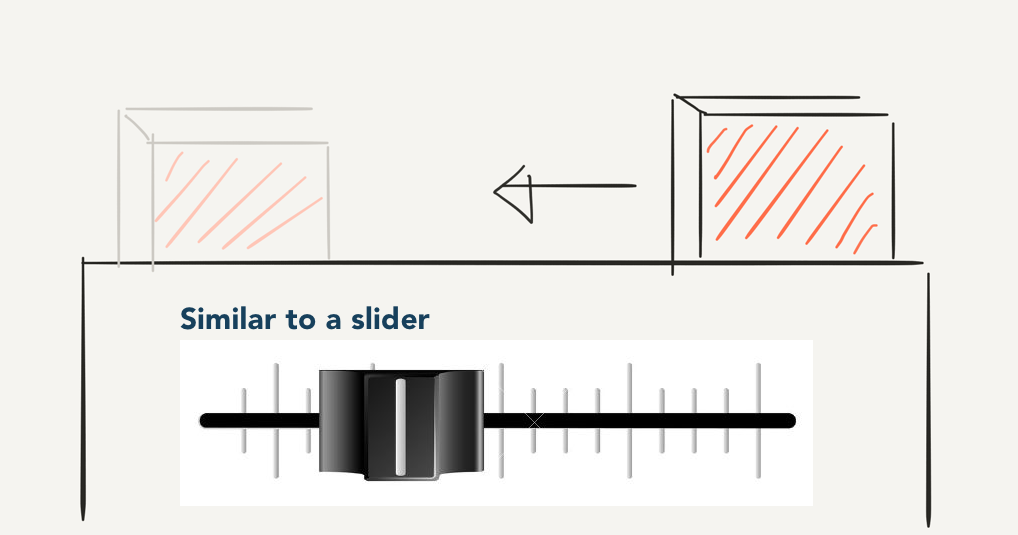

To accommodate this limitation, we significantly reduced the scope of the project. Carillon would now be a single face of a single box. Instead of swinging, the box would move back and forth horizontally, like a slider on a mixing desk, and it would make a constant sound that changed as the box moved.
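The slider-style interaction boils down to a linear mapping from the box's tracked position to a sound parameter. In the sketch below, `mapClamped` mirrors what p5.js's `map()` does when its optional `withinBounds` argument is `true`. Using volume (gain) as the controlled parameter and a 640-pixel frame width are assumptions, since which property of the sound changed isn't specified; the function names are hypothetical.

```javascript
// Linearly map `value` from [inMin, inMax] to [outMin, outMax], clamping
// the result to the output range (assumes inMin !== inMax).
function mapClamped(value, inMin, inMax, outMin, outMax) {
  const t = (value - inMin) / (inMax - inMin);
  const clamped = Math.min(1, Math.max(0, t));
  return outMin + clamped * (outMax - outMin);
}

// Hypothetical fader: the box's tracked x position (in camera pixels)
// controls the gain (0 = silent, 1 = full volume) of a constant tone.
function boxXToGain(boxX, frameWidth = 640) {
  return mapClamped(boxX, 0, frameWidth, 0, 1);
}

console.log(boxXToGain(320)); // 0.5
console.log(boxXToGain(-10), boxXToGain(700)); // 0 1 (clamped at the edges)
```

Clamping keeps tracking noise near the edges of the frame from pushing the parameter outside its valid range.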

Our piece worked by taking a video feed of the box and tracking the LED on each corner. We used p5.js in the browser to manually calibrate the position of the box relative to the projector, and then projected the graphic back onto the box. However, when we moved the box 10 cm to the left, the projected graphic also moved, but not nearly far enough. We believed a manually calibrated scaling mechanism could fix this, but for it to work, we needed to know how far the box moved between frames.
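One simple form of manually calibrated scaling fits a separate scale and offset for each axis from two known correspondences: where a point appears in the camera image, and where the projector must draw to land on it. This sketch assumes the camera and projector are roughly aligned, and ignores perspective and lens distortion; a more general approach is to fit a homography (perspective transform) from four corner correspondences. The function names and sample points are hypothetical.

```javascript
// Fit projector = scale * camera + offset for one axis, from two
// calibration correspondences (assumes cam1 !== cam2).
function fitAxis(cam1, proj1, cam2, proj2) {
  const scale = (proj2 - proj1) / (cam2 - cam1);
  return { scale, offset: proj1 - scale * cam1 };
}

// Fit both axes from two {x, y} camera points and the projector points
// they should map to.
function fitCalibration(camA, projA, camB, projB) {
  return {
    x: fitAxis(camA.x, projA.x, camB.x, projB.x),
    y: fitAxis(camA.y, projA.y, camB.y, projB.y),
  };
}

// Convert a tracked camera point into projector coordinates.
function cameraToProjector(cal, p) {
  return {
    x: cal.x.scale * p.x + cal.x.offset,
    y: cal.y.scale * p.y + cal.y.offset,
  };
}

// Hypothetical calibration: two marked camera points and where the
// projector had to draw to hit them.
const cal = fitCalibration({ x: 100, y: 100 }, { x: 200, y: 150 },
                           { x: 300, y: 200 }, { x: 700, y: 400 });
console.log(cameraToProjector(cal, { x: 200, y: 150 })); // { x: 450, y: 275 }
```

Here both scale factors come out at 2.5, so a movement of 1 camera pixel needs 2.5 projector pixels. If the scale is implicitly left at 1, a projected graphic can move in the right direction but by too little.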

Because we could only work with the coordinate points provided by JSFeat, which were updated every frame, we didn't know how to calculate the difference between frames. We changed our array splice to keep the latest 8 points (2 sets of 4) and then calculated the average x and y movement of the four corners, but we could never get it working quite right. This had us stumped for over 70 hours, and eventually we realised that, combined with the high latency, this meant our idea wouldn't come to fruition with our chosen tools.
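The averaging step can be sketched as below, assuming both frames use the flat `[x0, y0, x1, y1, ...]` layout and that point `i` refers to the same LED in both frames. That correspondence matters: if a tracker drops or reorders points between frames, the average no longer measures real movement. The function name and sample values are hypothetical.

```javascript
// Average (dx, dy) of `count` tracked points between the previous and the
// current frame. Both arrays are flat [x0, y0, x1, y1, ...] and point i
// must be the same physical LED in both.
function averageDisplacement(prevXY, currXY, count) {
  if (count === 0) return { dx: 0, dy: 0 };
  let dx = 0;
  let dy = 0;
  for (let i = 0; i < count; i++) {
    dx += currXY[2 * i] - prevXY[2 * i];
    dy += currXY[2 * i + 1] - prevXY[2 * i + 1];
  }
  return { dx: dx / count, dy: dy / count };
}

// Four corners of a box that moved 3 px right and 1 px up between frames.
const prevCorners = [0, 0, 10, 0, 10, 10, 0, 10];
const currCorners = [3, -1, 13, -1, 13, 9, 3, 9];
console.log(averageDisplacement(prevCorners, currCorners, 4)); // { dx: 3, dy: -1 }
```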

Our code, as it stood when we stopped development, can be found here (box).

Evaluation

Our evaluation framework looked at three core areas:

- Is the project easily understood? Will users be able to approach it and know what is expected of them?

- Is the project reliable? Does it work as expected, consistently?

- Is post-use sentiment positive? Based on a post-use interview, how did users feel when interacting with the project?

As we never got Carillon to a point where users could interact with it, we were unable to carry out our planned evaluation.

Learnings

We spent several weeks creating visuals and audio before building a prototype of the projection tracking system. It was three weeks before we were encouraged to focus on the box itself, by which time we had already invested enough resources (time and energy) in the idea that we were reluctant to change it. Had we spent more time researching by doing at the start, we would have realised how low the project's feasibility was and pivoted to something else.

We chose our tools because we were proficient in them. We should have realised sooner that p5.js wasn't the right technology, at which point we might have had enough time to learn an alternative framework (openFrameworks, for example) that could have made this project a reality.

This project ultimately failed because we spent too little time testing the idea at the start of the creative process. Had we done so, we might have realised that what we were trying to achieve was quite demanding, and either been better prepared or worked on another interpretation of a physical DMI.

References

[1] Bot & Dolly, "Box", https://www.youtube.com/watch?v=lX6JcybgDFo, 2013

[2] Pomplamoose, "Pharrell Mashup (Happy Get Lucky)", https://www.youtube.com/watch?v=i7X8ZnmLfM0, 2014

[3] Joe McAlister, "The Watsons", https://joemcalister.com/the-watsons/, 2016

[4] Ishikawa Watanabe Laboratory, "Lumipen 2", http://www.k2.t.u-tokyo.ac.jp/mvf/LumipenRetroReflection/index-e.html, 2014

[5] Kyle McDonald, "cv-examples PointTracking", https://kylemcdonald.github.io/cv-examples/PointTracking/, 2016

[6] Eugene Zatepyakin, "JSFeat", https://inspirit.github.io/jsfeat/, 2016